Summary

Call center quality assurance (QA) transforms customer interactions by systematically monitoring, evaluating, and improving agent performance. This guide covers the fundamentals of call quality, explains why QA matters for business success, and provides 11 actionable best practices to continuously enhance your quality assurance program. Modern QA combines human expertise with AI-powered tools to analyze 100% of interactions, deliver real-time coaching, and drive measurable improvements in customer satisfaction and operational efficiency.


A. What is call center quality assurance?

Call center quality assurance is a systematic process that ensures customer interactions align with your business standards and objectives. It involves monitoring conversations, evaluating agent performance, and implementing improvements to enhance service delivery.

Key components of call center QA include:

- Call monitoring

- Call scoring

- Sentiment analysis

- Call calibration

- Performance metric analysis

- Agent training and coaching

- Quality scorecards

At Enthu, we’ve discovered a better path. When contact centers shift to strategic quality frameworks, they unlock genuine operational transformation. Customer delight increases and agent engagement strengthens. Business outcomes improve across every metric that matters.

Today’s QA programs analyze calls, chats, emails, and text interactions to identify improvement opportunities. They track compliance adherence, measure customer sentiment, and provide actionable feedback to agents.

As Enthu.AI’s co-founder explains:

“A well-run QA program is like having a mirror that reflects our best and worst moments, helping us improve fast.” – Tushar Jain, Co-Founder & CEO, Enthu.AI

Let’s dive into the basics of call center quality assurance, why it is important, and the eleven best practices we gathered from industry experts and our own experience.

B. What does good quality assurance for call centers look like?

If you don’t know what “good” looks like, how will you ever get there?

That’s the real challenge for most QA teams: measuring success beyond just a scorecard.

Most call centers aim for an 85% QA score or higher. This means agents are generally hitting key standards.

If you’re above 90%, great, you’re running a tight ship.

But if you’re regularly under 80%, it’s time to dig deeper. Are agents unclear on expectations?

Is the feedback loop broken?

Pass/Fail Thresholds

The industry norm is a 70-75% pass threshold.

But in regulated industries like finance or healthcare, you might need 100% accuracy on compliance sections, no exceptions.
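To make these benchmarks concrete, here is a minimal Python sketch. The band names ("excellent", "watch", and so on) and the function names are our own illustrative choices, not an industry standard, and the 80-85% band is an assumption since the benchmarks above don't name it.

```python
def classify_qa_score(score_pct: float) -> str:
    """Bucket an average QA score using the benchmarks quoted above."""
    if score_pct > 90:
        return "excellent"      # above 90%: running a tight ship
    if score_pct >= 85:
        return "on target"      # the common 85%+ goal
    if score_pct >= 80:
        return "watch"          # assumed label for the unnamed 80-85% band
    return "investigate"        # under 80%: time to dig deeper


def passes_evaluation(score_pct: float, compliance_ok: bool,
                      pass_threshold: float = 75.0,
                      regulated: bool = False) -> bool:
    """Pass/fail for a single evaluation.

    In regulated settings, any compliance miss fails the evaluation
    regardless of the overall score.
    """
    if regulated and not compliance_ok:
        return False
    return score_pct >= pass_threshold
```

Adjust `pass_threshold` to whatever your own program uses; 75.0 is just the upper end of the range mentioned above.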

Top Agent Patterns:

Your best reps usually:

- Handle objections like pros

- Balance empathy with efficiency

- Stick to scripts without sounding robotic

They’re not just following rules; they’re creating great customer moments.

Want to get more out of your coaching sessions? Read this quick guide on how to run coaching that actually drives performance.

Related: How to Improve Quality Assurance in a Call Center? (2026).

C. Benefits of quality assurance in call centers

Implementing robust QA processes delivers measurable returns across multiple business dimensions. Here are some reasons why customer service quality assurance is important for your call centers:

1. Enhanced customer satisfaction

Systematic quality monitoring identifies and resolves service issues before they escalate. When agents consistently follow best practices, customers receive better experiences that drive loyalty and positive word-of-mouth.

2. Improved agent performance

Regular feedback and coaching help agents develop skills and confidence. QA data reveals training needs, enabling targeted development that accelerates proficiency.

3. Increased first call resolution

Contact center quality assurance programs identify common issues that require multiple contacts to resolve. By addressing root causes, you reduce repeat calls and improve operational efficiency.

4. Compliance assurance

Automated monitoring ensures agents follow regulatory requirements and company policies. Real-time alerts flag potential violations before they become costly compliance issues.

5. Reduced operational costs

QA programs identify inefficiencies like excessive handle times, unnecessary transfers, and agent downtime. Eliminating these waste sources directly improves your bottom line.

6. Competitive differentiation

In markets where 73% of consumers say customer experience is their top buying consideration, superior service quality becomes a powerful competitive advantage.

D. What are some top challenges of call center quality assurance?

Understanding common QA obstacles helps you proactively design programs that overcome them. Here are some common challenges of call center QA:

1. Limited evaluation coverage

Manual QA processes typically assess only 1-2% of interactions due to time constraints. This small sample size fails to capture true performance patterns and leaves blind spots.

2. Evaluator bias and inconsistency

Different evaluators often score the same interaction differently, creating fairness concerns and reducing data reliability. Without regular calibration, scoring standards drift over time.

3. Time-consuming manual reviews

Listening to full call recordings and completing evaluation forms consumes significant supervisor time. This limits how many interactions can be reviewed and delays feedback delivery.

4. Agent resistance

Some agents perceive QA as punitive monitoring rather than developmental support. This mindset creates defensiveness and limits improvement potential.

5. Balancing quantity and quality

Contact centers face constant tension between call volume targets and quality standards. Overemphasis on speed metrics can compromise service excellence.

6. Technology integration complexity

Implementing new call center quality assurance software requires integration with existing systems like CRM platforms and workforce management software. Legacy infrastructure can complicate deployment.

7. Keeping pace with volume

Traditional QA methods struggle to maintain consistent evaluation coverage as call volumes fluctuate. Manual processes can’t scale to match demand spikes.

E. Essential metrics for call center QA

Tracking the right call center quality assurance metrics ensures your QA program drives meaningful business outcomes.

| Metric | What It Measures | Why It Matters |
| --- | --- | --- |
| First Call Resolution (FCR) | Percentage of issues resolved on first contact | Directly impacts customer satisfaction and operational efficiency |
| Average Handle Time (AHT) | Average duration of customer interactions | Balances efficiency with thorough service delivery |
| Customer Satisfaction (CSAT) | Customer ratings of service experience | Primary indicator of service quality and customer perception |
| Net Promoter Score (NPS) | Likelihood customers will recommend your business | Measures customer loyalty and predicts revenue growth |
| Quality Score | Agent compliance with evaluation criteria | Assesses adherence to standards and identifies training needs |
| Compliance Score | Adherence to regulatory and policy requirements | Prevents violations and reduces legal/financial risk |
| Call Monitoring Score | Performance across communication skills, empathy, and problem-solving | Provides comprehensive agent performance assessment |
| Customer Sentiment | Emotional tone detected in conversations | Identifies at-risk customers and coaching opportunities |
| Schedule Adherence | Time agents are available for customer interactions | Impacts service levels and workforce efficiency |

These metrics work together to provide a complete picture of contact center performance. Track them consistently to identify trends, measure improvement, and demonstrate QA program value.
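If you want to see how a few of these numbers are typically calculated, the sketch below shows one common convention for each. The function names are hypothetical, and the cut-offs are assumptions you should match to your own surveys: CSAT counts ratings of 4 or 5 on a 5-point scale as satisfied, and NPS uses the standard 0-10 scale with promoters at 9-10 and detractors at 0-6.

```python
def first_call_resolution(resolved_first_contact: int, total_issues: int) -> float:
    """FCR: percentage of issues resolved on the first contact."""
    return 100.0 * resolved_first_contact / total_issues


def average_handle_time(handle_seconds: list[float]) -> float:
    """AHT: mean handle time per interaction, in seconds.

    Each entry is assumed to already include talk, hold, and
    after-call work time for one interaction.
    """
    return sum(handle_seconds) / len(handle_seconds)


def csat(ratings: list[int], satisfied_min: int = 4) -> float:
    """CSAT: percentage of ratings at or above `satisfied_min`."""
    satisfied = sum(1 for r in ratings if r >= satisfied_min)
    return 100.0 * satisfied / len(ratings)


def nps(scores: list[int]) -> float:
    """NPS: percentage of promoters minus percentage of detractors."""
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)
```

For example, `nps([10, 9, 8, 6, 3])` has two promoters and two detractors out of five responses, so the score is 0.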

F. 11 call center quality assurance best practices for 2026

Modern QA demands a strategic approach that combines proven methodologies with innovative technology. Here’s how to build a world-class quality assurance program that drives measurable results.

1. Establish clear, measurable QA standards

Stop the guessing game. Write down exactly what “great service” looks like at your company.

Document everything, from greetings to tone, problem-solving approaches, and closings.

Create simple scorecards with 3-4 sections covering:

- Process adherence: Did they follow the right steps?

- Product knowledge: Did they know their stuff?

- Empathy & tone: Were they genuinely helpful?

- Issue resolution: Did they solve the problem?

Use number scales (0-5) for soft skills and yes/no checkboxes for must-do compliance items. Clear standards eliminate confusion and help agents succeed.
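Here is a rough sketch of how such a scorecard could be represented in Python. The class, field names, and sample items are hypothetical; the only things taken from the text above are the 0-5 scale for soft skills and the yes/no treatment of compliance items.

```python
from dataclasses import dataclass


@dataclass
class Scorecard:
    # Soft-skill items rated 0-5, e.g. {"empathy": 4}
    soft_skill_ratings: dict[str, int]
    # Must-do compliance items recorded as yes/no, e.g. {"disclosure": True}
    compliance_checks: dict[str, bool]

    def soft_skill_pct(self) -> float:
        """Soft-skill points earned as a percentage of the maximum."""
        max_points = 5 * len(self.soft_skill_ratings)
        return 100.0 * sum(self.soft_skill_ratings.values()) / max_points

    def compliance_passed(self) -> bool:
        """True only if every must-do item was completed."""
        return all(self.compliance_checks.values())


card = Scorecard(
    soft_skill_ratings={"process": 3, "product": 5, "empathy": 4, "resolution": 4},
    compliance_checks={"disclosure_read": True, "identity_verified": False},
)
```

Keeping the compliance result separate from the soft-skill percentage means a missed must-do item can't be hidden by a high average.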

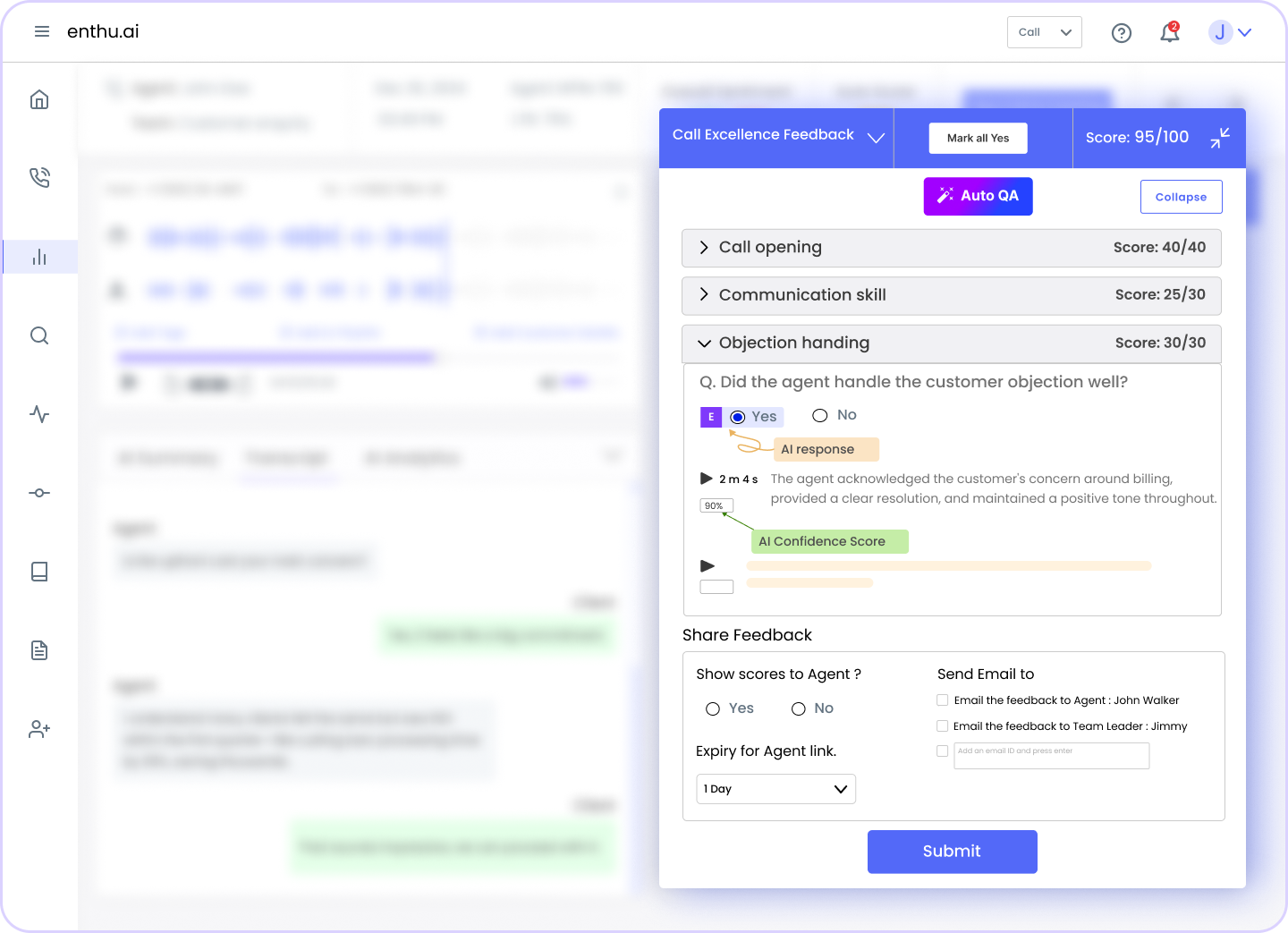

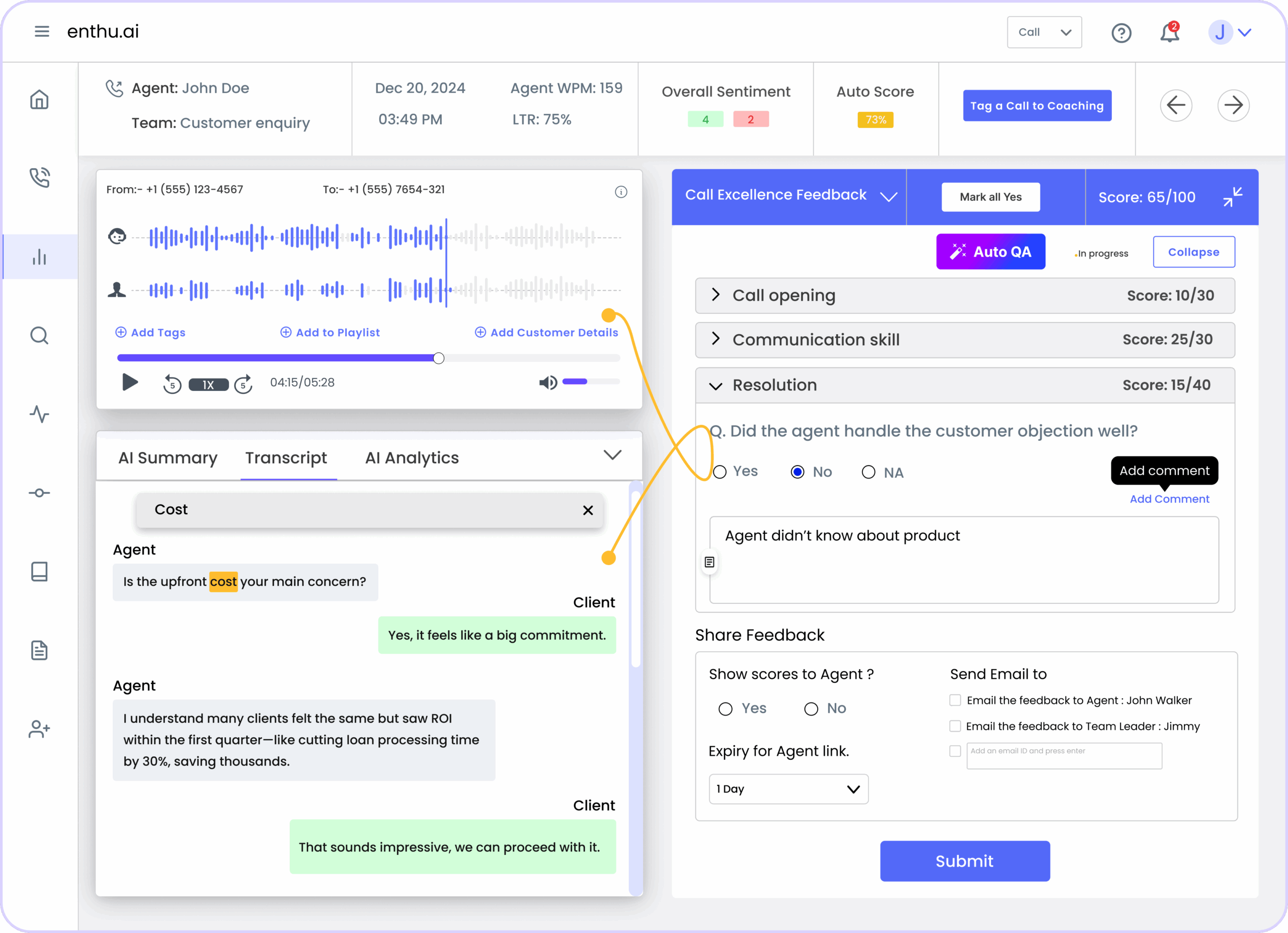

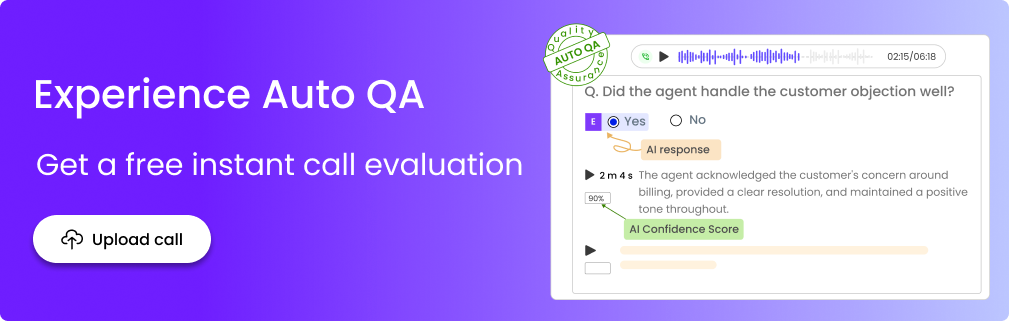

2. Implement AI-powered auto-scoring

Listening to every single call manually? That’s impossible.

AI-powered auto-scoring analyzes 100% of your calls with 90%+ accuracy. The technology identifies customer mood, checks script compliance, and flags potential issues automatically.

It works in 20+ languages and can reduce your average handle time. Your supervisors stop drowning in call reviews and start actually coaching.

AI spots patterns humans miss while cutting evaluation time dramatically. Keep humans involved for complex situations, but let technology handle the routine work.

Enthu pro tip: Utilize AI auto-scoring that evaluates 100% of conversations, freeing your QA team to focus on strategic coaching rather than manual call reviews.

3. Use statistically significant sample sizes

Checking five calls per month per agent tells you almost nothing. You need real data, not random snapshots. Aim to evaluate at least 10% of interactions – use AI to make this realistic.

Key requirements:

- Spread reviews throughout the month (not just Monday mornings)

- Include different customer types and complaint calls

- Cover peak hours and slow periods

- Collect minimum 5 customer surveys per agent monthly

Bigger sample sizes mean reliable insights you can actually use to improve performance. Small samples lead to bad decisions and unfair evaluations.
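If you want a statistical starting point rather than a flat percentage, one common approach for estimating a proportion (such as a pass rate) is Cochran's formula with a finite population correction. The sketch below assumes a 95% confidence level (z = 1.96), a 5% margin of error, and the most conservative expected proportion of 0.5; the function name is our own, and your analysts may prefer different inputs.

```python
import math


def required_sample_size(population: int, margin_of_error: float = 0.05,
                         z: float = 1.96, proportion: float = 0.5) -> int:
    """Interactions to review to estimate a proportion within the margin.

    Cochran's formula gives the sample size for a very large population;
    the finite population correction shrinks it for smaller volumes.
    """
    n0 = (z ** 2) * proportion * (1 - proportion) / margin_of_error ** 2
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)
```

Under these assumptions, a team handling 2,000 interactions a month would need roughly 323 reviews for a program-wide estimate. Per-agent conclusions need their own sample, which is where automated scoring helps.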

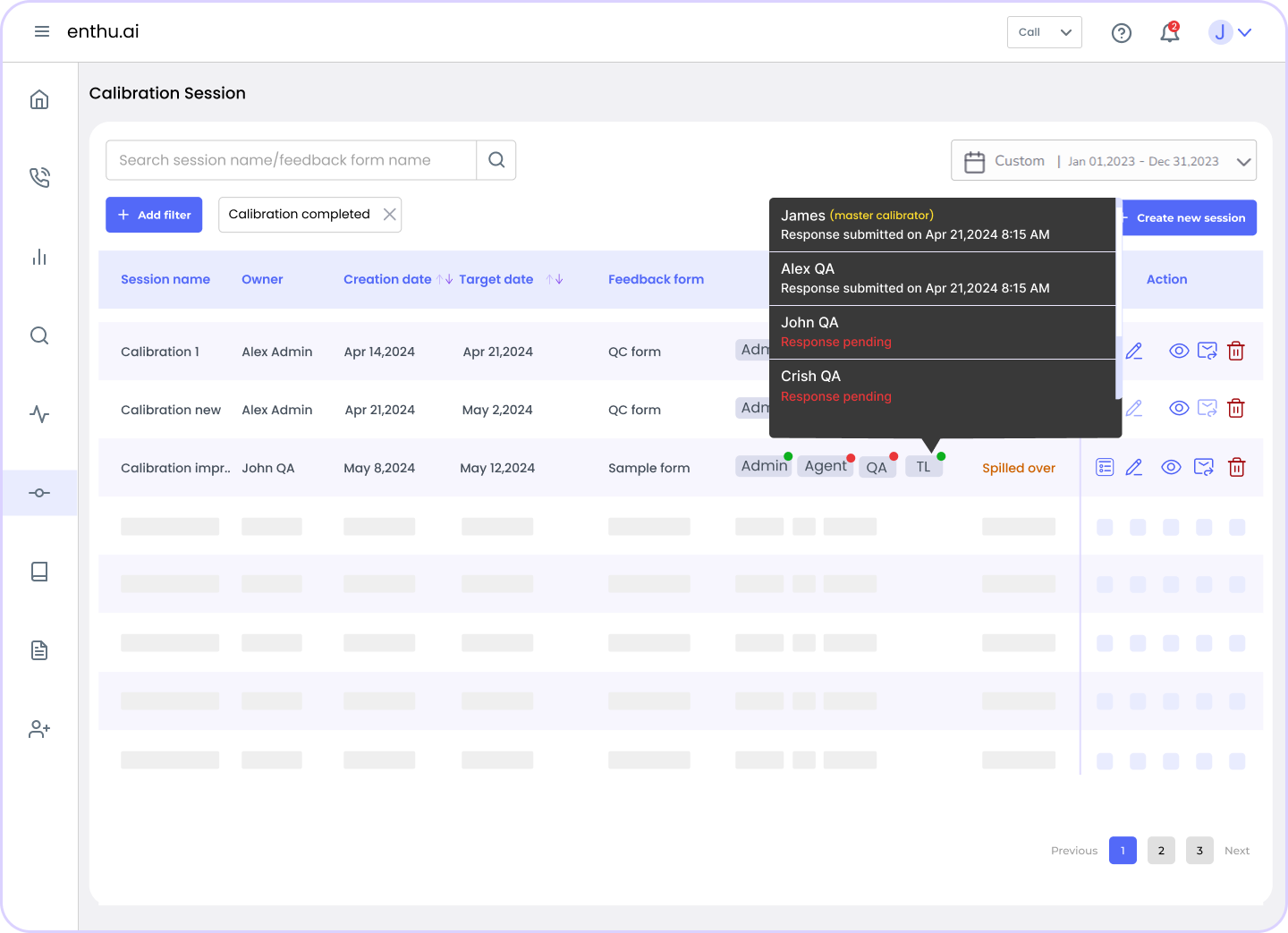

4. Conduct regular calibration sessions

Ever notice how different supervisors score the same call completely differently? That’s a huge problem.

Weekly or biweekly calibration sessions fix this.

Have your evaluators score identical calls independently, then compare notes. Where did scores differ? Why? Talk it through until everyone’s aligned.

Send calls to reviewers a couple days early so meetings focus on discussion, not listening. Document every decision in a shared file everyone can reference. Invite agents to occasional sessions for their input.
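A simple way to prepare for a calibration meeting is to compute how far apart evaluators landed on each call. This is a minimal sketch: the data layout, the evaluator names, and the 5-point tolerance are all assumptions for illustration.

```python
from statistics import mean


def calibration_report(scores_by_call: dict[str, dict[str, float]],
                       max_spread: float = 5.0) -> dict[str, dict]:
    """Summarize evaluator agreement per call.

    `scores_by_call` maps call ID -> {evaluator name -> score}.
    A call is flagged when the gap between the highest and lowest
    score exceeds `max_spread`.
    """
    report = {}
    for call_id, scores in scores_by_call.items():
        values = list(scores.values())
        spread = max(values) - min(values)
        report[call_id] = {
            "mean": mean(values),
            "spread": spread,
            "needs_discussion": spread > max_spread,
        }
    return report


sample = {
    "call-1": {"ana": 88, "ben": 90, "cy": 86},
    "call-2": {"ana": 70, "ben": 85, "cy": 92},
}
report = calibration_report(sample)
```

In this made-up sample, `call-2` has a 22-point spread and would go to the top of the agenda, while `call-1` is within tolerance.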

5. Deliver real-time feedback and coaching

Real-time coaching transforms performance instantly. Modern agent coaching tools can alert supervisors during live calls when something’s going wrong – low satisfaction signals, missed compliance steps, rising customer frustration.

Agents can course-correct immediately instead of repeating mistakes for weeks. The AI suggests next-best actions based on what’s happening right now in the conversation.

This immediate feedback loop cuts handle time and boosts outcomes dramatically. Contact centers coaching on four or more calls see the strongest results. Make coaching conversations, not lectures.

Enthu.ai Tip: Use an AI tool that provides real-time agent coaching with instant alerts when conversations need supervisor intervention, enabling immediate performance correction.

6. Capture and analyze every interaction

You can’t improve what you don’t measure. Recording every interaction creates a complete record for protection and learning.

Why 100% recording matters:

- Dispute resolution: Proof when customers dispute what was said

- Compliance coverage: Complete audit trails for regulatory requirements

- Pattern recognition: Spot trends across hundreds of conversations

- Training material: Real examples for coaching sessions

Use platforms that automatically organize, transcribe, and tag interactions so they’re searchable later. This gives you the data foundation for coaching, training, and strategic decisions.

7. Leverage speech and text analytics

Your agents have thousands of conversations monthly – what are customers really saying?

Speech analytics uncovers hidden gold.

The technology automatically identifies trending complaints, emotional triggers, and phrases that lead to great outcomes. It flags compliance risks before they become expensive problems.

Sentiment analysis tracks when conversations turn negative so you can train agents on those exact moments.

Studies show speech analytics can boost productivity by 40%. The AI predicts which customers might churn based on conversation patterns, enabling proactive saves.

Enthu.ai Tip: Leverage sentiment analysis and speech analytics tools that help you automatically surface coaching opportunities and compliance risks across 100% of your interactions.

8. Create channel-specific scorecards

Judging an email by phone call standards makes zero sense. Each channel has unique quality requirements.

Channel-specific focus areas:

- Phone calls: Tone, pace, verbal empathy, active listening

- Emails: Grammar, clarity, completeness, structure

- Chat: Response speed, multitasking, efficient canned responses

- Social media: Public professionalism, brand voice, crisis management

Create separate evaluation forms for each channel reflecting what actually matters there. Weight the scoring differently too – empathy might be heavily weighted on phone calls but less critical in password reset emails.
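To illustrate the weighting idea, here is a small Python sketch. The criteria and weight values are invented for the example; the point is only that the same 0-5 ratings produce different scores depending on the channel's weights.

```python
# Hypothetical weights per channel; each channel's weights sum to 1.0.
CHANNEL_WEIGHTS = {
    "phone": {"empathy": 0.4, "accuracy": 0.3, "efficiency": 0.3},
    "email": {"empathy": 0.1, "accuracy": 0.5, "efficiency": 0.4},
}


def weighted_score(channel: str, ratings: dict[str, int]) -> float:
    """Weighted score as a percentage, given 0-5 ratings per criterion."""
    weights = CHANNEL_WEIGHTS[channel]
    weighted_avg = sum(weights[c] * ratings[c] for c in weights)
    return round(weighted_avg / 5 * 100, 1)


# Accurate and efficient, but low on empathy.
ratings = {"empathy": 2, "accuracy": 5, "efficiency": 5}
phone_score = weighted_score("phone", ratings)
email_score = weighted_score("email", ratings)
```

With these example weights, the same interaction scores 76.0 as a phone call and 94.0 as an email, because empathy counts for much less in the email form.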

9. Incorporate customer feedback

Your internal scores only tell half the story. Customers have the other half.

Send brief surveys immediately after interactions while experiences are fresh. Keep them short: 2-3 questions at most, covering satisfaction, resolution, and overall experience. Connect survey results directly to agent records in your CRM.

When customers rate service poorly, trigger immediate follow-up workflows.

Compare what your QA team scores versus what customers report. Use customer feedback to refine your scorecards and training priorities.
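One lightweight way to run that comparison is to put both scores on the same scale and flag the interactions where they disagree. The sketch below assumes a 1-5 CSAT rating, a 0-100 QA score, and a 20-point gap threshold; the field names and threshold are hypothetical.

```python
def find_score_gaps(interactions: list[dict],
                    gap_threshold: float = 20.0) -> list[tuple[str, float]]:
    """Return (interaction ID, gap) where QA and customer views diverge.

    A positive gap means the QA score was higher than the customer's
    rating; a negative gap means the customer was happier than QA.
    """
    gaps = []
    for item in interactions:
        csat_pct = (item["csat"] - 1) / 4 * 100  # map 1-5 onto 0-100
        gap = item["qa_score"] - csat_pct
        if abs(gap) >= gap_threshold:
            gaps.append((item["id"], round(gap, 1)))
    return gaps


reviewed = [
    {"id": "a", "qa_score": 90, "csat": 2},
    {"id": "b", "qa_score": 80, "csat": 4},
]
gaps = find_score_gaps(reviewed)
```

Interactions with a large positive gap are good candidates for scorecard review: the agent met the form's criteria, but the customer still left unhappy.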

10. Engage agents in the QA process

QA programs fail when agents feel monitored instead of supported. Flip the script – involve them from day one.

How to engage agents effectively:

- Let them help design scorecards (builds understanding and buy-in)

- Encourage self-evaluations before supervisor reviews

- Allow formal dispute processes for fairness

- Implement peer reviews between experienced agents

- Share trends and insights openly, not secretively

Peer feedback often lands better than management critique.

11. Link QA to continuous training

QA scores sitting in spreadsheets help nobody. Transform findings into targeted coaching and training. Build personalized development plans addressing each agent’s specific gaps.

When five agents struggle with the same objection, that’s a team training opportunity. Create short microlearning modules that each tackle a single skill; these work better than marathon training sessions. Coach frequently on multiple calls, not once quarterly.

Track whether coached behaviors actually improve in later evaluations. This closed loop proves training ROI. Gamify improvements to make development motivating instead of punitive.
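Closing the loop can start with something as simple as comparing an agent's scores on the coached behavior before and after the session. This sketch uses a plain difference of averages; the function name and sample numbers are illustrative, and with only a handful of evaluations the result is a signal to watch, not proof.

```python
from statistics import mean


def coaching_lift(scores_before: list[float], scores_after: list[float]) -> float:
    """Average score change on a coached behavior (after minus before)."""
    return mean(scores_after) - mean(scores_before)


# Objection-handling scores for one agent, before and after coaching.
lift = coaching_lift([70, 74, 72], [80, 82, 84])
```

Here the agent's average moves from 72 to 82, a 10-point lift. Tracking the same figure across agents and topics shows which coaching themes are actually working.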

Enthu.ai Tip: Our analytics dashboard automatically identifies coaching opportunities and tracks improvement trends, making it easy to measure training effectiveness and ROI.

G. Achieve efficient call center quality assurance with Enthu

Traditional QA methods can’t keep pace with modern contact center demands. You need technology that evaluates every conversation, not just 1-2% of interactions.

By taking advantage of smart technology, you can optimize your QA processes, making life easier for agents and boosting satisfaction scores and customer retention. Enthu.ai is a holistic contact center solution with call center quality monitoring software features built right in:

- Automatic call recording

- AI-powered call monitoring

- Intelligent call scoring

- Custom scorecards

- Detailed dashboards

- Speech analytics

- AI-generated call summaries

Ready to transform your QA program?

Request a FREE demo today!

FAQs

1. What is call center quality assurance?

Call center quality assurance is a systematic process that monitors and evaluates customer interactions to ensure they meet established standards. It involves reviewing calls, providing agent feedback, tracking metrics, and implementing improvements to enhance service quality and customer satisfaction.

2. What are the most important call center QA metrics?

The most critical QA metrics include First Call Resolution (FCR), Average Handle Time (AHT), Customer Satisfaction (CSAT), Net Promoter Score (NPS), Quality Score, and Compliance Score. These metrics measure both efficiency and effectiveness, providing a complete picture of contact center performance and customer experience quality.

3. How often should QA evaluations be conducted?

Best practices recommend conducting QA evaluations continuously rather than periodically. With AI-powered tools, you can analyze 100% of interactions automatically. Manual evaluations should occur at least weekly, with calibration sessions conducted biweekly or monthly to ensure scoring consistency.

4. What's the difference between quality assurance and quality control?

Quality assurance is proactive, focusing on monitoring interactions and providing coaching to prevent issues. Quality control is reactive, addressing problems as they occur to maintain consistency. Call center quality management encompasses both approaches plus performance analysis and continuous training for long-term excellence.

5. How can AI improve call center quality assurance?

AI enables 100% interaction analysis instead of small sample reviews, identifies patterns humans might miss, provides real-time coaching alerts, automates scoring to ensure consistency, and generates actionable insights from speech analytics. This dramatically increases QA coverage while reducing manual effort and improving accuracy.
