It’s 2025.

You wouldn’t manually scan every single email for spam.

Neither would you check every payment by hand.

So why are you still auditing calls like it was 1995?

Manual QA is stuck in the past. It’s slow, repetitive, and wildly inefficient.

But worry not… as I said, it’s 2025, and it’s time to rethink how you do Quality Assurance (QA). That’s why you’re here on this blog.

What I love the most about 2025?

The AI that makes QA efficient and actionable is already here and proven, and it won’t break the bank.

In this blog, I will walk you through automating Quality Assurance in your contact center using AI.

A. How to automate your call QA using AI?

Before we jump into QA automation using AI, let’s make sure you have a solid foundation.

The disclaimer

You don’t need a big tech setup, just a few hygiene factors in place to make AI your best friend.

- Make sure your calls are recorded and available for analysis, either as a bulk data dump or through an API.

- Next, you need a clear QA scorecard: your definition of a “good conversation.” Think script adherence, compliance, objection handling, closing, and so on.

- Also, have your call recordings linked to an agent. Without that, it becomes hard to coach the right person.

Once you’ve got these basics covered, automating your QA becomes surprisingly simple.

You bring the rules. The AI brings the scale.

Let’s break it down further.

1. Convert your voice recordings to text

Good quality transcription is the first step towards automating your QA program.

Converting audio into text lets the AI quickly scan conversations, pick out the relevant signals, and stack-rank results according to your requirements.

If you have a development team in place, you can use tools like ElevenLabs, Deepgram, AssemblyAI, OpenAI’s Whisper, and Speechmatics to bulk transcribe your calls.
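If your dev team goes this route, the batch job can be small. The sketch below is one way to structure it, not any vendor’s API: the `transcribe` callable is a hypothetical hook you wire to whichever speech-to-text tool you pick, and `CallTranscript`, `transcribe_all`, and `save_jsonl` are illustrative names. It also enforces the hygiene rule from earlier by refusing any recording that isn’t linked to an agent.

```python
import json
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Callable, Iterable


@dataclass
class CallTranscript:
    call_id: str
    agent_id: str  # the agent who handled the call, needed later for coaching
    text: str


def transcribe_all(
    recordings: Iterable[tuple[str, str, Path]],
    transcribe: Callable[[Path], str],
) -> list[CallTranscript]:
    """Transcribe (call_id, agent_id, audio_path) triples, keeping the agent link."""
    transcripts = []
    for call_id, agent_id, audio_path in recordings:
        if not agent_id:
            # A transcript with no agent attached can't be used to coach anyone.
            raise ValueError(f"call {call_id} has no agent_id")
        transcripts.append(CallTranscript(call_id, agent_id, transcribe(audio_path)))
    return transcripts


def save_jsonl(transcripts: Iterable[CallTranscript], out_path: Path) -> None:
    """Write one JSON object per line so later steps can stream the results."""
    with out_path.open("w", encoding="utf-8") as f:
        for t in transcripts:
            f.write(json.dumps(asdict(t)) + "\n")
```

Passing the transcriber in as a function keeps the vendor swappable. With the open-source `openai-whisper` package, for instance, you could load a model with `model = whisper.load_model("base")` and pass `lambda p: model.transcribe(str(p))["text"]`; a hosted service would plug in the same way through its own SDK.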

If you use Enthu.AI, you can skip the grunt work. Just integrate using our pre-built connectors and transcribe all your calls automatically through our APIs (we integrate with 100+ telephony dialers and business phones).

2. Define context for every QA parameter in your scorecard

Auto QA should reduce the manual hassle of evaluating calls.

For that, you need to clearly define all your evaluation criteria. These definitions become the AI’s input, and as the adage goes, “you get what you give.”

Make sure you are giving the right inputs to the AI.

As an example, assume your scorecard evaluates the agent on “Setting the call context” as well as “Handling Objections”.

Most contact centers already have these definitions in place. They are the lighthouses your QA teams use when scoring calls.

Put that in a spreadsheet for future use.

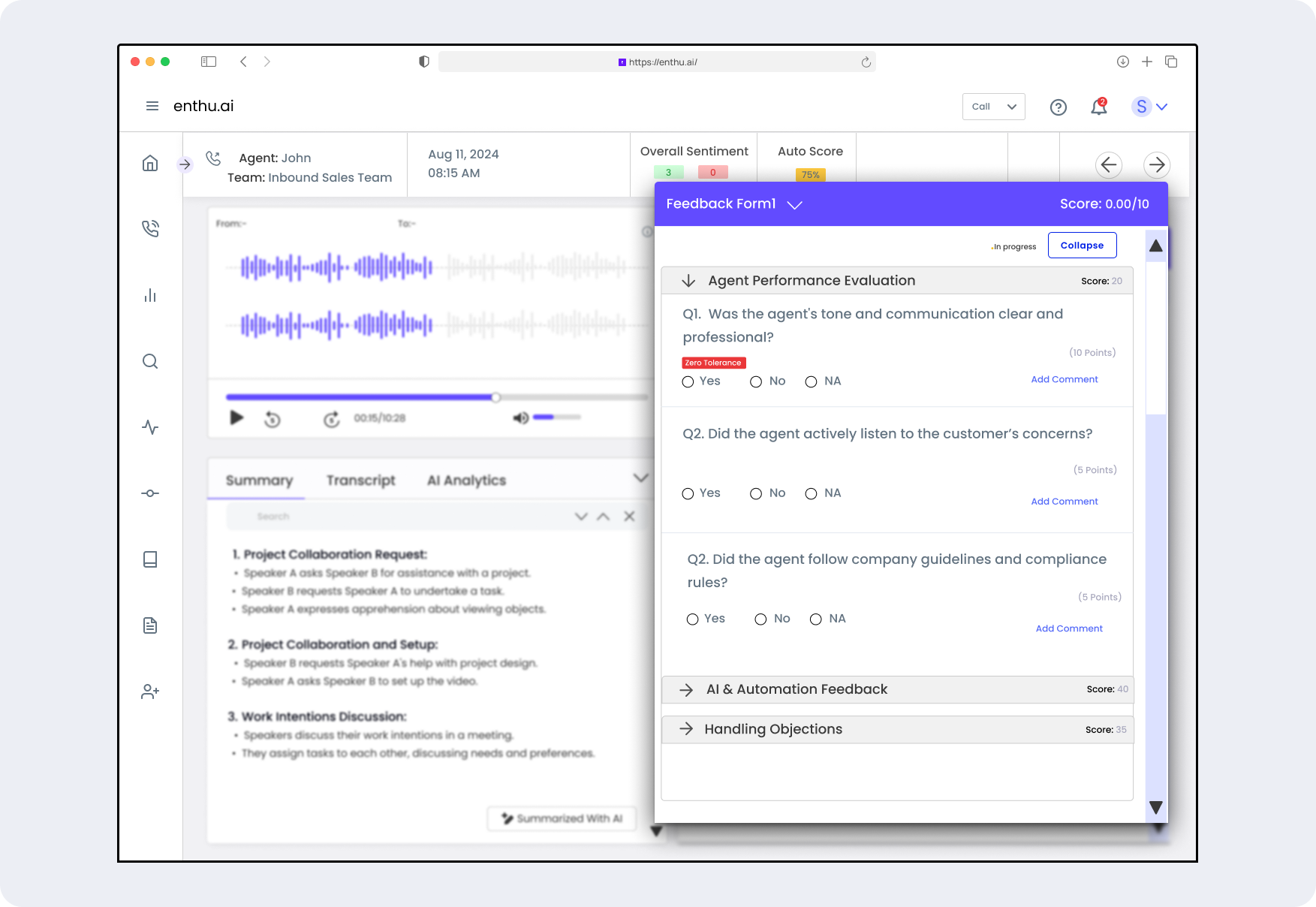
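Once that spreadsheet exists, exporting it as CSV makes it easy to feed into code. Here is a minimal sketch, assuming hypothetical column names `parameter`, `definition`, and `max_score` (use whatever headers your sheet has). The example rows are illustrative placeholders, not recommended definitions.

```python
import csv
import io
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str          # e.g. "Handling objections"
    definition: str    # what "good" looks like, in your QA team's own words
    max_score: int = 10


def load_scorecard(csv_text: str) -> list[Criterion]:
    """Parse a scorecard exported from a spreadsheet (hypothetical column names)."""
    criteria = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = (row["parameter"] or "").strip()
        definition = (row["definition"] or "").strip()
        if not name or not definition:
            # An empty definition gives the AI nothing to judge against.
            raise ValueError(f"incomplete scorecard row: {row}")
        criteria.append(Criterion(name, definition, int(row.get("max_score") or 10)))
    return criteria


# Hypothetical example rows; replace them with your own QA team's definitions.
SCORECARD_CSV = """parameter,definition,max_score
Setting the call context,"Agent states their name, company, and reason for the call early on.",10
Handling objections,"Agent acknowledges the concern, clarifies it, and answers with relevant facts.",10
"""
```

Failing loudly on a blank definition is a deliberate choice: a parameter without context is exactly the kind of weak input that produces weak AI scores.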

If you are using Enthu.AI, you can set up your own scorecards and fill in the context for each question right then and there.

3. Define sampling logic

Just because you can evaluate 100% of your calls doesn’t mean you have to.

Sure, tools like Enthu.AI make it possible to review every interaction, but smart QA teams focus on what matters most.

The goal isn’t to drown in data—it’s to get insights that actually help you improve.

That’s where sampling logic comes in.

You decide which calls to evaluate based on volume, team, call type, or performance tiers.

Maybe you want to review 10 calls per agent per week.

Maybe you want to focus only on calls longer than 5 minutes.

Maybe you want to zero in on first-time resolutions or negative sentiment calls.

This way, you’re working with a representative dataset.

Not too small to be random.

Not too large to be wasteful.

It also helps reduce bias in your QA process.
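How large is large enough? One common rule of thumb, borrowed from survey statistics and not something this post or any particular QA tool prescribes, is Cochran’s sample-size formula for estimating a proportion (for example, the share of calls that pass a compliance check). Here $z$ is the z-score for your confidence level, $p$ the expected pass rate, $e$ the margin of error, and $N$ the number of calls in the period:

$$
n_0 = \frac{z^2\,p\,(1-p)}{e^2}, \qquad n = \frac{n_0}{1 + \dfrac{n_0 - 1}{N}}
$$

As a worked example with assumed numbers: 95% confidence ($z = 1.96$), the most conservative $p = 0.5$, a margin of 5 percentage points ($e = 0.05$), and $N = 2000$ calls in a month:

$$
n_0 = \frac{1.96^2 \times 0.25}{0.05^2} = 384.16, \qquad n = \frac{384.16}{1 + 383.16/2000} \approx 322.4
$$

Rounding up, roughly 323 randomly sampled calls would estimate the center-wide pass rate to within about 5 points.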

Some of these filters (like duration and disposition) can be applied right away in your dialer software. For keyword- or sentiment-based filters, your dev team would need to run a search across the transcripts.
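For teams building this themselves, the rules above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any dialer’s feature: `CallMeta` and `sample_calls` are hypothetical names, `sentiment` is assumed to come from your own transcript analysis on a -1.0 to 1.0 scale, and the defaults mirror the examples above (calls longer than 5 minutes, up to 10 calls per agent per week).

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CallMeta:
    call_id: str
    agent_id: str
    iso_week: str       # e.g. "2025-W10"
    duration_sec: int
    sentiment: float    # hypothetical score from transcript analysis, -1.0 to 1.0


def sample_calls(calls, per_agent_week=10, min_duration_sec=300,
                 negative_only=False, seed=42):
    """Apply filters, then randomly draw up to `per_agent_week` calls per agent per week."""
    eligible = [
        c for c in calls
        if c.duration_sec > min_duration_sec
        and (not negative_only or c.sentiment < 0)
    ]
    buckets = defaultdict(list)
    for c in eligible:
        buckets[(c.agent_id, c.iso_week)].append(c)

    # A seeded generator makes the sample reproducible, so it can be audited later.
    rng = random.Random(seed)
    sample = []
    for key in sorted(buckets):  # sorted keys keep the draw order deterministic
        group = buckets[key]
        sample.extend(rng.sample(group, min(per_agent_week, len(group))))
    return sample
```

Drawing randomly within each filtered bucket, rather than letting reviewers pick calls, is what keeps cherry-picking out of the sample.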

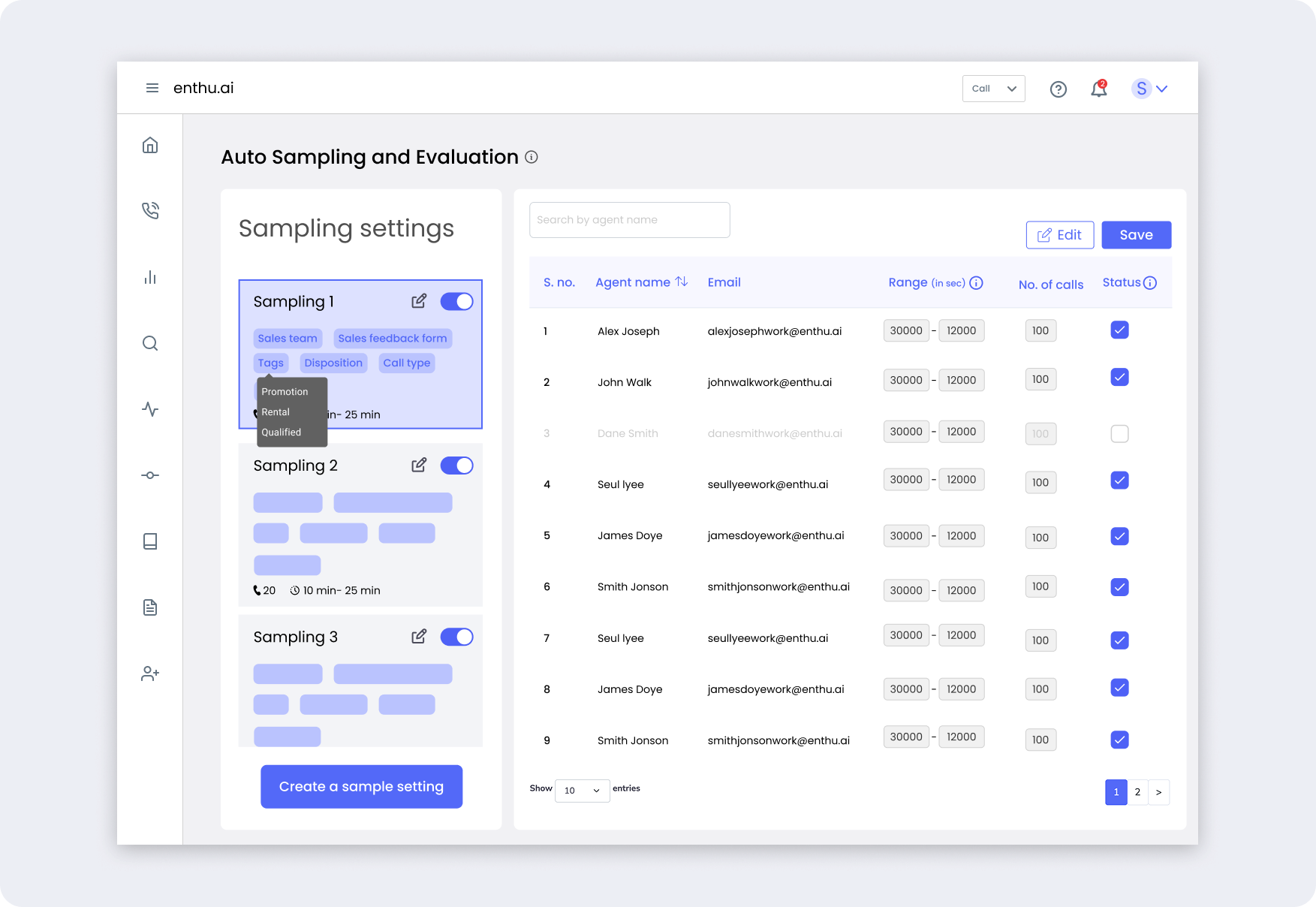

Or if you are using Enthu.AI, you can set up your sampling logics right within the tool, as shown below:-

4. Use an AI model to score sampled calls

By now, you’ve defined what makes a great call and determined which calls matter most.

Now comes the magic—actually scoring those calls at scale.

This is where AI-driven evaluation takes over.

Instead of human reviewers spending hours listening to calls, you can feed each transcript into a large language model (LLM) such as Llama or GPT-4 and prompt it to evaluate the call against your predefined QA parameters.

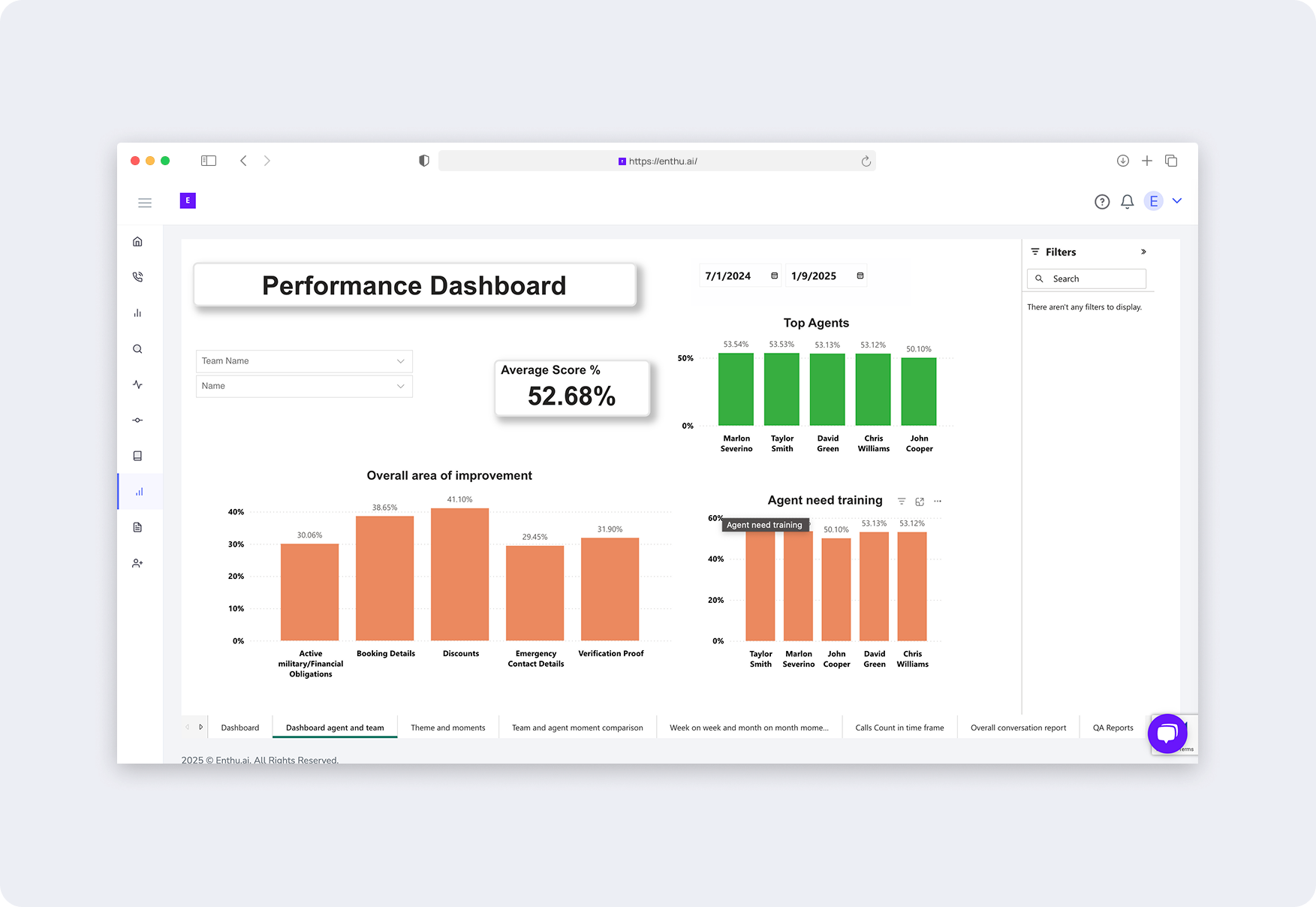

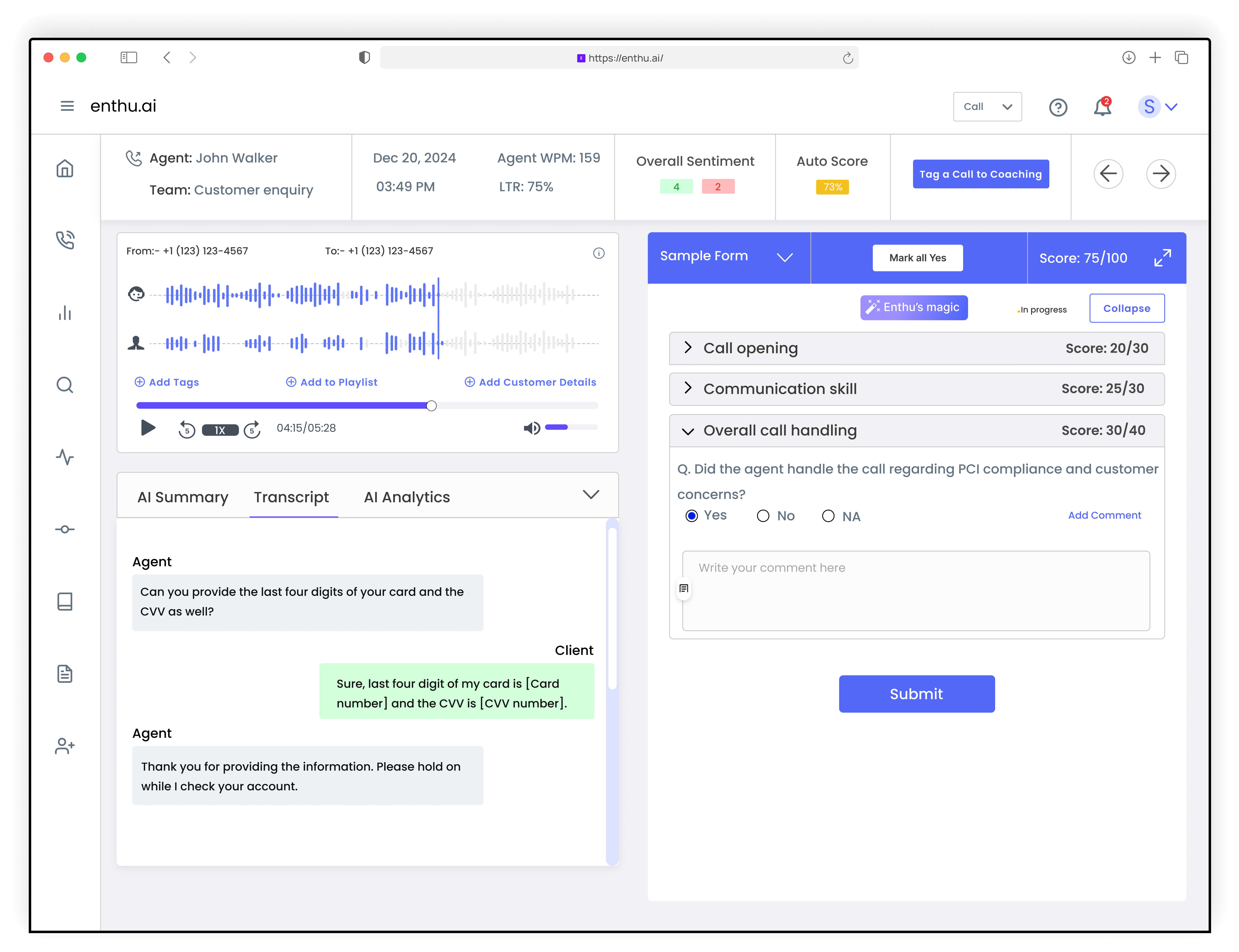
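To make this step concrete, here is a minimal Python sketch of the scoring loop. It assumes a hypothetical `call_llm` function that sends a prompt to whatever model you use and returns its text reply; the JSON reply format is our own assumption, chosen so the scores can be validated before they reach a report. The 0 to 10 scale matches the “score out of 10” used in this post.

```python
import json
from typing import Callable


def build_prompt(transcript: str, criteria: dict[str, str]) -> str:
    """Turn scorecard definitions plus one transcript into an evaluation prompt."""
    lines = [
        "Analyze the transcript below and evaluate the agent's performance.",
        "Score each criterion from 0 to 10 using its definition, with reasoning.",
        'Reply with JSON only, shaped like {"<criterion>": {"score": 7, "reasoning": "..."}}.',
        "",
        "Criteria:",
    ]
    lines += [f"- {name}: {definition}" for name, definition in criteria.items()]
    lines += ["", "Transcript:", transcript]
    return "\n".join(lines)


def score_call(transcript: str, criteria: dict[str, str],
               call_llm: Callable[[str], str]) -> dict:
    """Send the prompt through `call_llm` (hypothetical hook) and validate the reply."""
    # json.loads raises json.JSONDecodeError (a ValueError) on malformed output.
    result = json.loads(call_llm(build_prompt(transcript, criteria)))
    for name in criteria:
        entry = result.get(name)
        if entry is None:
            raise ValueError(f"model skipped criterion: {name}")
        score = int(entry["score"])  # models sometimes return "7" instead of 7
        if not 0 <= score <= 10:
            raise ValueError(f"score out of range for {name}: {score}")
        entry["score"] = score
    return result
```

The validation matters because LLMs occasionally skip a criterion or drift outside the scale; rejecting those replies (and retrying or routing the call to a human) keeps bad scores out of your agent reports.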

For example, a prompt might look like this:

“Analyze the transcript below and evaluate the agent’s performance on: 1) Setting call context, 2) Handling objections, and 3) Compliance adherence. Provide a score out of 10 for each, with reasoning.”

Here’s what an AI-generated evaluation might look like:

Agent Performance Evaluation

The AI doesn’t just score the call; it explains why, helping QA teams make more informed decisions.

Enthu.AI users know how easy it is to upload 100 calls at once and get agent evaluations in minutes.

5. Update reports and draw agent improvement insights

A QA score alone doesn’t tell the full story. It’s just a number without deeper insights or meaning.

Agents need to know why they lost points. They must understand what went wrong in each call. Clear feedback helps them improve their skills and performance. Without insights, they keep making the same mistakes.

The goal is growth, not just scoring every call.

For example, a simple spreadsheet might look like this:

| Agent Name | Calls Handled | QA Score (%) | Compliance (%) | Customer Satisfaction (CSAT) | Average Call Duration (mins) | First Call Resolution (%) | Sentiment Score | Key Improvement Area | Coaching Notes |
|---|---|---|---|---|---|---|---|---|---|
| John | 50 | 78 | 85 | 4.2/5 | 7.5 | 65 | Neutral (50) | Objection handling | Needs better response scripts |
| Sarah | 45 | 82 | 90 | 4.5/5 | 6.8 | 70 | Positive (72) | Script adherence | Reinforce script training |
| David | 60 | 75 | 80 | 4.0/5 | 8.2 | 60 | Neutral (48) | Reduce hold time | Improve system navigation |
| Emma | 55 | 88 | 95 | 4.7/5 | 6.2 | 75 | Positive (85) | Empathy and tone | Continue positive reinforcement |
| Michael | 52 | 80 | 87 | 4.3/5 | 7 | 68 | Neutral (55) | Clarify product details | Needs better product knowledge |

Download the Excel from this link: Agent Reports

The table below outlines key metrics for agent improvement, along with how each one is measured, its target benchmark, and actionable steps that help improve performance and customer satisfaction.

| Metric | Description | Measurement Method | Target Value | Action for Improvement |
|---|---|---|---|---|
| QA Score (%) | Overall quality score based on predefined evaluation criteria. | Average of QA evaluations on sampled calls. | 85%+ | Targeted coaching based on QA feedback. |
| Compliance (%) | Adherence to regulatory and company guidelines. | Percentage of calls meeting compliance standards. | 90%+ | Regular compliance training and monitoring. |
| Customer Satisfaction (CSAT) | Customer rating of the interaction experience. | Post-call survey (scale of 1-5). | 4.5/5+ | Improve soft skills, active listening, and problem resolution. |
| First Call Resolution (FCR %) | Percentage of issues resolved in the first call. | Number of one-touch resolutions / total calls. | 70%+ | Enhance product knowledge and troubleshooting efficiency. |
| Average Call Duration (mins) | Time taken per call from start to resolution. | Call logs and system tracking. | 6-7 minutes | Optimize scripts, avoid unnecessary hold time. |
| Hold Time (mins) | Total time the customer is on hold. | Call system tracking. | Under 1.5 minutes | Improve system navigation and knowledge base access. |
| Sentiment Score | AI-based analysis of customer tone and language. | NLP model scoring from -100 (negative) to +100 (positive). | 70 | Train agents on empathy, tone modulation, and de-escalation techniques. |
| Silence Percentage (%) | Portion of the call spent in silence. | Call transcription analysis. | Under 10% | Reduce dead air, guide agents on keeping customers engaged. |
| Escalation Rate (%) | Calls transferred to a senior agent or manager. | (Escalated calls / total calls) × 100 | Below 5% | Provide better decision-making autonomy and confidence training. |
| Upsell/Cross-sell Success (%) | Success in converting interactions into sales opportunities. | (Successful upsells/cross-sells / total opportunities) × 100 | 25%+ | Improve sales training and personalized customer recommendations. |
| Agent Interruptions (%) | Percentage of times an agent interrupts a customer. | AI-based conversation analysis. | Below 5% | Improve active listening skills and patience training. |
| Call Sentiment Distribution (%) | Breakdown of positive, neutral, and negative customer sentiment. | AI-based sentiment analysis. | 70% positive, 20% neutral, <10% negative | Address common negative trends, reinforce positive behaviors. |
| Post-Call Work Time (mins) | Time spent on after-call documentation and updates. | Call handling system tracking. | Under 2 minutes | Automate documentation, use templates for efficiency. |

Just a sec… we’re not done yet.

Power BI helps analyze agent performance with visual reports, and Enthu.AI pulls data from multiple scorecards into one dashboard.

- Managers track key metrics like call duration and resolution time.
- Filters let you focus on specific agents or call types.
- Charts highlight trends, strengths, and improvement areas.
- Data-driven insights improve coaching and training plans.
- Performance comparisons help set realistic benchmarks.
- Custom dashboards tailor reports to business needs.
- Automated reports reduce manual effort and errors.

B. Auto QA vs manual QA: Which one works best for call centers?

Imagine listening to hundreds of calls a day. Sounds frustrating, right? That’s what manual QA feels like!

Now, let’s break it down.

Manual QA is like trying to catch every typo in a 200-page essay by hand. Sure, it works, but it’s slow, and humans tend to miss stuff. Plus, let’s be real, you’d probably fall asleep after the first 50 calls.

Auto QA, on the other hand, is like having a robot that never gets tired and checks every single call. It works 24/7, never needs coffee, and spots patterns across calls. It’s faster and more consistent, which makes your life a lot easier.

So, while manual QA still has its place (like for those tricky calls), auto QA is the superhero that gets the job done quickly and efficiently.

Let’s check the key differences between manual QA and auto QA to see which one truly works best.

1. Call coverage: 100% vs. 5% of calls

Manual QA teams can’t review every call; it’s just too much work. Most centers only check 1-5% of calls, leaving tons of mistakes unnoticed.

How auto QA wins: more coverage = fewer blind spots = better quality.

2. Speed: instant vs. days of work

Manual QA takes hours per call: listening, scoring, and reporting. By the time a mistake is found, it’s too late to fix.

How auto QA wins: faster reviews mean quicker improvements and fewer compliance risks.

3. Accuracy: AI data vs. human bias

Humans get tired, miss details, and judge calls differently. One QA reviewer may score a call as “good,” while another might see it as “bad.”

How auto QA wins: consistent, data-driven evaluations improve training and performance.

4. Compliance: proactive vs. reactive

In manual QA, compliance mistakes often go unnoticed until it’s too late. One missing disclaimer or misleading statement can lead to heavy fines.

How auto QA wins: it catches compliance issues before they become costly mistakes.

5. Coaching: personalized vs. generic feedback

With manual QA, coaching is slow and generic. Managers don’t have time to analyze every agent’s strengths and weaknesses.

How auto QA wins: better coaching = faster agent improvement = happier customers.

6. Cost: high labor cost vs. scalable AI

Manual QA needs a big team to review even a small fraction of calls. That’s expensive, especially as call volume grows.

How auto QA wins: less manual work, lower costs, better results.

| Feature | Manual QA | Auto QA (with Enthu.AI) |
|---|---|---|
| Call coverage | 1-5% of calls checked | 100% of calls analyzed |
| Speed | Takes days/weeks | Instant scoring and reports |
| Accuracy | Prone to human error and bias | AI-driven, consistent and fair |
| Compliance | Issues detected late | Risks flagged immediately |
| Coaching | Generic feedback | Personalized training insights |
| Cost | High labor cost | Scales without extra staff |

Smart teams are automating QA. Join them with a free call evaluation!

C. Is auto QA going to eat my job?

The moment people hear “automation,” they panic: “Will this replace me?”

But let’s be real. Auto QA isn’t here to replace humans; it’s here to assist them.

Think about manual QA: hours spent listening, scoring, and reporting on a small fraction of calls. That’s not productive, not scalable, and not effective.

So what does auto QA do? It doesn’t take jobs; it makes them easier. Instead of listening to calls all day, QAs can spend their time actually improving agent performance.

D. Does auto QA analyze 100% of my calls?

Talking to call center managers, we found they struggle with missed compliance risks, lost coaching opportunities, and limited performance insights.

1. Auto QA solves this problem by analyzing every single conversation. No exceptions.

Auto QA checks 100% of your calls. It listens to every call automatically, so no call is left out. It’s like having a robot do all the work for you.

The system looks at everything in the call: tone, script, customer handling, and more. It checks whether agents follow the right steps, so you can track how well agents are doing on each call. If something’s wrong, it flags it right away. No need to listen to hours of calls.

This means no more guesswork: you’ll always know which agents need help, where the compliance risks are, and what’s driving customer satisfaction.

Why settle for random sampling when AI can monitor everything? See how 100% QA coverage changes the game.

2. Can automated QA minimize compliance risks?

Compliance mistakes can lead to heavy penalties, yet most call centers still rely on manual checks. With only a fraction of calls reviewed, critical issues often go unnoticed until it’s too late.

How does automated QA help? Automated QA monitors 100% of calls, instantly flagging compliance risks and checking that agents follow protocols. Instead of catching mistakes after the fact, it helps ensure every call meets legal and regulatory standards in real time.

Why take the risk? Stay compliant with ease.

Conclusion

Embracing automated quality assurance in your call center is no longer just an option; it’s a necessity. Quality assurance software like Enthu.AI can streamline processes, enhance agent training, and improve customer satisfaction. By automating QA, you not only boost accuracy and consistency but also create a more efficient work environment.

So, are you ready to transform your call center operations? Start implementing automated QA solutions today to meet rising customer expectations head-on.

For more insights on enhancing your call center’s performance, check out our related articles on AI in customer service and effective training strategies.

Take action now: upgrade your QA processes and see the difference it makes for your team and customers!

What challenges are you facing with your current QA processes? Let us know in the comments below!

FAQs

1. What does quality assurance do in a call center?

Quality assurance (QA) in a call center monitors and evaluates customer interactions to ensure agents meet performance standards. It helps identify strengths and weaknesses, enabling targeted training and improvement. Ultimately, QA enhances customer satisfaction and operational efficiency.

2. What is quality assurance automation?

Quality assurance automation is the use of technology and software to streamline the evaluation of customer interactions in call centers. It analyzes calls, chats, and emails, providing real-time feedback and data-driven insights without manual intervention. This automation increases accuracy, consistency, and scalability in monitoring agent performance.

3. What is auto QA for customer support?

Auto QA for customer support is an automated system that assesses customer interactions to evaluate the quality of service provided. It uses algorithms and speech analytics to score interactions, flag issues, and generate reports. This ensures timely feedback for agents and helps improve the overall customer experience.