A. What are industry-specific terms?

Industry-specific terms are words and phrases unique to your business domain. They’re the specialized vocabulary that defines how your team communicates with customers.

These terms fall into several categories:

- Proper nouns include brand names, product titles, company names, and location-specific identifiers.

- Domain-specific terminology covers technical terms in healthcare, finance, insurance, and other regulated industries.

- New or evolving language encompasses recently introduced policy names, product launches, acronyms, and company-specific jargon.

B. Why does transcription accuracy for industry-specific terms matter?

Here’s why accurate AI transcription of these terms matters so much:

- Compliance and risk management: In regulated industries like healthcare and finance, inaccurate transcripts create serious liability. Misidentified product names, missed disclosures, or garbled policy references become compliance nightmares. A retail bank with an incorrectly transcribed loan type faces significant regulatory risk.

- Business intelligence quality: Your call analytics are only as good as your data. When transcription errors corrupt your conversation data, you can’t identify patterns or perform accurate root-cause analysis. If your ASR system consistently mis-transcribes a location name, all those high-value interactions disappear into generic call data.

- AI system effectiveness: Transcription errors undermine your entire contact center AI ecosystem. An AI Agent might misinterpret customer intent because of a single transcription mistake. Your AI Copilot provides wrong guidance during calls. Performance evaluations become unreliable.

- Operational efficiency: When agents can’t trust transcriptions, they waste time correcting errors or re-listening to calls. QA teams struggle to find relevant conversations because search functions return inaccurate results.

C. How Enthu.AI achieves high accuracy on complex terms

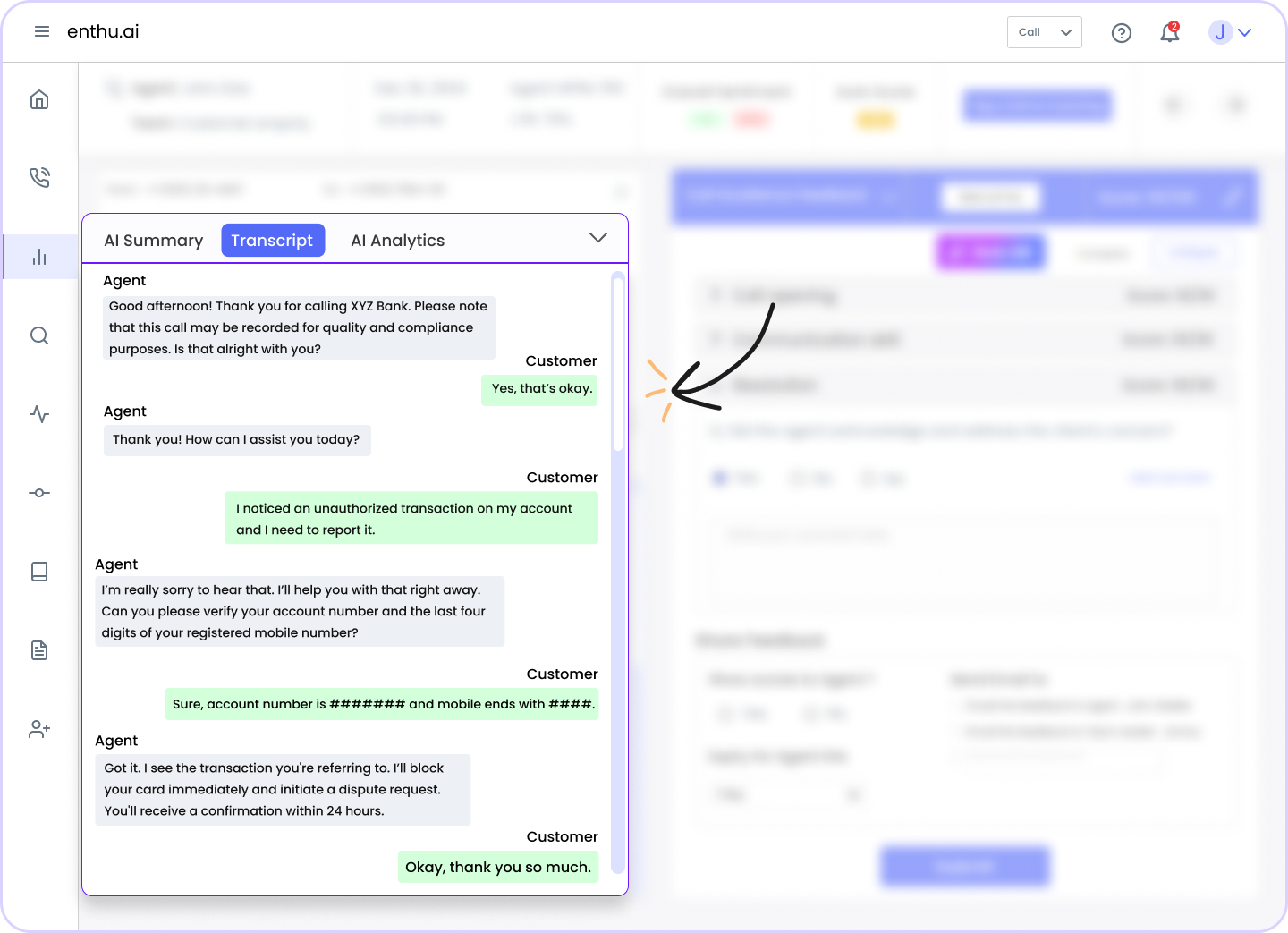

Enthu.AI is an AI-powered call transcription software that addresses transcription challenges through a comprehensive approach built specifically for contact center environments:

- Advanced speech recognition technology: The platform uses cutting-edge speech recognition techniques to achieve 95-97% accuracy on high-quality calls. The transcription algorithm maintains over 90% accuracy even in challenging conditions.

- Automatic speaker separation: Enthu.AI automatically distinguishes between speakers and labels each segment clearly. The system accurately identifies who said what, even in complex three-way discussions.

- Intelligent language detection: The platform identifies the primary language spoken in a call without manual input. It adapts instantly to different languages, eliminating setup friction for global teams.

- Precise timestamping: Every word in your transcripts includes accurate timestamps. You can jump directly to specific moments in recordings without scrubbing through entire calls.

- Comprehensive call coverage: Unlike traditional QA sampling that covers less than 1% of interactions, Enthu.AI captures and transcribes 100% of voice calls with automated QA. This complete coverage ensures no conversation is missed.

- Noise handling capabilities: The system maintains accuracy across diverse call conditions, including background noise and varied audio quality. This resilience means you get reliable transcripts regardless of the recording environment.

- Context-aware processing: Enthu.AI doesn’t just transcribe words; it understands context. The platform analyzes conversations to extract meaningful insights, identify key moments, and flag coaching opportunities.

- Seamless integration workflow: The platform ingests recordings via APIs, converts speech to text, separates speakers, and automatically handles sensitive data. You get clean, actionable transcripts without manual intervention.

- Continuous improvement approach: Enthu.AI encourages prospects to provide call samples and test transcription accuracy during initial stages. This testing-first approach ensures the system meets your specific requirements before full deployment.
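
To illustrate why word-level timestamps matter, here is a minimal sketch of jumping straight to every mention of a phrase in a call. The `Word` structure and the `find_mentions` helper are hypothetical names used for illustration only; they are not Enthu.AI's API or export format, and your platform's transcript schema will likely differ.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One transcribed word with its position in the recording (assumed schema)."""
    text: str
    start: float  # seconds from the start of the recording
    end: float


def find_mentions(words: list[Word], phrase: str) -> list[float]:
    """Return the start time of every occurrence of `phrase` in the transcript."""
    target = phrase.lower().split()
    if not target:
        return []
    # Normalize each word: lowercase and drop trailing punctuation.
    tokens = [w.text.lower().strip(".,!?") for w in words]
    hits = []
    for i in range(len(tokens) - len(target) + 1):
        if tokens[i:i + len(target)] == target:
            hits.append(words[i].start)
    return hits


# Illustrative data, not taken from a real call.
call = [
    Word("I", 0.0, 0.2),
    Word("need", 0.2, 0.5),
    Word("gap", 0.5, 0.8),
    Word("insurance.", 0.8, 1.4),
]
mentions = find_mentions(call, "gap insurance")
```

Each value in `mentions` is a playback offset in seconds, so a reviewer can seek to that moment instead of scrubbing through the whole recording. Note that the lookup only works if the term was transcribed correctly in the first place.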

D. The real cost of missed industry terms

When your transcription system fails to capture industry-specific vocabulary accurately, the consequences ripple throughout your organization. Let’s take a closer look at some of these risks:

1. Blind spots in customer insights

You can’t analyze what you can’t accurately transcribe.

A hospital system trying to understand call drivers for a specific medical condition finds that mis-transcribed clinical terms scatter valuable data across unrelated categories.

Marketing teams miss trending product mentions because brand names are consistently garbled.

2. Compromised compliance programs

Regulated industries face the highest stakes. Financial services firms must document specific disclosures during customer calls.

Insurance companies need accurate records of policy details and coverage discussions. Healthcare providers must maintain precise clinical documentation.

When transcription errors corrupt these records, you’re creating audit vulnerabilities.

3. Frustrated quality assurance teams

Traditional QA sampling already covers less than 1% of interactions.

When transcriptions are unreliable, even that small sample becomes questionable. Evaluators spend excessive time verifying transcripts instead of coaching agents.

4. Weakened training programs

New agent onboarding relies heavily on call reviews and examples.

Inaccurate transcripts make it harder to find specific coaching moments. Training materials based on flawed transcriptions perpetuate errors.

5. Lost revenue opportunities

Sales teams need accurate conversation intelligence to identify upselling opportunities and objection patterns.

When product names and pricing discussions are mis-transcribed, these insights vanish.

E. Common transcription challenges contact centers face

Your contact center environment creates unique challenges for speech recognition systems:

- Background noise and acoustic chaos: Call centers are noisy environments. Agents work in open spaces with overlapping conversations, keyboard clicks, hold music, and environmental sounds. Each 10dB increase in background noise reduces transcription accuracy by 8-12%.

- Diverse accents and speaking patterns: Modern contact centers serve global customer bases with support teams distributed across regions. Accent variations dramatically impact accuracy. Research shows accented speech exhibits word error rates of 30-50% compared to just 2-8% for native speakers. Even within single countries, regional dialects create recognition challenges.

- Technical and domain vocabulary: Vocabulary in many business conversations consists of industry-specific jargon. Healthcare conversations include clinical terminology and pharmaceutical names. Financial services calls reference complex products and regulatory terms. Insurance discussions involve specific policy types and coverage details.

- Out-of-vocabulary (OOV) terms: These are words your ASR model hasn’t encountered in training data. Brand names, new products, proprietary terms, and company-specific acronyms all qualify as OOV terms. Standard speech recognition systems can’t properly process these words because they’re not in the model’s vocabulary.

- Overlapping speech and interruptions: Real conversations rarely follow turn-taking patterns. Customers interrupt agents, multiple people speak simultaneously, and emotions run high. These conditions challenge even advanced speech recognition systems.

- Variable call quality: Not every call comes through crystal-clear. VoIP compression, poor phone connections, mobile audio quality, and technical distortions all impact transcription. Heavily compressed audio loses critical frequency information needed for accurate recognition.
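
One practical way to get ahead of out-of-vocabulary problems is to check your business glossary against a list of words you expect a general-purpose recognizer to know. The sketch below assumes you have such a word list; `find_oov_terms`, the glossary entries, and the known-word set are all hypothetical and only illustrate the idea. Many modern ASR systems work with subword units rather than a fixed word list, so treat the result as a shortlist of terms to test, not a definitive verdict.

```python
import re


def find_oov_terms(glossary: list[str], known_vocabulary: set[str]) -> list[str]:
    """Return glossary terms that contain at least one word missing from the known vocabulary."""
    known = {word.lower() for word in known_vocabulary}
    oov_terms = []
    for term in glossary:
        # Split a multi-word term into lowercase words before checking each one.
        words = re.findall(r"[a-z0-9']+", term.lower())
        if any(word not in known for word in words):
            oov_terms.append(term)
    return oov_terms


# Illustrative inputs: "FlexiPay" is a made-up product name.
glossary = ["savings account", "Ozempic", "FlexiPay plan"]
known_words = {"savings", "account", "plan", "loan"}
candidates = find_oov_terms(glossary, known_words)
```

The terms left in `candidates` are the ones worth including in your test recordings and in any custom vocabulary list you provide to a vendor.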

F. Measuring success: accuracy metrics that matter

Understanding transcription quality requires clear metrics. Here are the most important ones:

- Word Error Rate (WER): This standard metric measures the percentage of incorrectly transcribed words. It’s calculated by adding substitutions (wrong words), deletions (missed words), and insertions (extra words), then dividing by the total number of words spoken. A WER below 10% indicates excellent performance. Rates between 10-15% are acceptable for live call center environments.

- Accuracy percentage: This is the complement of WER (100% minus WER), so a 5% WER corresponds to 95% accuracy. Current leading platforms achieve 95-98% accuracy in optimal conditions.

- Domain-specific term recognition: Beyond overall accuracy, measure how well the system captures your specific vocabulary. Test with actual call samples containing industry jargon, brand names, and technical terms.

- Speaker diarization accuracy: Evaluate how precisely the system identifies and labels different speakers. This matters for coaching, compliance, and conversation analysis.

- Processing speed: Measure how quickly transcripts become available. Real-time or near-real-time processing enables immediate insights and faster workflows.

- Consistency across conditions: Test accuracy across various scenarios: different accents, background noise levels, call quality variations, and speaker overlap.

- Business outcome metrics: Track operational improvements: reduced QA review time, faster agent onboarding, improved compliance scores, and increased customer satisfaction.
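
The first three metrics above can be computed on your own sample calls. The following is a minimal sketch, assuming you have a human-verified reference transcript and the system's output for the same call. The function names and the sample sentences are illustrative, and the word matching is deliberately simple (lowercased, whitespace-split, no punctuation handling).

```python
import re


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion (reference word missed)
                curr[j - 1] + 1,     # insertion (extra word in the output)
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[-1] / len(ref)


def count_term(term: str, text: str) -> int:
    """Count whole-word occurrences of a term, ignoring case."""
    pattern = r"\b" + re.escape(term.lower()) + r"\b"
    return len(re.findall(pattern, text.lower()))


def term_recall(reference: str, hypothesis: str, terms: list[str]) -> float:
    """Share of domain-term mentions in the reference that survive in the hypothesis."""
    expected = found = 0
    for term in terms:
        in_reference = count_term(term, reference)
        expected += in_reference
        found += min(in_reference, count_term(term, hypothesis))
    if expected == 0:
        raise ValueError("none of the terms appear in the reference transcript")
    return found / expected


# Illustrative example: a single wrong word destroys the product name.
reference = "the customer asked about the jumbo ARM loan"
hypothesis = "the customer asked about the jumbo ARM alone"
wer = word_error_rate(reference, hypothesis)
accuracy = 1 - wer
recall = term_recall(reference, hypothesis, ["jumbo ARM loan"])
```

In this example one word out of eight is wrong, so WER is 12.5% and accuracy is 87.5%, yet recall on the one domain term is 0%. That gap is why domain-specific term recognition deserves its own measurement alongside overall accuracy.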

G. Best practices for optimizing transcription quality

You can maximize transcription accuracy through these strategic implementation approaches:

- Test with your actual calls: Don’t rely solely on vendor accuracy claims. Provide sample recordings from your environment during evaluation. Test across different scenarios: accents, call quality, and terminology.

- Implement noise reduction strategies: Use high-quality headsets for agents. Record in controlled environments when possible. Apply noise-canceling technology to improve audio quality.

- Leverage custom vocabulary features: Many transcription platforms allow adding domain-specific terms. Upload lists of product names, brand terms, technical jargon, and commonly misheard words. These custom dictionaries improve recognition of specialized vocabulary.

- Optimize recording setup: Capture each speaker on separate audio channels when possible. This improves speaker diarization and overall clarity. Ensure proper microphone positioning and audio settings.

- Train your team: Encourage clear speech and appropriate pacing. Minimize side conversations during calls. Use consistent terminology for products and services.

- Monitor and refine continuously: Review transcription accuracy regularly. Identify recurring errors and adjust custom vocabularies. Track accuracy trends over time.

- Integrate with existing systems: Connect transcription tools with your CRM, QA platforms, and analytics systems. Seamless integration maximizes the value of accurate transcripts.

- Focus on speaker separation: Clear speaker identification enhances transcript usability. Ensure your platform accurately labels agent vs. customer speech.

- Plan for diverse accents: If your team serves global customers, test accuracy across accent variations. Consider accent neutralization tools for improved communication.

- Establish quality thresholds: Define acceptable accuracy levels for your use cases. Legal and medical applications require higher standards than internal notes.
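
If your platform does not offer a custom vocabulary feature, or you want to see what one buys you, a rough approximation is to correct near-miss words after transcription. The sketch below uses fuzzy matching from Python's standard `difflib` module. It is an assumption-laden illustration, not how Enthu.AI or any specific vendor implements custom vocabularies: `apply_custom_vocabulary` is a hypothetical helper, it only handles single-word terms, and it ignores punctuation.

```python
import difflib


def apply_custom_vocabulary(
    transcript: str, vocabulary: list[str], cutoff: float = 0.8
) -> str:
    """Replace words that closely resemble a custom-vocabulary term with that term."""
    # Map lowercase forms back to the preferred spelling and capitalization.
    lookup = {term.lower(): term for term in vocabulary}
    corrected = []
    for word in transcript.split():
        match = difflib.get_close_matches(
            word.lower(), list(lookup), n=1, cutoff=cutoff
        )
        corrected.append(lookup[match[0]] if match else word)
    return " ".join(corrected)


# Illustrative input: a commonly misheard drug name.
raw = "patient takes metformen daily"
fixed = apply_custom_vocabulary(raw, ["Metformin"])
```

The `cutoff` value controls the trade-off: set it too low and ordinary words get rewritten into product names; set it too high and genuine misrecognitions slip through. Review a sample of the corrections before trusting them in compliance workflows.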

H. How to choose the best transcription software?

Choosing the right transcription platform requires evaluating several factors.

- Accuracy for your specific needs: Test with your actual call recordings. Verify performance on your industry terminology, accent variations, and typical audio conditions.

- Speaker diarization quality: Ensure accurate identification and labeling of different speakers. This capability is essential for coaching and compliance.

- Search and filtering capabilities: Confirm you can quickly find relevant conversations. Look for keyword search, phrase detection, and timestamp navigation.

- Integration options: Verify compatibility with your existing tech stack. Check for API access, CRM connectors, and QA platform integration.

- Implementation timeline: Enthu.AI offers faster implementation than traditional speech analytics partners. Some organizations report getting value in days rather than months.

- Scalability: Ensure the platform handles your call volume without performance degradation.

- Support and training: Evaluate onboarding processes and ongoing support. Look for responsive technical teams and comprehensive documentation.

- Compliance features: Confirm the platform meets your industry regulations. Look for data encryption, access controls, and audit trails.

- Pricing structure: Understand the cost model and verify it aligns with your budget. Some platforms offer pilot programs to test before full commitment.

- User feedback: Review customer testimonials and case studies. Look for organizations in similar industries with comparable use cases.

Transform your contact center with accurate transcription

Your conversations contain untapped insights. Accurate transcription unlocks this potential.

Enthu.AI delivers the precision your contact center needs.

The result?

Better coaching, stronger compliance, deeper insights, and improved customer experiences.

Organizations using Enthu.AI report significant improvements.

Yopa, a leading UK estate agency, improved agent performance by 30%. MS Auto used Enthu.AI to QA 100% of calls and lift conversions by 5%. And HomePride Bath improved its appointment-set rate by 5%.

“Before Enthu.AI, pulling recordings out from the dialer and selecting the right calls was such a painful task. Worse, my visibility was always limited to the handful of calls my team managed to review.” – Alex McConville, Head of Sales at Yopa.

Ready to see the difference accurate transcription makes?

Enthu.AI offers a 14-day free pilot with no credit card required and quick setup.

Request a free demo today!

FAQs

1. How long does it take to achieve 95%+ accuracy with Enthu.AI?

Implementation varies based on your needs. Generic out-of-the-box deployment takes 5-7 days and achieves 90%+ accuracy immediately. Custom fine-tuning for your specific industry terminology requires 2-4 weeks but delivers 95-97% accuracy. Most customers see measurable improvement within 30 days as the system adapts to their environment and speech patterns.

2. Do we need to provide training data, or does Enthu.AI work immediately?

Enthu.AI delivers 90%+ accuracy out-of-the-box for general English calls without any setup. However, achieving 95-97% domain-specific accuracy requires custom fine-tuning on 500-1,000 representative calls from your contact center. The test-and-optimize approach is recommended for mission-critical environments.

3. Is Enthu.AI compliant with HIPAA, FINRA, and GDPR regulations?

Yes. Enthu.AI holds certifications for HIPAA (healthcare), FINRA (financial services), GDPR (data privacy), and SOC 2 Type II compliance. All transcriptions are encrypted at rest and in transit. Sensitive data such as PII, payment information, and SSNs is automatically detected and redacted from transcripts and audit logs.

4. Can Enthu.AI handle background noise, accents, and poor audio quality?

Yes. Enthu.AI’s acoustic enhancement includes real-time noise suppression, gain normalization, and speaker adaptation for diverse accents. Heavy background noise or poor audio quality may reduce accuracy by 2-5%, but the system includes acoustic pre-processing to mitigate this.

5. Can Enthu.AI integrate with our existing call recording and analytics systems?

Yes. Enthu.AI provides APIs and pre-built connectors for all major platforms: Genesys, NICE, Avaya, Amazon Connect, Twilio, and 50+ others. Integration typically takes 2-5 days. Real-time transcription streams directly to your existing QA, analytics, and coaching platforms without workflow disruption.
