You handle dozens of calls every day. Notes pile up. Important details slip through. That costs time and revenue.

Speech-to-text flips the script.

AI audio-to-text transcription captures every word across tens of thousands of minutes, so you can search, coach, and report in seconds, not days.

You spot trends, risks, and opportunities instantly.

What if you could surface every objection, promise, and compliance moment? What if you could coach the right call every day?

That is the power of AI transcription tied to quality and coaching workflows.

In this guide, you will learn about the technology, how it works, and where it is helpful.

Let’s get you from “we sample a few calls” to “we learn from every call.”

Let’s jump in!

A. What is AI transcription?

AI transcription is the automated conversion of speech into text using machine learning. It listens to audio, recognizes words and speakers, and outputs readable text. Think of it as a super-fast, always-on note-taker for calls.

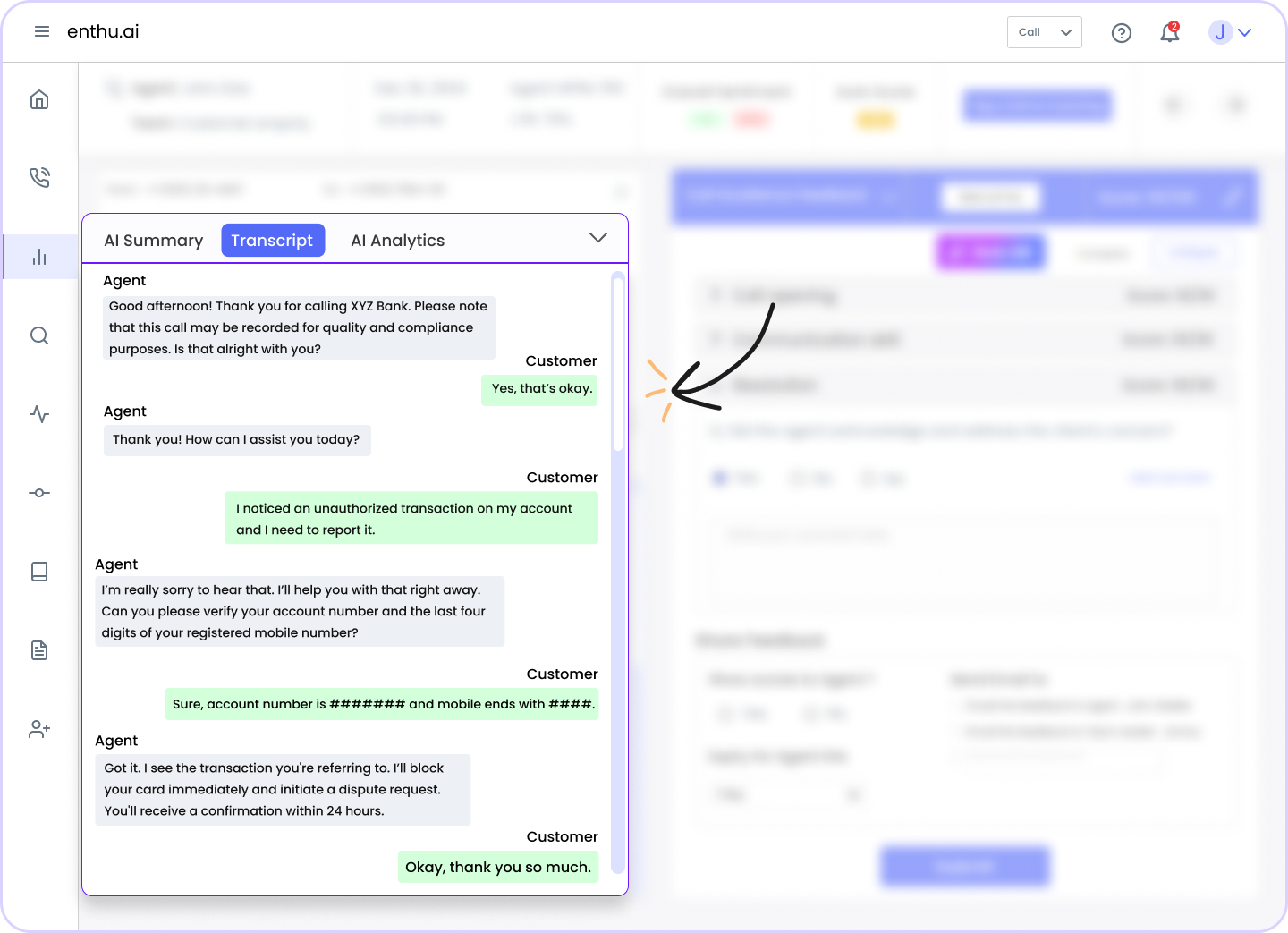

For example, a 28-minute billing support call is converted into a searchable transcript with timestamps, speaker labels, and redacted card numbers. You can then jump to “refund” mentions, flag missed disclosures, and coach the agent on empathy.

B. How does AI transcription work?

AI transcription tools rely on Automatic Speech Recognition (ASR) technology to convert spoken voice into text. Over time, ASR evolved from rule-based pattern matching to deep learning models that understand context, accents, and noise.

Below is how the transcription pipeline works:

1. Audio preprocessing

This is the cleanup step. The tool receives raw audio (a call recording, meeting recording, etc.). It then:

- Removes background noise, hums, and static (noise reduction)

- Normalizes volume so spoken parts aren’t too quiet or too loud

- Splits long audio into smaller chunks or segments

- Eliminates long silences or meaningless gaps

This stage ensures the audio the system “hears” is as clean as possible, which boosts accuracy in later steps.
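
The cleanup steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular product does it: the function names, the 0.02 silence threshold, the 0.5-second minimum gap, and the 30-second chunk size are all assumptions, and real noise reduction (omitted here) needs proper signal processing.

```python
from typing import List


def normalize_peak(samples: List[float], target_peak: float = 0.9) -> List[float]:
    """Scale the audio so its loudest sample reaches target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def drop_silence(samples: List[float], sample_rate: int,
                 threshold: float = 0.02, min_silence_s: float = 0.5) -> List[float]:
    """Remove quiet runs that last at least min_silence_s; keep short pauses."""
    min_run = int(min_silence_s * sample_rate)
    kept: List[float] = []
    quiet_run: List[float] = []
    for s in samples:
        if abs(s) < threshold:
            quiet_run.append(s)
            continue
        if len(quiet_run) < min_run:
            kept.extend(quiet_run)  # short pause, part of natural speech
        quiet_run = []
        kept.append(s)
    if len(quiet_run) < min_run:
        kept.extend(quiet_run)
    return kept


def split_chunks(samples: List[float], sample_rate: int,
                 chunk_s: float = 30.0) -> List[List[float]]:
    """Cut the audio into fixed-length chunks (the last one may be shorter)."""
    size = int(chunk_s * sample_rate)
    return [samples[i:i + size] for i in range(0, len(samples), size)]


if __name__ == "__main__":
    rate = 10  # tiny sample rate so the demo stays readable
    raw = [0.5, 0.25] + [0.0] * 10 + [0.5]
    cleaned = drop_silence(normalize_peak(raw), rate)
    print(len(raw), "samples in,", len(cleaned), "samples out")
```

Normalizing before trimming keeps the silence threshold meaningful across quiet and loud recordings.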

2. Feature extraction

Once the audio is cleaned, the system converts it from a raw waveform into features that a model can analyze and recognize.

- It slices the audio into short frames (e.g., 20-30 ms windows)

- For each frame, it computes features such as frequency spectra, mel-spectrograms, and MFCCs (Mel Frequency Cepstral Coefficients), among others.

- These features capture patterns in pitch, tone, energy, and how sound changes over time.

These features are the “language” that the model uses to match audio with speech.
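
To make the framing idea concrete, here is a small sketch that slices audio into overlapping 25 ms frames and computes two very simple per-frame features. It is deliberately far simpler than mel-spectrograms or MFCCs; the 10 ms hop between frames and the choice of log energy and zero-crossing rate are assumptions made for illustration.

```python
import math
from typing import List


def frame_signal(samples: List[float], sample_rate: int,
                 frame_ms: float = 25.0, hop_ms: float = 10.0) -> List[List[float]]:
    """Slice audio into overlapping frames of frame_ms, stepping by hop_ms."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop_len)]


def log_energy(frame: List[float], eps: float = 1e-10) -> float:
    """Loudness of a frame on a log scale; eps avoids log(0) on silence."""
    return math.log(sum(s * s for s in frame) + eps)


def zero_crossing_rate(frame: List[float]) -> float:
    """Fraction of adjacent sample pairs that change sign (a rough pitch/noise cue)."""
    if len(frame) < 2:
        return 0.0
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)


if __name__ == "__main__":
    rate = 1000
    # A 50 Hz test tone, 100 ms long.
    tone = [math.sin(2 * math.pi * 50 * n / rate) for n in range(100)]
    for frame in frame_signal(tone, rate):
        print(round(log_energy(frame), 3), round(zero_crossing_rate(frame), 3))
```

Each frame becomes a small vector of numbers, and the sequence of those vectors is what the recognition model actually consumes.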

3. Pattern recognition

Now comes the core mapping: matching features to probable speech units.

- The model (usually a neural network or deep learning model) learns the relationship between acoustic features and phonemes (units of sound), subwords, or characters.

- It scores many possible matches: “this chunk sounds like /k/ or /t/ or /ch/”

- The model uses its learned weights (trained on a large amount of data) to select the most probable mapping.

In older systems, this step was handled by acoustic models and hidden Markov models (HMMs). In modern systems, deep neural networks (CNNs, RNNs, transformers) often do this end-to-end.
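
As a rough illustration of "score many matches, keep the most probable," the sketch below turns per-frame scores into probabilities and then applies greedy CTC-style decoding, one common approach in end-to-end systems. The label set, the hand-written scores, and the `_` blank symbol are all made up for the example; a real model would produce the scores.

```python
import math
from typing import List

BLANK = "_"  # assumed symbol for "no sound unit in this frame"


def softmax(scores: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def greedy_ctc_decode(frame_scores: List[List[float]], labels: List[str]) -> List[str]:
    """Pick the most probable label per frame, merge repeats, drop blanks."""
    best_per_frame = []
    for scores in frame_scores:
        probs = softmax(scores)
        best_per_frame.append(labels[probs.index(max(probs))])

    decoded: List[str] = []
    previous = None
    for label in best_per_frame:
        if label != previous and label != BLANK:
            decoded.append(label)
        previous = label
    return decoded


if __name__ == "__main__":
    labels = [BLANK, "k", "ae", "t"]
    # One row of scores per audio frame, one column per label (toy numbers).
    scores = [
        [0.1, 2.0, 0.3, 0.2],  # sounds like /k/
        [0.2, 1.8, 0.1, 0.4],  # still /k/
        [2.5, 0.1, 0.2, 0.1],  # blank
        [0.1, 0.2, 2.2, 0.3],  # /ae/
        [0.3, 0.1, 0.2, 1.9],  # /t/
    ]
    print(greedy_ctc_decode(scores, labels))
```

Production decoders usually keep several candidates alive (beam search) instead of committing to the single best label per frame.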

4. Language modeling

After you have plausible word fragments from pattern recognition, the system needs to build real sentences.

- A language model considers grammar, context, and word frequency to choose which word sequence makes the most sense.

- For example, if the audio could be “there” or “their,” the language model looks at surrounding words to decide.

- The language model may use n-grams, neural language models, or transformer architectures.

This step helps reduce errors, such as misheard words or nonsensical phrases.
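
The “there” versus “their” choice can be shown with a tiny bigram model. This is a toy sketch: the four training sentences are invented, and add-one smoothing is just one simple way to avoid zero probabilities. Modern systems use neural language models trained on far more text.

```python
import math
from collections import Counter
from typing import List, Tuple

# Hypothetical training text; a real model sees millions of sentences.
CORPUS = [
    "they left their keys over there",
    "their account is past due",
    "the agent is over there",
    "please update their account",
]


def train_bigrams(sentences: List[str]) -> Tuple[Counter, Counter, int]:
    """Count how often each word follows each other word."""
    context_counts: Counter = Counter()
    bigram_counts: Counter = Counter()
    vocab = set()
    for sentence in sentences:
        words = ["<s>"] + sentence.split()
        vocab.update(words[1:])
        context_counts.update(words[:-1])
        bigram_counts.update(zip(words, words[1:]))
    return context_counts, bigram_counts, len(vocab)


def log_score(sentence: str, context_counts: Counter,
              bigram_counts: Counter, vocab_size: int) -> float:
    """Add-one smoothed log probability of a word sequence."""
    words = ["<s>"] + sentence.split()
    total = 0.0
    for prev, cur in zip(words, words[1:]):
        prob = (bigram_counts[(prev, cur)] + 1) / (context_counts[prev] + vocab_size)
        total += math.log(prob)
    return total


def pick_best(candidates: List[str]) -> str:
    """Return the candidate transcript the bigram model finds most likely."""
    contexts, bigrams, vocab_size = train_bigrams(CORPUS)
    return max(candidates, key=lambda c: log_score(c, contexts, bigrams, vocab_size))


if __name__ == "__main__":
    print(pick_best(["update there account", "update their account"]))
```

The acoustic side cannot tell the two words apart, so the surrounding words ("update ... account") carry the decision.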

5. Text output

Here, the system produces the raw transcript:

- It strings together words (or subwords) with timestamps

- It often assigns confidence scores (how sure it is about each word)

- It may insert punctuation, capitalization, and line breaks, though often with help from the next stage.

This is your basic, machine-generated transcript.
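
One way to picture the raw output is as a list of words, each carrying its timestamps and a confidence score. The `Word` class, its field names, and the 0.6 review threshold below are hypothetical; actual tools each define their own output schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Word:
    text: str
    start: float       # seconds from the beginning of the call
    end: float
    confidence: float  # 0.0 (guess) to 1.0 (certain)


def format_timestamp(seconds: float) -> str:
    """Render seconds as MM:SS."""
    minutes, secs = divmod(int(seconds), 60)
    return f"{minutes:02d}:{secs:02d}"


def render(words: List[Word], low_confidence: float = 0.6) -> str:
    """Join words into one line, marking uncertain words for review."""
    if not words:
        return ""
    tokens = [f"[{w.text}?]" if w.confidence < low_confidence else w.text
              for w in words]
    return f"[{format_timestamp(words[0].start)}] " + " ".join(tokens)


if __name__ == "__main__":
    segment = [
        Word("refund", 75.2, 75.6, 0.95),
        Word("issued", 75.7, 76.1, 0.40),
    ]
    print(render(segment))  # prints: [01:15] refund [issued?]
```

Keeping confidence per word lets a reviewer jump straight to the parts of a transcript most likely to be wrong.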

6. Post-processing

To polish the raw output, the system does finishing work:

- Spell check or correction of common errors

- Grammar fixes or minor adjustments

- Redaction or masking of sensitive data (e.g., credit cards, PII)

- Formatting (paragraphs, speaker labels, timestamps)

- (Optionally) human review or hybrid human + AI passes for critical accuracy

This gives you a clean, practical, and readable transcript.
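
Redaction is the post-processing step that is easiest to show in code. The sketch below masks card-like digit runs, using the Luhn checksum to avoid masking order numbers and similar digit strings. The regular expression and the `[REDACTED CARD]` placeholder are assumptions; production redaction also has to cope with digits spoken as words and with other kinds of PII.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def redact_cards(text: str) -> str:
    """Replace digit runs that look like valid card numbers."""
    def replace(match: "re.Match[str]") -> str:
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED CARD]" if luhn_valid(digits) else match.group()

    return CARD_PATTERN.sub(replace, text)


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test number, not a real card.
    print(redact_cards("my card is 4111 1111 1111 1111 thanks"))
```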

C. Technology behind AI transcription

AI transcription uses machine learning to convert the speech in audio or video into text. It learns by training on vast sets of recordings paired with transcripts. Over time, it gets better at understanding accents, jargon, and speech patterns.

The process includes:

1. Machine learning

You can think of machine learning as teaching a computer how to understand human speech. The tool trains on thousands (or millions) of audio samples and their written transcripts.

Over time, it picks up on differences in accents, industry terms, slang, and even speech nuances. When it makes mistakes, those errors are used to retrain the model and improve accuracy.

2. Speech recognition

Once trained, the system hears your audio and begins transcription:

- It breaks the audio into small sound units (sometimes akin to syllables or phonemes).

- It maps those units to likely words or sub-words it already knows.

- It uses probability models to pick which words match best.

That’s how the tool converts what it hears into raw text.

3. Natural language processing (NLP)

After raw text comes meaning. NLP modules polish the output:

- They use context to select the correct homophones (e.g., “there” vs. “their”).

- They fix awkward or nonsensical phrasing.

- They smooth the flow so sentences read naturally.

This step ensures the transcript isn’t just literal word-for-word noise, but a meaningful narrative.

4. Specialized knowledge

Some AI transcription tools are boosted for specific fields like medicine, law, or tech:

- In healthcare, the tool will understand terms like “myocardial infarction.”

- In legal transcription, it handles phrases like “habeas corpus” or “res judicata.”

This domain adaptation helps accuracy in complex or technical conversations.
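
A simple stand-in for domain adaptation is a custom dictionary that snaps near-miss phrases to known terms. The sketch below uses Python's standard `difflib.SequenceMatcher` for fuzzy matching; the term list and the 0.8 similarity cutoff are assumptions, and production systems more often bias or fine-tune the recognition model itself than correct text afterward.

```python
from difflib import SequenceMatcher
from typing import List

# Hypothetical custom dictionary, using the examples from this section.
DOMAIN_TERMS = ["myocardial infarction", "habeas corpus", "res judicata"]


def correct_terms(text: str, terms: List[str] = DOMAIN_TERMS,
                  cutoff: float = 0.8) -> str:
    """Replace word windows that closely resemble a known domain term."""
    words = text.split()
    for term in terms:
        term_words = term.split()
        size = len(term_words)
        i = 0
        while i + size <= len(words):
            window = " ".join(words[i:i + size]).lower()
            if SequenceMatcher(None, window, term).ratio() >= cutoff:
                words[i:i + size] = term_words
                i += size
            else:
                i += 1
    return " ".join(words)


if __name__ == "__main__":
    print(correct_terms("patient had a myocardial infraction last year"))
```

A high cutoff keeps ordinary phrases untouched; lowering it catches more misspellings at the cost of more false corrections.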

Also, read “Speech Analytics: Meaning, Uses & Benefits”

D. Why transcription matters for customer support

You already know the pain points. Manual note-taking steals agent focus. QA teams can’t review every call. Managers guess at root causes.

Here’s how transcription fixes that.

- Easier access to information: Every call becomes searchable text. You find issues by keyword, topic, or outcome. New agents ramp up faster because they learn from real conversations from day one.

- Better agent training: You build libraries of sentiment analysis examples tied to outcomes. You show “what good looks like.” You create playbooks for empathy, objection handling, and closings.

- Faster turnaround times: Minutes replace hours. Manual transcription can take 4-6 hours per recorded hour. Automation delivers near real-time text for coaching the same day.

- Seamless integrations: Send transcripts to your CRM, help desk, and BI tools – trigger cases or tasks based on phrases. Tie transcript snippets to tickets and knowledge updates.

- Higher precision and accuracy: Modern systems reach single-digit word error rates on clear audio. Domain tuning and custom dictionaries boost accuracy on branded terms. Diarization makes it easy to attribute quotes.

- Lower costs: Human transcription can cost one to three dollars per minute. Automation slashes that. You also cut the hidden cost of manual QA and note-taking.

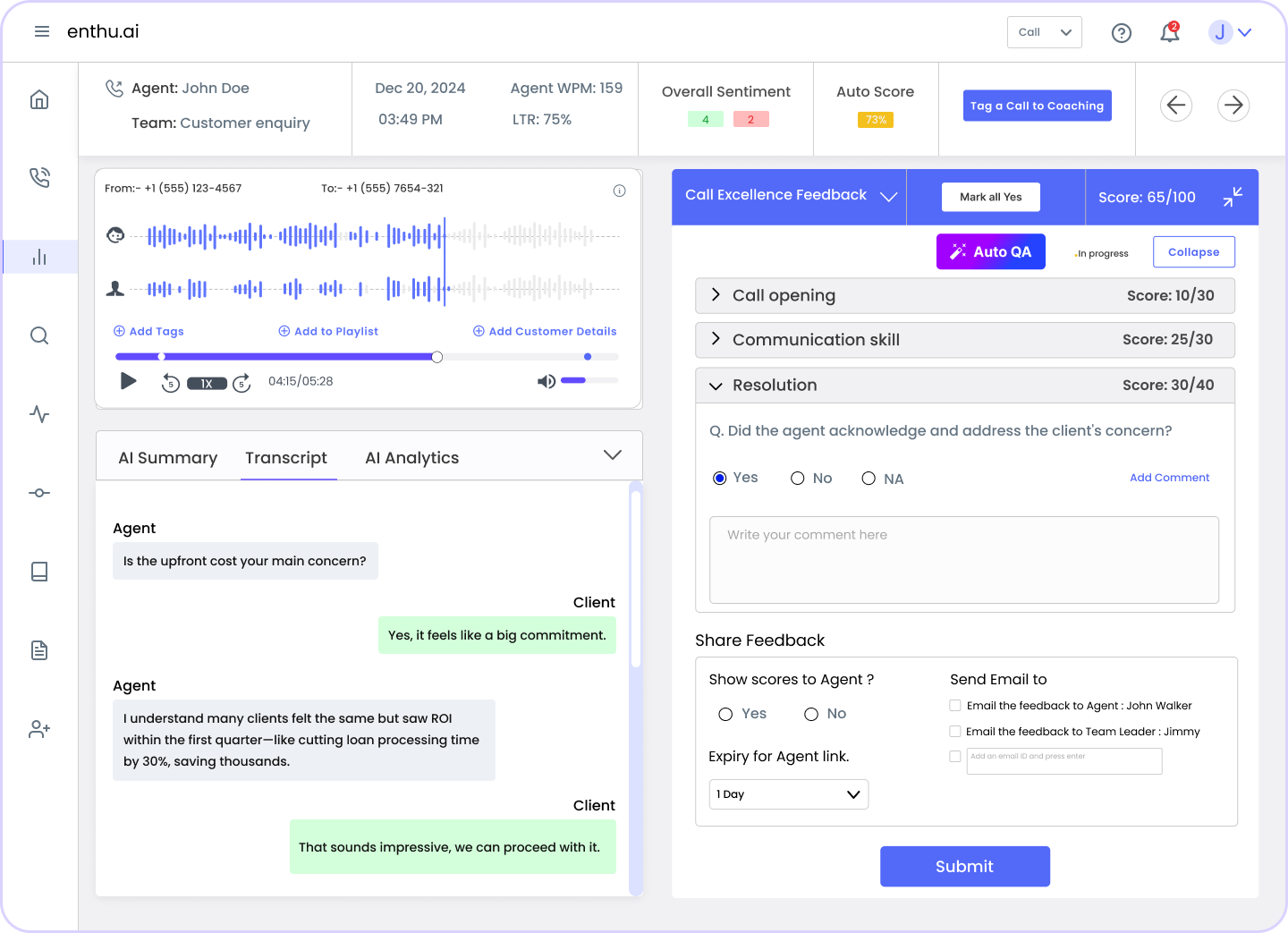

- Broader QA coverage: Many QA teams review under 2% of calls today. Automated QA for call centers lets you score 100% of interactions. You then sample smart, not random.

- Compliance confidence: Call center compliance monitoring flags risky phrases and missing disclosures. Redaction protects PII and card data. You meet PCI DSS, HIPAA, and SOC 2 needs with guardrails.
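
Word error rate (WER), mentioned in the accuracy point above, is worth being able to compute yourself when you test vendors on your own recordings. It is the number of substituted, deleted, and inserted words divided by the number of words in a trusted reference transcript. The sketch below is a standard edit-distance calculation; the sample sentences are invented, and real evaluations usually normalize punctuation and numbers first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] = edits needed to turn the first i-1 reference words
    # into the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


if __name__ == "__main__":
    human = "i would like a refund for my last bill"
    machine = "i would like refund for my last pill"
    # One dropped word and one wrong word out of nine reference words.
    print(f"WER: {word_error_rate(human, machine):.1%}")
```

A "single-digit" WER means fewer than 10 errors per 100 reference words.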

E. Applications of AI in call transcriptions

The best AI transcription does more than write down words. Here are five practical call-center features it enables.

- Automated QA and scoring: Use AI for call center quality assurance to score every call. Track greetings, authentication, hold etiquette, and closing. Identify handle-time outliers and policy gaps. Route high-risk calls to human review.

- Sentiment and intent analysis: Run call center sentiment analysis on transcripts to identify potential churn risks. Good sentiment analysis reads context, not single words. Tie negative sentiment to refunds, escalations, or repeat contacts. Use findings to fix upstream issues. Show leadership the benefits of sentiment analysis with clear success stories.

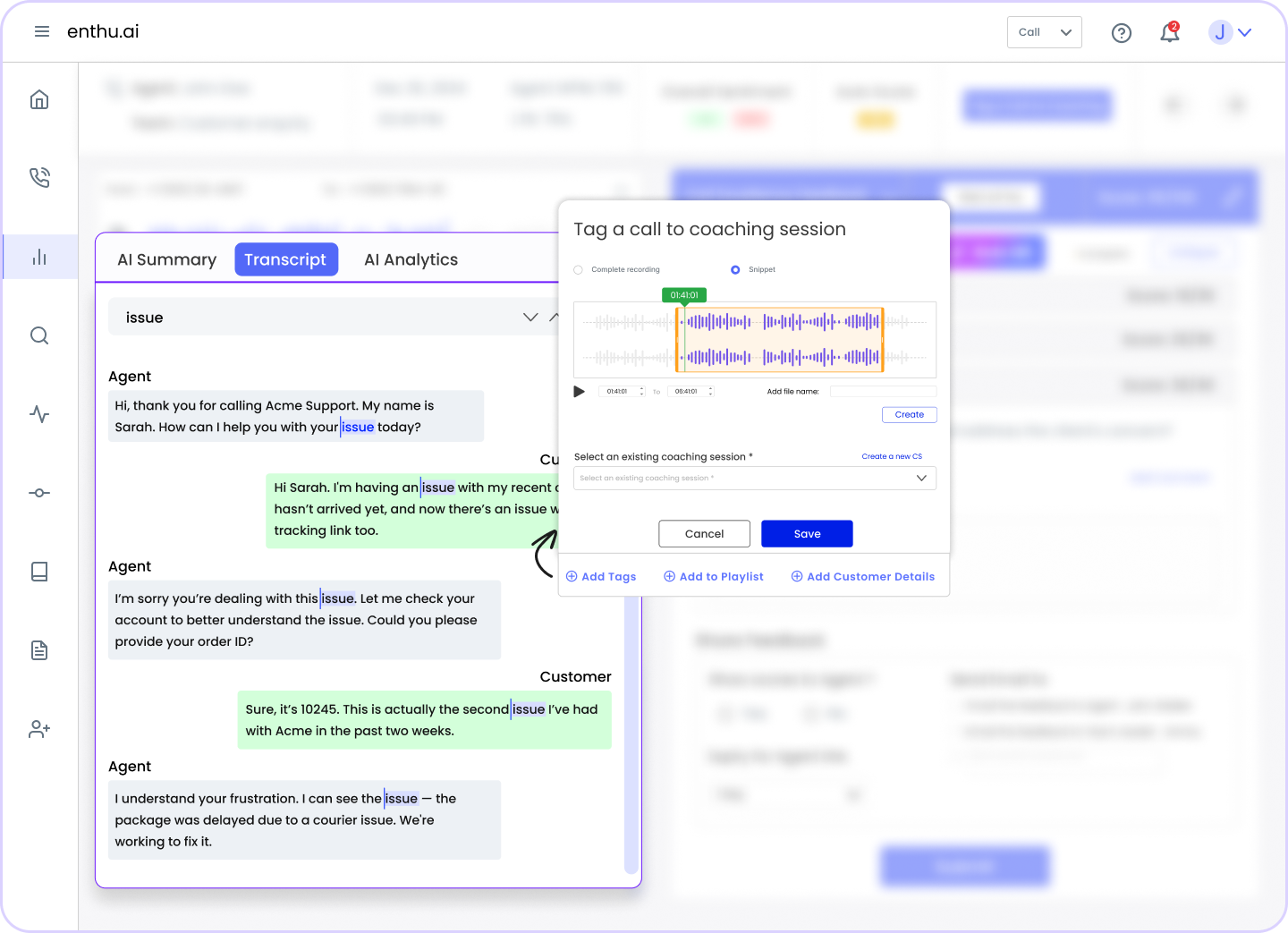

- AI-powered coaching for agents: Build targeted coaching plans from real moments. Share clips where empathy shifted a tough call. Reinforce top phrases that correlate with higher CSAT. Coach immediately, not weeks later.

- Knowledge and process improvements: Spot repeated “how do I reset” queries. Update macros and articles fast. Align product, marketing, and support on real customer language. Reduce avoidable contacts using data, not guesses.

- Risk and compliance workflows: Validate required disclosures on every regulated call. Flag risky promises. Confirm do-not-call handling. Prove adherence with clear transcript trails. Trigger retraining when compliance scores dip.
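
Automated scoring and compliance checks often start as plain phrase matching over the agent's side of the transcript. The sketch below checks for required moments, flags risky promises, and routes the call to human review when something is off. Every phrase pattern, check name, and the review rule here is hypothetical; your own scorecard and legal requirements define the real ones.

```python
import re
from typing import Dict, List

# Hypothetical scorecard: each required moment maps to a phrase pattern.
REQUIRED_CHECKS = {
    "greeting": r"\b(thank you for calling|good (morning|afternoon|evening))\b",
    "recording_disclosure": r"\bthis call (is|may be) (being )?recorded\b",
    "closing": r"\banything else\b",
}
RISKY_PHRASES = [r"\bguarantee(d)?\b", r"\bi promise\b"]


def score_call(agent_turns: List[str]) -> Dict[str, object]:
    """Score one call from the agent's transcript lines."""
    text = " ".join(agent_turns).lower()
    checks = {name: bool(re.search(pattern, text))
              for name, pattern in REQUIRED_CHECKS.items()}
    risk_flags = []
    for pattern in RISKY_PHRASES:
        match = re.search(pattern, text)
        if match:
            risk_flags.append(match.group())
    score = sum(checks.values()) / len(checks)
    return {
        "checks": checks,
        "risk_flags": risk_flags,
        "score": score,
        # Route to a human when a step is missing or a risky phrase appears.
        "needs_review": bool(risk_flags) or score < 1.0,
    }


if __name__ == "__main__":
    turns = [
        "Thank you for calling, this call may be recorded.",
        "I guarantee a refund by Friday.",
    ]
    print(score_call(turns))
```

Because the check runs on text, it can cover every call; reviewers then spend their time only on the calls where `needs_review` is true.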

F. Use cases of AI transcription across industries

Transcription helps wherever conversations drive outcomes. Here is where it shines.

1. Healthcare

Convert telehealth visits into clean summaries. Redact PHI while keeping clinical context. Speed up charting and referrals. Support revenue cycle and coding teams with accurate documentation and coding notes.

Furthermore, specialized Conversation Intelligence platforms like Enthu.AI transform patient-facing operations by automating quality assurance, monitoring HIPAA compliance, using sentiment analysis for agent coaching, and streamlining appointment and billing processes.

For more details on Enthu.AI’s healthcare use cases, see Conversation Intelligence for Healthcare.

2. Education and research

Turn lectures and interviews into searchable text. Support accessibility for students with disabilities. Analyze qualitative research at scale. Move faster from transcripts to findings.

3. Marketing and content creation

Repurpose webinars and podcasts into blogs and clips. Extract quotes and hooks with timestamps. Build SEO pages from real voice-of-customer language. Align content with product messaging.

4. Media and journalism

Transcribe interviews on deadline. Search by topic, person, or quote. Verify facts faster. Maintain high editorial standards while adhering to tight timelines.

5. Legal services

Capture depositions, client calls, and witness interviews. Keep timestamps and speaker labels for accurate citations. Maintain a chain of custody and redact sensitive data.

G. Trends and the future of AI transcription

Several shifts will shape your roadmap in the coming year.

- Real-time everything: Live transcription enables live coaching and prompts. Supervisors get alerts when sentiment dips or compliance steps are missed.

- Better diarization and overlap handling: Models now separate speakers even when people talk over each other. That boosts coaching accuracy on multi-party calls.

- Multilingual and code-switching capabilities: Systems handle language mixing seamlessly during calls. Auto-language detection removes setup friction for global teams.

- Domain-tuned accuracy: Fine-tuned models lift accuracy on industry terms, SKUs, and brand names. Expect easier on-the-fly dictionary updates.

- From text to insights: LLMs summarize, tag themes, and draft case notes. You move from “what was said” to “what to do next” in one flow.

- Privacy by design: Built-in redaction, data residency choices, and granular retention settings become standard. Expect clearer audit trails and admin controls.

- Synthetic voice detection: As voice cloning advances, detection becomes increasingly crucial. Systems will flag suspected synthetic segments to maintain trust and integrity.

- Elastic architectures: You will mix edge, browser, and cloud processing. That reduces latency and improves resilience during peak loads.

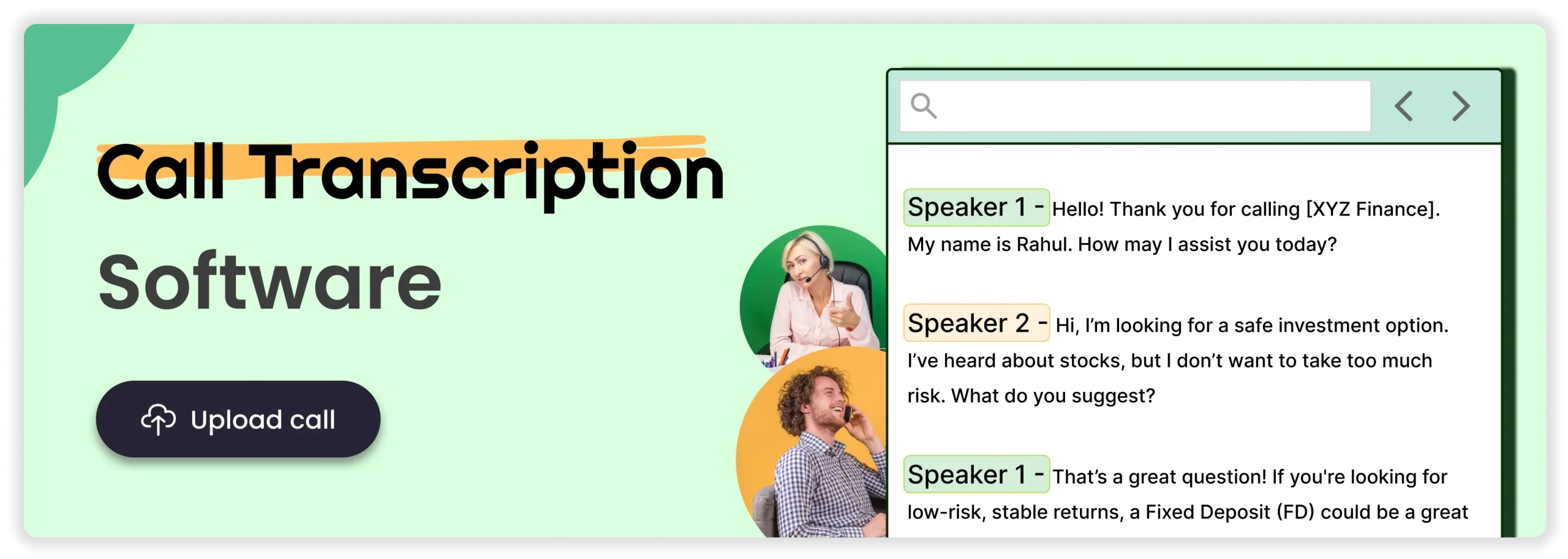

H. Enthu.AI call transcription: get clear and accurate insights

If you want an AI call transcription partner built for CX, Enthu.AI is made for you. Here’s why.

- Human-like accuracy, machine-like speed: Say goodbye to missed details and fuzzy notes. Enthu.AI captures every word with high precision, regardless of accent or background noise. You get reliable transcripts you can act on today.

- Instant search across transcripts: Search hours of calls in seconds. Find topics, keywords, or phrases across teams. Turn customer conversations into easily accessible knowledge for QA and product.

- Effortless speaker identification: See who said what, even in complex three-way calls. Follow the dialogue on screen as clearly as you did live.

- Auto language detection: Skip manual setup. Enthu.AI detects the primary language instantly and adapts on the fly.

- Fully automated pipeline: Enthu.AI ingests recordings via APIs. It converts speech to text, separates speakers, and automatically redacts sensitive data. You get clean transcripts without lifting a finger.

- Fastest implementation, best support, quick ROI: Get value in days, not months. Teams report faster onboarding, stronger coaching, and better QA coverage.

- Works with tools you already use: Connect Enthu.AI to your CRM, help desk, dialer, storage, and BI tools. Keep your workflows intact while you level up insights.

- Agents and managers love it: Agents get clear notes and feedback. Managers receive dashboards, search functionality, and automated scoring. Compliance teams get traceability and reliable redaction.

Ready to extract value from your conversations? Get started with Enthu’s 14-day free pilot and five free evaluations.

I. Practical tips for choosing an AI transcription solution

You want a partner, not a black box. Use this checklist.

- Accuracy across accents: Test with your real recordings.

- Speaker diarization: Ensure the tool accurately labels who’s speaking.

- Search & filtering: Can you find issues fast?

- Integration: Does it plug into your CRM and QA tools?

- Compliance features: Look for redaction and data controls.

- Speed and scalability: Can it handle your volume?

- Support and onboarding: How long does the implementation take?

Conclusion

AI transcription changes how you work. It makes audio data accessible and actionable. You get faster coaching, better compliance, and clear evidence for disputes.

Adoption continues to rise as models improve and integrations deepen.

If you want fast setup, accurate output, and a CX-focused partner, Enthu.AI gives you a 14-day free pilot, plus five free evaluations to test real calls.

Start with your most crucial call type. Run a few evaluations. You’ll likely spot quick wins in coaching and quality assurance.

FAQs

1. Is AI transcription safe?

Yes, when you choose secure vendors and set strong controls. Look for encryption, redaction, role-based access, and audit logs. Align your policies with PCI DSS, HIPAA, and SOC 2 where applicable.

2. Is AI transcription legal?

Yes, with proper consent and compliant data handling. Follow local call recording laws in your state and country. Announce recording, document consent, and respect retention and deletion rules.

3. Can AI transcribe phone calls?

Yes. Modern systems effectively handle mobile, VoIP, and landline recordings. Accuracy improves with clean audio, high-quality microphones, and domain-specific dictionaries. You can run it live or after the call.
