Summary

Automating call quality monitoring replaces manual sampling with AI-powered analysis of 100% of calls. This approach eliminates human bias, reduces QA costs by up to 70%, and delivers faster coaching feedback. The process involves defining QA rubrics, selecting automation tools, calibrating AI models, and continuously refining performance. Key benefits include improved compliance, better CSAT scores, and data-driven insights at scale.

A. What is automated call quality monitoring?

Automated call quality monitoring uses AI to evaluate customer interactions without manual intervention. The system transcribes calls, analyzes conversations, scores agent performance, and identifies coaching opportunities automatically.

Unlike traditional methods that review 2-5% of calls, automation evaluates every interaction. This comprehensive coverage reveals patterns impossible to detect through sampling.

Modern automated QA systems combine speech recognition, natural language processing, and machine learning. They assess metrics like empathy, compliance adherence, script following, and customer sentiment in real time.

1. Manual vs. automated QA – What’s the big difference?

| Aspect | Manual QA | Automated QA |
|---|---|---|
| Coverage | 2-5% of calls | 100% of interactions |
| Evaluation Speed | Hours to days | Real-time to minutes |
| Consistency | Varies by evaluator | Standardized criteria |
| Human Bias | High subjectivity | Objective scoring |
| Cost per Call | $5-10 per evaluation | Under $0.50 |
| Scalability | Limited by headcount | Unlimited |

Manual processes rely on supervisors listening to random call samples. This creates blind spots where compliance violations and quality issues go unnoticed. Evaluator bias also leads to inconsistent scores across different reviewers.

Automation removes these limitations by applying identical criteria to every call. The AI doesn’t have bad days or personal preferences. You get objective, repeatable evaluations at scale.

2. How automation works in QA

Automation in QA follows a clear, repeatable flow from call capture to insight. Here’s the concise breakdown:

- A call recording enters the system. Speech recognition turns audio into text with speaker labels.

- Natural language processing analyzes the transcript. The AI detects keywords, sentiment, and compliance phrases.

- The scoring engine applies your QA rubric. It generates a score plus key coaching moments.

- Results appear in dashboards with alerts. Supervisors see risky or unhappy calls instantly.
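To make these stages concrete, here is a minimal Python sketch of the flow that follows transcription. It assumes speech recognition has already produced speaker-labeled segments. In place of a real NLP model, it uses simple keyword matching. The names `Segment`, `evaluate_call`, `COMPLIANCE_PHRASES`, and `NEGATIVE_WORDS` are hypothetical, and the two-item rubric is illustrative only.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Segment:
    speaker: str  # diarization label: "agent" or "customer"
    text: str


@dataclass
class Evaluation:
    score: int
    coaching_moments: list = field(default_factory=list)
    alert: bool = False


# Hypothetical keyword lists standing in for real NLP models.
COMPLIANCE_PHRASES = ["this call may be recorded"]
NEGATIVE_WORDS = {"frustrated", "angry", "cancel", "ridiculous"}


def words(text):
    """Lowercase word tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))


def evaluate_call(segments):
    # NLP stage: check required phrases and negative customer language.
    agent_text = " ".join(s.text.lower() for s in segments if s.speaker == "agent")
    has_disclosure = all(p in agent_text for p in COMPLIANCE_PHRASES)
    negative_turns = [
        i for i, s in enumerate(segments)
        if s.speaker == "customer" and words(s.text) & NEGATIVE_WORDS
    ]

    # Scoring stage: toy rubric, disclosure worth 50 and a calm customer worth 50
    # (only 20 if any customer turn was negative).
    score = (50 if has_disclosure else 0) + (50 if not negative_turns else 20)
    moments = [f"customer negative at turn {i}" for i in negative_turns]
    if not has_disclosure:
        moments.append("missing recording disclosure")

    # Dashboard stage: flag risky or unhappy calls for supervisors.
    return Evaluation(score, moments, alert=not has_disclosure or bool(negative_turns))


call = [
    Segment("agent", "Hi, this call may be recorded. How can I help?"),
    Segment("customer", "I'm frustrated, my bill is wrong."),
]
result = evaluate_call(call)
print(result)
```

A production system would replace each stage with trained models. The shape of the flow stays the same: transcript in, then analysis, then a score with coaching moments, then an alert decision.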

3. Why automation is the future of contact center quality

According to McKinsey's 2025 report, 92% of executives expect to increase AI spending over the next three years. Contact centers adopting automation report 25-40% improvements in first-call resolution.

Regulatory requirements also continue tightening. Industries like healthcare, finance, and telecom face severe penalties for compliance failures. Automated monitoring checks every call for violations instead of hoping your 5% sample includes the problem calls.

The labor market presents additional challenges. Finding and retaining skilled QA analysts costs more each year. Automation redirects these resources toward strategic coaching instead of repetitive scoring.

B. Benefits of automating call quality monitoring

Implementing call center QA automation can significantly improve KPIs across your contact center operations. Here's how it affects some of the most important outcomes:

1. Achieve 100% coverage instead of 2-5% sampling

Traditional manual QA teams can only review a tiny fraction of interactions. With thousands of daily calls, this sampling approach misses critical issues.

Automation evaluates every single conversation regardless of volume. You identify compliance breaches, customer complaints, and training needs that would otherwise slip through. This comprehensive visibility transforms decision-making.

2. Improve CSAT, compliance, and coaching outcomes

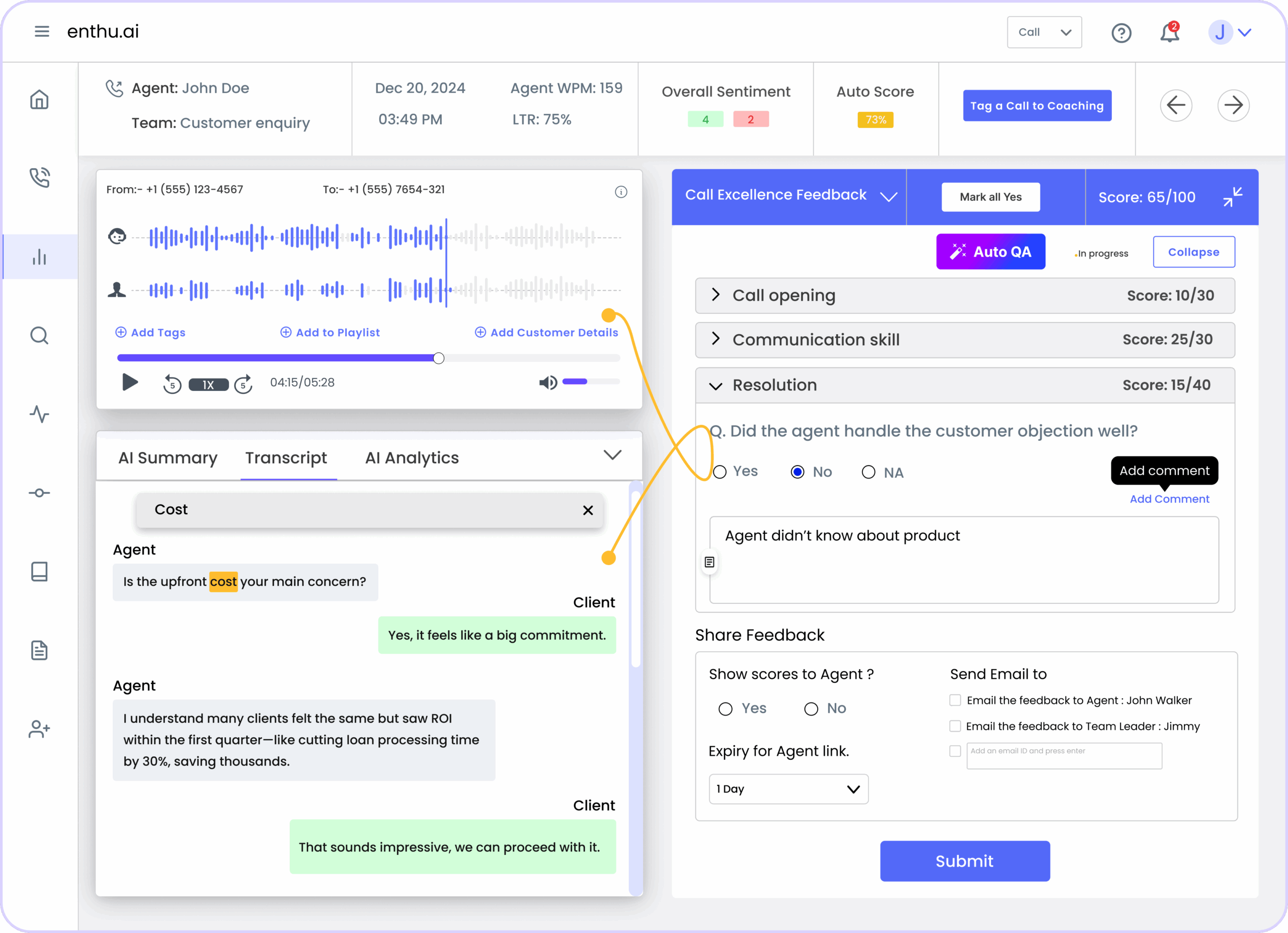

Automated systems detect sentiment changes during conversations. When a customer’s tone shifts from neutral to frustrated, the AI flags that moment for review.

This granular insight helps coaches identify exactly where agents need improvement. Instead of vague feedback like “be more empathetic,” supervisors can point to specific call segments.
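Here is a minimal sketch of how such a flag might work. It assumes an upstream model has already given each conversational turn a sentiment score between -1 and 1. The thresholds (-0.2 for "neutral", -0.5 for "frustrated") and the function name are hypothetical. The returned timestamps are the call segments a coach would jump to.

```python
def flag_sentiment_shifts(turns, neutral_floor=-0.2, frustrated_ceiling=-0.5):
    """Return timestamps where the customer moves from neutral (or better) to frustrated.

    turns: (speaker, timestamp_seconds, sentiment) tuples in call order,
    with sentiment in [-1, 1] from some upstream sentiment model.
    """
    flagged = []
    previous = None  # last customer sentiment seen
    for speaker, timestamp, sentiment in turns:
        if speaker != "customer":
            continue
        if (previous is not None and previous >= neutral_floor
                and sentiment <= frustrated_ceiling):
            flagged.append(timestamp)
        previous = sentiment
    return flagged


turns = [
    ("customer", 5.0, 0.1),
    ("agent", 12.0, 0.3),
    ("customer", 20.0, -0.1),
    ("customer", 31.5, -0.7),  # tone drops sharply here
    ("customer", 40.0, -0.8),  # already frustrated, not a new shift
]
print(flag_sentiment_shifts(turns))  # [31.5]
```

The sketch compares only customer turns with each other, so the agent's own tone cannot hide or trigger a shift.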

Customer satisfaction scores typically rise 15-20% within six months of implementing automated QA. The consistent quality and faster issue resolution drive these improvements.

Our client CallHippo reduced revenue churn by 20% and increased CSAT by 21% by automating call quality monitoring. Omesh Makhija, VP of CallHippo, said, “Enthu has made our customer conversation data searchable. I am particularly impressed by the way Enthu helped us identify customer dissatisfaction signals and address the concerns proactively, thus reducing our churn.”

3. Reduce QA costs and human bias

Manual call review costs $5-10 per evaluation when you factor in analyst salaries and time. At 1,000 calls per day, a 5% sample (50 calls) costs up to $500 daily, and reviewing every call by hand would cost up to $10,000 daily.

Automated solutions reduce this to under $0.50 per call while evaluating 100% of interactions. The ROI calculation is straightforward and compelling.
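The per-call figures above can be turned into daily totals with a quick calculation. This sketch uses two rates quoted in this section: the upper bound of the manual range ($10) and the $0.50 automated ceiling. The volume of 1,000 calls per day is hypothetical, so substitute your own volumes and rates.

```python
def daily_qa_cost(calls_per_day, coverage, cost_per_eval):
    """Daily cost of evaluating a fraction `coverage` (0-1) of calls."""
    return calls_per_day * coverage * cost_per_eval


CALLS = 1000
manual_sample = daily_qa_cost(CALLS, 0.05, 10.00)  # 5% sample at $10 each
manual_full = daily_qa_cost(CALLS, 1.00, 10.00)    # every call reviewed by hand
automated_full = daily_qa_cost(CALLS, 1.00, 0.50)  # every call at the $0.50 ceiling

print(f"Manual 5% sample: ${manual_sample:,.0f}/day")
print(f"Manual 100%:      ${manual_full:,.0f}/day")
print(f"Automated 100%:   ${automated_full:,.0f}/day")
```

At these rates, a 5% manual sample (50 calls) and full automated coverage (1,000 calls) both cost about $500 a day. Automation therefore evaluates 20 times as many calls for roughly the same spend.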

Human evaluators bring unconscious biases to scoring. One supervisor might rate an interaction as “good,” while another scores it “needs improvement.” This inconsistency confuses agents and undermines coaching credibility.

AI applies identical criteria to every call. The scoring remains objective and repeatable regardless of who reviews the results.

4. Deliver faster, data-driven feedback

Automated systems generate evaluations within minutes of call completion. Some platforms even provide real-time alerts during live conversations. This immediacy enables in-the-moment coaching corrections.

Data-driven insights also reveal patterns across your entire team. You might discover that 60% of low-scoring calls involve a specific product issue. This systemic view guides training priorities more effectively than individual call reviews.
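Here is a minimal sketch of that kind of team-level analysis. It assumes each evaluated call already carries a QA score and a list of topic tags. The field names, tag values, and the below-60 cutoff for "low-scoring" are hypothetical.

```python
from collections import Counter


def tag_share_among_low_scores(calls, threshold=60):
    """Share of below-threshold calls that carry each tag, most common first."""
    low = [c for c in calls if c["score"] < threshold]
    if not low:
        return {}
    # set() so a tag repeated on one call is only counted once for that call
    counts = Counter(tag for c in low for tag in set(c["tags"]))
    return {tag: n / len(low) for tag, n in counts.most_common()}


calls = [
    {"score": 45, "tags": ["product_x", "billing"]},
    {"score": 50, "tags": ["product_x"]},
    {"score": 55, "tags": ["shipping"]},
    {"score": 58, "tags": ["product_x"]},
    {"score": 40, "tags": ["billing"]},
    {"score": 90, "tags": ["product_x"]},  # high score, excluded
]
shares = tag_share_among_low_scores(calls)
print(shares)
```

In this made-up sample, "product_x" appears on 3 of the 5 low-scoring calls (60%). That is the kind of systemic signal that points training at a product issue rather than at individual agents.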

C. Key components of an automated QA system

Every effective automated quality monitoring solution relies on several core technologies working together seamlessly. Here are the essential components you need to understand:

1. Speech analytics and transcription accuracy

Accurate transcription forms the foundation of automated QA. The system must convert speech to text with 95%+ accuracy or downstream analysis suffers.
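Transcription accuracy is commonly reported as 1 minus the word error rate (WER). WER counts the word substitutions, deletions, and insertions needed to turn the ASR output into a human reference transcript, divided by the number of reference words. Under that convention, 95% accuracy means a WER of 5% or lower. Below is a minimal sketch using word-level edit distance; the example sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # dist[i][j] = edits needed to turn the first i ref words into the first j hyp words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)


wer = word_error_rate("please confirm your account number",
                      "please confirm you account number")
accuracy = 1 - wer  # 1 substitution in 5 words -> 80% accuracy
```

One error in a five-word sentence already costs 20 points, so in practice you would pool errors and reference words across a representative sample of your own calls.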

Modern automatic speech recognition handles accents, background noise, and industry jargon effectively. Training on diverse vocal patterns can improve recognition accuracy by as much as 7% over industry-standard benchmarks.

Speaker diarization separates agent and customer voices in the transcript. Timestamp alignment links transcript segments to exact audio moments.

2. AI scoring models: what they measure

Automated scoring evaluates multiple dimensions simultaneously. Common criteria include:

- Greeting quality – Did the agent identify themselves and offer assistance?

- Active listening – Acknowledgment phrases and relevant follow-up questions

- Empathy indicators – Language showing understanding of customer concerns

- Compliance adherence – Required disclosures and policy statements

- Problem resolution – Whether the issue was addressed effectively

- Professional language – Avoiding slang, maintaining courtesy

Each criterion gets weighted based on your business priorities. Financial services companies might emphasize compliance heavily, while retail focuses more on sales conversion.
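One simple way to apply those weights is a weighted average of per-criterion scores. The sketch below assumes each criterion has already been scored 0-100. The criterion names and the compliance-heavy weights are hypothetical, chosen to mirror the financial services example.

```python
def weighted_qa_score(criterion_scores, weights):
    """Weighted average of per-criterion scores (0-100); weights are relative."""
    missing = set(weights) - set(criterion_scores)
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(criterion_scores[c] * w for c, w in weights.items()) / total_weight


# Hypothetical compliance-heavy weighting for a financial services team.
weights = {"compliance": 5, "problem_resolution": 3, "empathy": 2}
scores = {"compliance": 100, "problem_resolution": 80, "empathy": 60}
print(weighted_qa_score(scores, weights))  # 86.0
```

A retail team might instead put the largest weight on a sales-conversion criterion. Because the weights are relative, they do not need to sum to any particular total.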

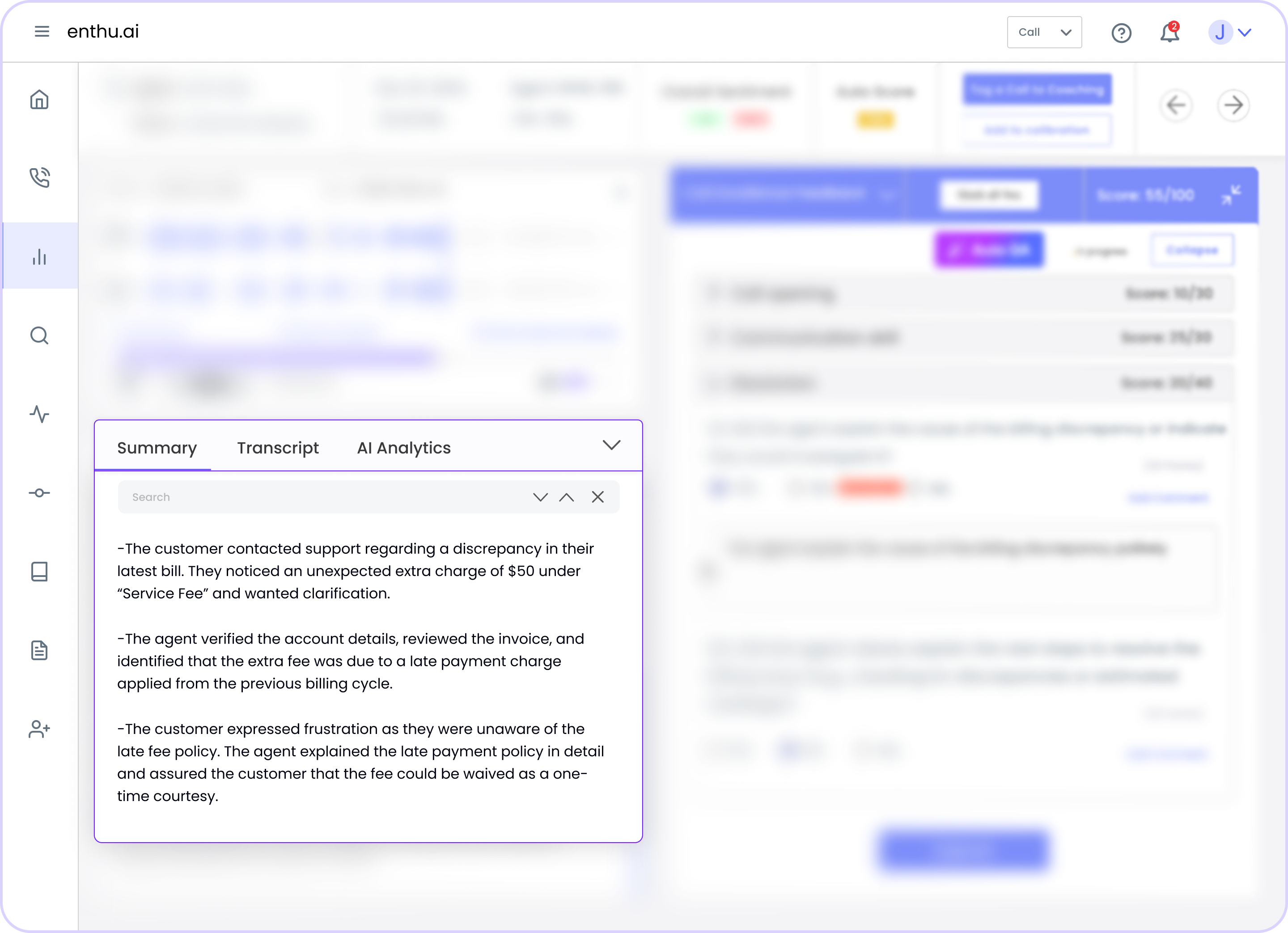

3. Call summarization & auto-tagging

AI-generated summaries distill 10-minute calls into concise paragraphs. These capture key topics, customer requests, and resolution outcomes automatically.

Auto-tagging categorizes calls by subject, issue type, and customer intent. You can instantly filter for all “billing dispute” calls or “competitor mention” conversations.
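Here is a rule-based sketch of auto-tagging that assumes plain-text transcripts. Production platforms typically rely on trained intent classifiers rather than regular expressions. The tag names and patterns in `TAG_RULES` are hypothetical.

```python
import re

# Hypothetical tag rules: each tag fires if any of its patterns matches.
TAG_RULES = {
    "billing_dispute": [r"\bovercharg\w*", r"\bwrong (?:bill|charge)\b", r"\brefund\b"],
    "competitor_mention": [r"\bcompetitor\b", r"\bswitch(?:ing)? to\b"],
    "cancellation": [r"\bcancel\w*"],
}


def auto_tag(transcript):
    """Return the sorted list of tags whose patterns appear in the transcript."""
    text = transcript.lower()
    return sorted(
        tag for tag, patterns in TAG_RULES.items()
        if any(re.search(p, text) for p in patterns)
    )


def filter_calls(calls, tag):
    """Return IDs of calls (dict of call_id -> transcript) carrying the given tag."""
    return [call_id for call_id, transcript in calls.items() if tag in auto_tag(transcript)]


calls = {
    "c1": "I was overcharged and I'm thinking of switching to another provider.",
    "c2": "How do I reset my password?",
    "c3": "I need a refund for last month.",
}
print(filter_calls(calls, "billing_dispute"))  # ['c1', 'c3']
```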

This organization transforms thousands of recordings into searchable, actionable data. QA teams spend less time hunting for specific call types and more time analyzing trends.

4. Real-time alerts for compliance and escalation

The most advanced systems monitor live calls as they happen. When the AI detects compliance language violations or rising customer anger, it alerts supervisors immediately.

Managers can then join the call with whisper coaching or take over if needed. This intervention prevents small issues from becoming major problems.

Fraud detection algorithms can also flag suspicious patterns, such as unexpected requests for Social Security numbers. Real-time protection helps stop data breaches before damage occurs.
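The sketch below shows the general shape of a real-time rule check. A generator consumes transcript segments as they arrive and yields an alert the moment a rule fires, instead of waiting for the call to end. It assumes a streaming speech-recognition feed that delivers `(speaker, text, sentiment)` tuples. The regular expressions, the -0.6 anger threshold, the two-turn run length, and the choice to watch the agent side for sensitive-data requests are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Alert:
    call_id: str
    kind: str
    text: str


# Hypothetical rules; real deployments tune these to their own policies.
SENSITIVE_REQUEST = re.compile(r"\b(social security number|ssn)\b", re.IGNORECASE)
PROHIBITED_LANGUAGE = re.compile(r"\b(guaranteed approval|no risk at all)\b", re.IGNORECASE)


def monitor(call_id, segments, anger_threshold=-0.6, anger_run=2):
    """Yield alerts as live segments arrive, without waiting for the call to end.

    segments: iterable of (speaker, text, sentiment), sentiment in [-1, 1].
    """
    negative_streak = 0
    for speaker, text, sentiment in segments:
        if speaker == "agent" and SENSITIVE_REQUEST.search(text):
            yield Alert(call_id, "sensitive_data_request", text)
        if speaker == "agent" and PROHIBITED_LANGUAGE.search(text):
            yield Alert(call_id, "compliance_language", text)
        if speaker == "customer":
            # Count consecutive strongly negative customer turns.
            negative_streak = negative_streak + 1 if sentiment <= anger_threshold else 0
            if negative_streak == anger_run:
                yield Alert(call_id, "rising_anger", text)


live = [
    ("agent", "Can you read me your social security number?", 0.0),
    ("customer", "Why do you need that?", -0.3),
    ("customer", "This is ridiculous.", -0.7),
    ("customer", "Get me a manager now.", -0.9),
]
for alert in monitor("c42", live):
    print(alert.kind, "->", alert.text)
```

Because `monitor` is a generator, a supervisor notification can go out while the call is still in progress. That is what makes whisper coaching or a takeover possible.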

5. Integrations with CRM, WFM, and ticketing tools

Automated QA systems should integrate seamlessly with your existing tech stack, pulling CRM data for contextual evaluations, feeding performance insights into workforce management, and creating automatic coaching tickets when scores drop.

Flexible APIs and pre-built connectors for Salesforce, Zendesk, and Five9 enable fast, customized deployments across diverse environments.

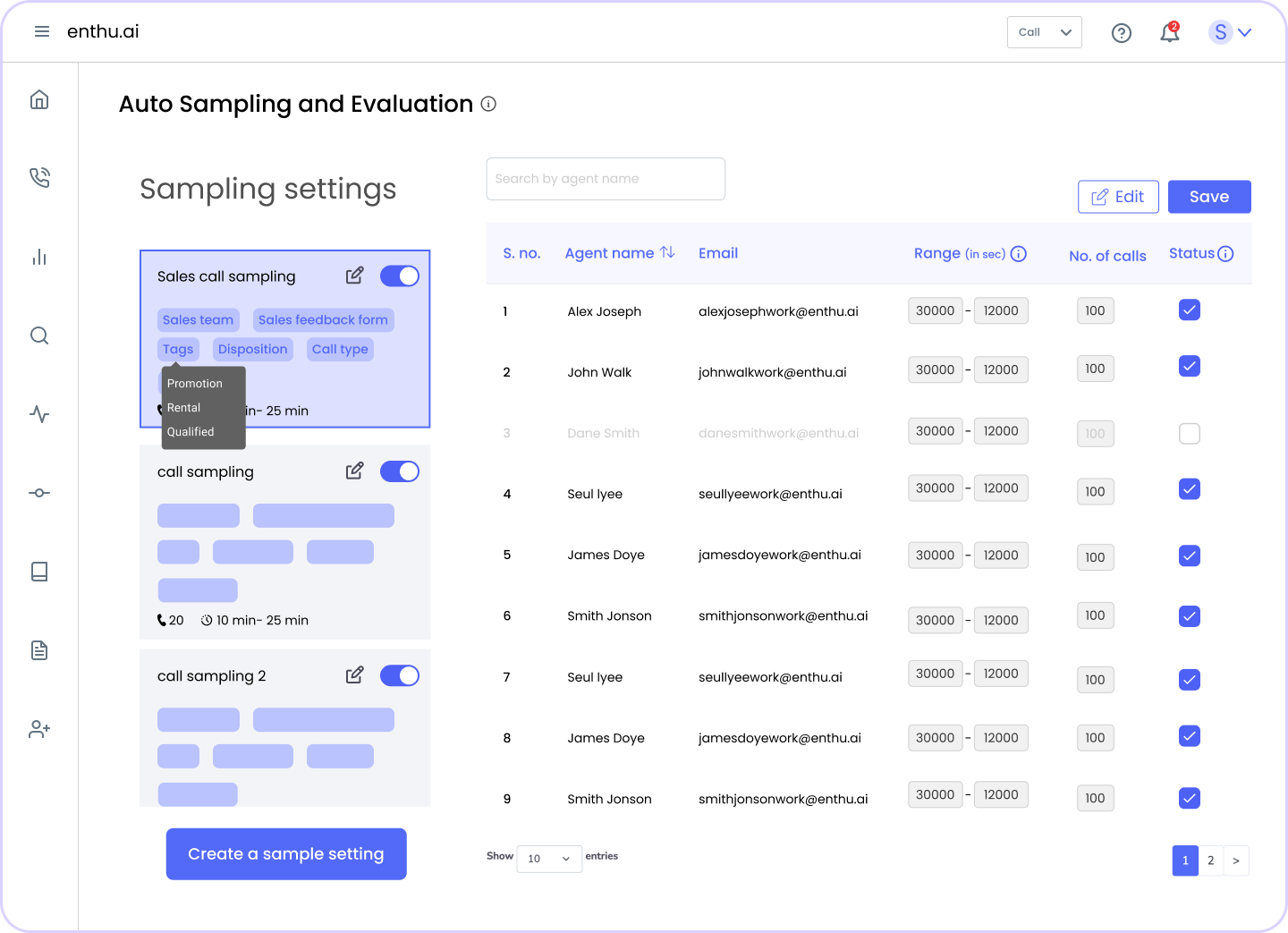

D. How to automate call quality monitoring (step-by-step)

Deploying automated QA successfully requires a structured implementation roadmap that minimizes risk, accelerates time to value, and speeds up ROI.

Step 1 – Define QA rubrics based on business goals

Start by clarifying what “quality” means for your specific contact center. Different organizations prioritize different outcomes.

Involve frontline agents in rubric development. They understand which criteria actually impact results versus bureaucratic checkbox items. This participation also increases buy-in during rollout.

Create 8-12 clear, measurable criteria. Each should tie directly to business KPIs you already track. Avoid vague standards like “be professional” in favor of specific behaviors like “use the customer’s name twice during the call”.
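Specific behaviors like this are easy to check automatically. Here is a minimal sketch that assumes the agent's turns are available as separate strings and that the customer's name comes from CRM data. The function name is hypothetical, and the sketch treats the criterion as "at least twice".

```python
import re


def name_usage(agent_turns, customer_name, required=2):
    """Count case-insensitive, whole-word uses of the customer's name by the agent."""
    pattern = re.compile(rf"\b{re.escape(customer_name)}\b", re.IGNORECASE)
    count = sum(len(pattern.findall(turn)) for turn in agent_turns)
    return count >= required, count


agent_turns = [
    "Hi Maria, thanks for calling.",
    "Let me check that order for you.",
    "Is there anything else I can help with, maria?",
]
print(name_usage(agent_turns, "Maria"))  # (True, 2)
```

Returning the count alongside the pass/fail result lets the evaluation show agents exactly how close they came.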

Step 2 – Choose automation tools (build vs. buy)

Most organizations find better ROI purchasing specialized platforms. Vendors like Enthu.AI offer pre-trained models that work immediately. Implementation takes hours instead of months.

Evaluate vendors on these criteria:

- Transcription accuracy for your specific industry terminology

- Customizable scoring models matching your rubric

- Integration capabilities with your phone system and CRM

- Real-time monitoring and alerting features

- Data security certifications (SOC 2, HIPAA if applicable)

- Pricing model that scales with your call volume

Step 3 – Calibrate AI with human QA insights

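Start by having your experienced QA analysts manually score a representative sample of calls to create human benchmarks. Then compare the AI’s automated scores against these benchmarks. Where do they differ significantly? The AI might be too harsh on certain criteria or miss nuances your team considers important.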

Adjust scoring thresholds and keyword lists based on these findings. If your agents use industry jargon the AI doesn’t recognize, add those terms.
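Here is a minimal sketch of that comparison, assuming AI and human analysts have scored the same calls on each criterion (0-100). A negative gap means the AI is harsher than your team. The 5-point tolerance and the sample scores are hypothetical.

```python
from statistics import mean


def calibration_gaps(paired_scores, tolerance=5.0):
    """Return criteria whose mean (AI - human) score gap exceeds the tolerance.

    paired_scores: {criterion: [(ai_score, human_score), ...]} for the same calls.
    Negative gaps mean the AI scores more harshly than human reviewers.
    """
    gaps = {}
    for criterion, pairs in paired_scores.items():
        gap = mean(ai - human for ai, human in pairs)
        if abs(gap) > tolerance:
            gaps[criterion] = gap
    return gaps


paired = {
    "empathy": [(60, 80), (70, 85), (65, 75)],  # AI consistently lower
    "greeting": [(90, 92), (100, 98)],          # close agreement
}
print(calibration_gaps(paired))
```

Here the AI trails human reviewers by an average of 15 points on empathy, which would make empathy thresholds and keyword lists the first place to adjust.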

Run calibration sessions quarterly. As your business evolves, scoring criteria should adapt.

Step 4 – Automate workflows (scoring, alerts, coaching loops)

Once calibrated, automate the entire QA workflow. Calls should flow from recording to scoring to feedback delivery without manual steps.

Set up automated alert rules for critical situations:

- Compliance violations trigger immediate supervisor notifications

- CSAT scores below 3.0 create coaching tickets

- Positive customer mentions generate recognition tasks
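These rules can be expressed as a small routing function that runs on every scored call. The field names (`compliance_violations`, `csat`, `positive_mention`) and action names are hypothetical. In practice, each action would call your ticketing or notification integration.

```python
def route(evaluation):
    """Map one scored call to follow-up actions using the three rules above."""
    actions = []
    if evaluation.get("compliance_violations"):
        actions.append(("notify_supervisor", evaluation["call_id"]))
    csat = evaluation.get("csat")
    if csat is not None and csat < 3.0:
        actions.append(("create_coaching_ticket", evaluation["agent_id"]))
    if evaluation.get("positive_mention"):
        actions.append(("create_recognition_task", evaluation["agent_id"]))
    return actions


print(route({"call_id": "c7", "agent_id": "a3",
             "compliance_violations": ["missing disclosure"], "csat": 2.5}))
```

Keeping the rules independent means a single call can trigger several actions. For example, a compliance miss on a low-CSAT call produces both a supervisor notification and a coaching ticket.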

Integrate coaching delivery into your existing agent tools. Create automated dashboards showing team and individual performance trends. Managers should see at-a-glance which agents need support and which deserve recognition.

Step 5 – Monitor performance and refine over time

Track key metrics weekly during the first three months. Watch for drift where AI accuracy degrades over time.

Collect agent feedback about scoring fairness. If your team consistently disagrees with AI evaluations, recalibration might be needed.

Measure business impact through standard KPIs:

- First-call resolution rate changes

- Average handle time trends

- CSAT score improvements

- Compliance violation reduction

Expand automation gradually. Start with high-volume call types where ROI is clearest. Add complexity like omnichannel evaluation after mastering voice calls.

E. Common challenges and how to solve them

Even the best automation projects encounter obstacles during the deployment and adoption phases. Here are the most common challenges teams face and practical solutions to overcome them:

1. Ensuring transcription and scoring accuracy

Industry-specific terminology and background noise degrade transcription quality, causing incorrect interpretations of medical terms, financial products, and technical jargon.

Solution: Use tools with custom vocabulary and domain tuning, and pair them with good noise-canceling headsets for cleaner input and better accuracy.

2. Avoiding false flags in compliance alerts

Overly sensitive compliance detection creates alert fatigue, leading supervisors to ignore notifications when they receive dozens of false alarms daily.

Solution: Calibrate thresholds using real violation patterns and introduce severity levels so only critical issues trigger real-time alerts.

3. Driving adoption with QA, supervisors, and agents

Agents resist automated monitoring, fearing unfair or opaque scoring that feels arbitrary and punitive rather than helpful.

Solution: Explain how scoring works, share transcripts and scores openly, and position automation as a coaching aid supported by supervisor champions.

4. Protecting customer data and privacy

Call recordings contain sensitive personal information like credit card numbers and Social Security numbers, creating legal and reputational risks if mishandled.

Solution: Enable redaction and masking for payment and ID data, enforce strict access controls, and work with vendors that hold robust security certifications.
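Here is a minimal redaction sketch for transcripts, assuming US-style Social Security numbers and 13-16 digit card numbers. It applies the Luhn checksum to cut false positives on card-like digit runs such as order numbers. The patterns and mask formats are hypothetical, and the sketch covers text transcripts only, not the audio recordings.

```python
import re

# Hypothetical patterns: US SSNs and 13-16 digit card numbers with optional separators.
SSN = re.compile(r"\b\d{3}-?\d{2}-?\d{4}\b")
CARD = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def luhn_ok(digits):
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text):
    def mask_card(match):
        digits = re.sub(r"\D", "", match.group())
        # Keep the last four digits; leave non-card digit runs untouched.
        return f"[CARD ****{digits[-4:]}]" if luhn_ok(digits) else match.group()

    text = CARD.sub(mask_card, text)  # cards first so SSN matching can't split them
    return SSN.sub("[SSN REDACTED]", text)


print(redact("My card is 4111 1111 1111 1111 and SSN 123-45-6789"))
```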

5. Avoiding “black box” AI scoring problems

Agents become frustrated when they don’t understand why they received certain scores, making “the AI rated me low” feel arbitrary and unfair.

Solution: Use explainable scoring with highlighted call snippets and keep human review in the loop, supported by regular calibration against QA standards.

F. How to measure success of automated QA

Demonstrating ROI and tracking continuous improvement requires monitoring the right metrics at regular intervals. Here’s what you should measure to prove automation value:

1. KPIs to track (accuracy, efficiency, CSAT, AHT, etc.)

Monitor these key performance indicators before and after automation:

Quality Metrics:

- Overall QA score averages

- Compliance adherence rates

- Customer satisfaction (CSAT) scores

- First-call resolution percentage

Efficiency Metrics:

- Average handle time (AHT)

- Calls evaluated per QA analyst hour

- Time from call completion to feedback delivery

- Cost per quality evaluation

Business Impact:

- Customer retention rates

- Sales conversion percentages

- Repeat call frequency

- Agent turnover reduction

2. QA coverage and evaluation speed

- Track the percentage of calls receiving quality evaluation.

- Measure evaluation speed from call end to score availability.

- Calculate QA analyst productivity changes.
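Both coverage and evaluation speed can be computed from two timestamps per call. Here is a minimal sketch, assuming each call record has an `ended_at` time and, once evaluated, a `scored_at` time (hypothetical field names). It reports coverage as a percentage and speed as the median delay in minutes.

```python
from datetime import datetime
from statistics import median


def coverage_and_latency(calls):
    """Return (percent of calls scored, median minutes from call end to score)."""
    if not calls:
        return 0.0, None
    scored = [c for c in calls if c.get("scored_at") is not None]
    coverage = 100.0 * len(scored) / len(calls)
    delays = [(c["scored_at"] - c["ended_at"]).total_seconds() / 60 for c in scored]
    return coverage, (median(delays) if delays else None)


def t(hhmm):
    """Helper: a same-day timestamp from 'HH:MM' (illustrative only)."""
    return datetime.strptime(f"2025-01-15 {hhmm}", "%Y-%m-%d %H:%M")


calls = [
    {"ended_at": t("10:00"), "scored_at": t("10:04")},
    {"ended_at": t("10:10"), "scored_at": t("10:12")},
    {"ended_at": t("10:20"), "scored_at": t("10:30")},
    {"ended_at": t("10:30"), "scored_at": None},  # not yet evaluated
]
print(coverage_and_latency(calls))  # (75.0, 4.0)
```

The median is less distorted than the mean by the occasional call that sits in a processing queue for hours.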

3. Coaching impact and agent performance lift

- Monitor individual agent score trajectories over time.

- Track the time gap between issue identification and coaching delivery.

- Measure agent engagement with feedback. Are they accessing their evaluations and transcript details?

- Survey agents quarterly about feedback quality and actionability.

4. Audit mechanisms for AI score validation

- Implement ongoing human audits to verify AI accuracy.

- Calculate inter-rater reliability between AI and human scores.

- Track score distribution patterns.

- Monitor agent appeals and overturns.
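Inter-rater reliability between AI and human evaluators is often measured with Cohen's kappa. Kappa corrects raw agreement for the agreement expected by chance: 1 means perfect agreement and 0 means chance level. Here is a minimal sketch for pass/fail labels on the same calls; the sample labels are made up.

```python
from collections import Counter


def cohens_kappa(ai_labels, human_labels):
    """Chance-corrected agreement between two raters labeling the same calls."""
    if not ai_labels or len(ai_labels) != len(human_labels):
        raise ValueError("need two non-empty label lists of equal length")
    n = len(ai_labels)
    observed = sum(a == h for a, h in zip(ai_labels, human_labels)) / n
    ai_counts, human_counts = Counter(ai_labels), Counter(human_labels)
    # Probability both raters pick the same label by chance, given their label rates.
    expected = sum(ai_counts[label] * human_counts[label] for label in ai_counts) / (n * n)
    if expected == 1:  # both raters used one identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


ai = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail", "pass", "pass"]
human = ["pass", "pass", "fail", "fail", "fail", "pass", "pass", "pass", "pass", "pass"]
print(round(cohens_kappa(ai, human), 2))  # 0.52
```

Here the raters agree on 8 of 10 calls. Because both mark most calls "pass", however, much of that agreement is expected by chance, so kappa is only about 0.52.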

G. Build vs. buy: Should you automate QA in-house or with a vendor?

Choosing between developing custom QA automation or purchasing a vendor solution impacts your timeline, costs, and long-term flexibility. Here’s how to evaluate the right path for your organization:

1. Cost, skill set, and time-to-value comparison

Deciding whether to build or buy starts with understanding the real cost of people, time, and missed opportunities.

- Building in-house demands expensive specialists in data science, ML engineering, and DevOps, plus 12-18 months of development and ongoing tuning.

- Over three years, total spend on an in-house build can reach the high six or seven figures. Buying a mature platform, by contrast, offers pre-trained models, deployment in weeks, and far lower cost for most teams under 500 agents.

2. Data security and compliance considerations

Security and compliance can be a deciding factor, especially in regulated industries.

- When you build internally, your team must design and maintain encryption, access controls, logging, and compliance processes end to end.

- With a vendor, you usually gain audited controls, security certifications, and clear data policies, so it’s critical to ask about data location, encryption, retention, and certifications if you handle sensitive customer information.

3. When a hybrid approach makes sense

A hybrid model gives you flexibility when standard solutions don’t fully match your needs.

- Hybrid works well if you want vendor-grade transcription and scoring but need custom analytics, fraud models, or domain-specific evaluation on top.

- The most effective path is often to start with a vendor solution for quick wins, then layer in custom components later if your internal team and unique requirements truly justify it.

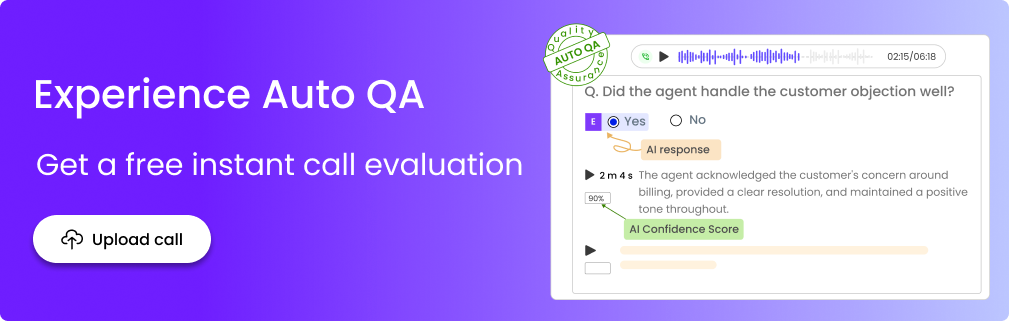

Upgrade QA with the best call center quality monitoring software

Struggling to understand what’s really happening on your calls without burning out your QA team?

Enthu.AI turns every conversation into a clear, structured view of quality. It delivers accurate transcripts, concise summaries, and smart tags by topic and sentiment, so you quickly spot issues, trends, and coaching opportunities.

AI-driven scoring keeps evaluations consistent and fair, while real-time cues surface conversations that need attention right now.

You cut manual effort, gain reliable visibility, and help agents improve with specific, call-based feedback.

Ready to experience it? Book a quick Enthu.AI walkthrough for your contact center.

FAQs

1. What is automated call quality monitoring?

Automated call quality monitoring uses AI to evaluate customer interactions without manual review. The system transcribes calls, analyzes conversations, scores agent performance, and identifies coaching opportunities automatically. It replaces traditional sampling methods with 100% call coverage.

2. How much does call quality monitoring automation cost?

Automated QA platforms typically cost $50-150 per agent monthly for cloud solutions. This includes transcription, scoring, and analytics features. Total implementation costs range from $100K-300K over three years including integration and training. ROI usually appears within 6-12 months through QA efficiency gains.

3. Can AI replace human QA analysts completely?

No, AI complements human analysts rather than replacing them. Automation handles repetitive scoring tasks while humans provide context, dispute resolution, and nuanced judgment. The best approach combines AI efficiency with human expertise for calibration and coaching.
