“You can’t fix what you don’t hear.”

And let’s be honest—no one’s got the time (or patience) to review every call.

That’s where call sampling in QA steps in. It helps you monitor what matters—agent mistakes, missed scripts, call center compliance gaps—without burning out your QA team.

But here’s the kicker: most teams still rely on outdated or random sampling.

The result? Missed red flags, biased reviews, and coaching that’s all over the place.

A McKinsey study shows that only 1–2% of customer interactions are manually reviewed—meaning 98% of your calls could be slipping through the cracks.

In this post, we’ll show you how to upgrade your sampling strategy using smarter methods.

A. What is call sampling in QA?

Call sampling is the process of selecting a small set of recorded customer calls from a larger pool to evaluate agent performance, quality assurance, or compliance.

Instead of listening to every call (which is impossible in high-volume contact centers), QA managers or supervisors randomly or strategically pick a few calls per agent to:

- Spot issues or areas of improvement

- Check for compliance with scripts, policies, or regulations

- Provide coaching and feedback

- Ensure consistent customer experience

Types of call sampling

1. Random sampling

Random sampling means selecting calls without any specific logic or pattern. Every call has an equal chance of being picked, regardless of its length, outcome, or agent.

How it’s done:

QA teams use tools or manual methods to pull a small percentage of calls (say, 5–10%) randomly from the entire call database or from each agent.

For example, you might decide to review 10% of calls per agent every week, and the system picks them randomly without considering what happened in the call.

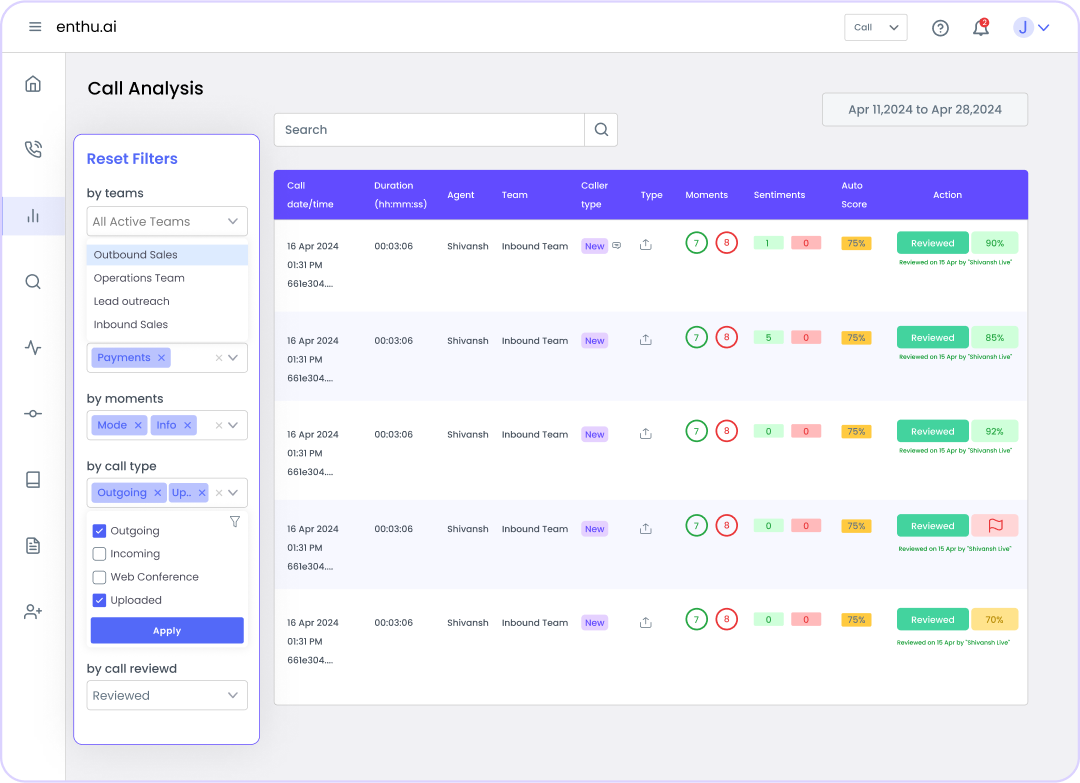
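A minimal sketch of per-agent random sampling in Python, assuming each call is a dict with a hypothetical `agent` key. It groups calls by agent, then draws roughly 10% of each agent's calls uniformly at random using the standard library's `random.Random.sample`. Rounding up (so every agent gets at least one call reviewed) is an illustrative choice, not a requirement.

```python
import math
import random
from collections import defaultdict


def sample_per_agent(calls, rate=0.10, seed=None):
    """Return roughly `rate` of each agent's calls, chosen uniformly at random."""
    rng = random.Random(seed)  # a seed makes the weekly pick reproducible

    # Group calls by agent so every agent is sampled at the same rate.
    by_agent = defaultdict(list)
    for call in calls:
        by_agent[call["agent"]].append(call)

    sample = []
    for agent_calls in by_agent.values():
        # Round up, and never go below one call per agent.
        k = max(1, math.ceil(len(agent_calls) * rate))
        # Every call in this agent's pool has an equal chance of being picked.
        sample.extend(rng.sample(agent_calls, k))
    return sample


# Illustrative data: 40 calls split evenly between two agents.
calls = [{"id": i, "agent": "A" if i % 2 else "B"} for i in range(40)]
picked = sample_per_agent(calls, rate=0.10, seed=42)
print(len(picked))  # 4 (10% of 20 calls = 2 per agent)
```

Because selection ignores call content, this is fair across calls, but it is also exactly why random sampling can miss rare, high-risk conversations.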

2. Rule-based sampling

Rule-based sampling uses predefined filters to select calls. You create rules based on factors like call duration, type, agent experience, or customer feedback.

How it’s done:

The QA team sets filters such as “calls over 10 minutes,” “calls marked as escalations,” or “first 5 calls of every new agent.” Calls that match these conditions are automatically picked for review.

For example, you might review all calls that lasted more than 15 minutes and involved a new product launch to ensure agents are explaining the features correctly.

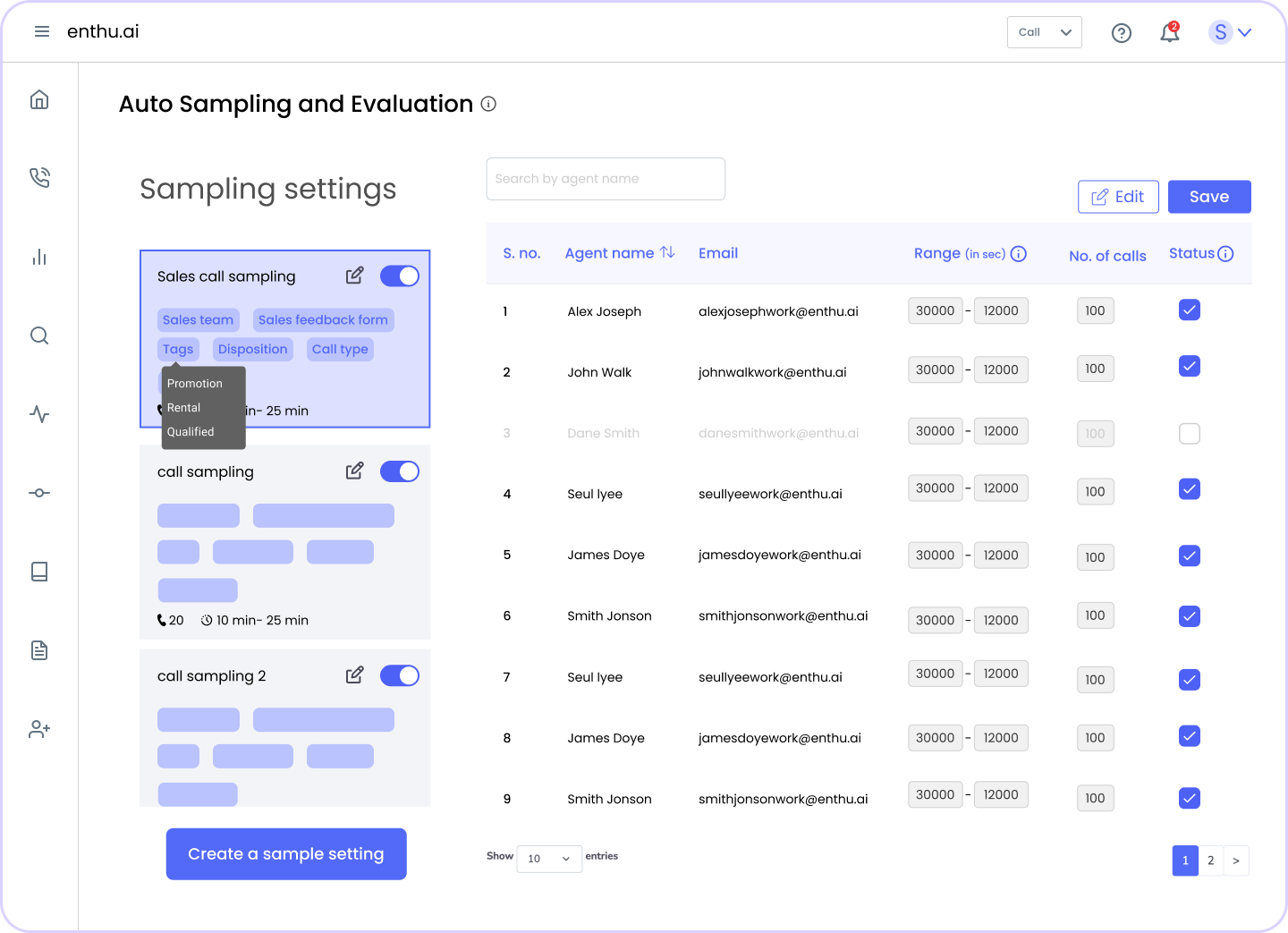
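Here is a minimal Python sketch of rule-based selection, assuming a hypothetical `Call` record with duration, topic, and escalation fields. Each rule is a named predicate mirroring the examples above (long calls about a new product launch, escalations), and a call is queued for review if any rule matches. Recording which rule fired makes it easier to explain later why a call was reviewed.

```python
from dataclasses import dataclass


@dataclass
class Call:
    # Hypothetical call record; field names are illustrative only.
    call_id: str
    agent: str
    duration_min: float
    topic: str
    escalated: bool = False


# Each rule is a named predicate; a call is selected if ANY rule matches.
RULES = {
    "long_launch_call": lambda c: c.duration_min > 15 and c.topic == "new_product_launch",
    "escalation": lambda c: c.escalated,
}


def rule_based_sample(calls, rules=RULES):
    """Return (call, matched_rule_names) for every call matching at least one rule."""
    selected = []
    for call in calls:
        matched = [name for name, rule in rules.items() if rule(call)]
        if matched:
            selected.append((call, matched))
    return selected


calls = [
    Call("c1", "ana", 18.0, "new_product_launch"),
    Call("c2", "ana", 4.0, "billing", escalated=True),
    Call("c3", "raj", 22.0, "billing"),             # long, but not a launch call
    Call("c4", "raj", 9.0, "new_product_launch"),   # launch call, but short
]
for call, why in rule_based_sample(calls):
    print(call.call_id, why)
# c1 ['long_launch_call']
# c2 ['escalation']
```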

3. Auto sampling (AI-driven sampling)

Auto sampling uses AI to automatically scan and select calls based on patterns, sentiment, keywords, or risk signals—without human-defined rules.

How it’s done:

AI tools analyze 100% of calls and flag the ones showing signs of non-compliance, negative sentiment, long silences, or missed scripts. These calls are then surfaced for QA review.

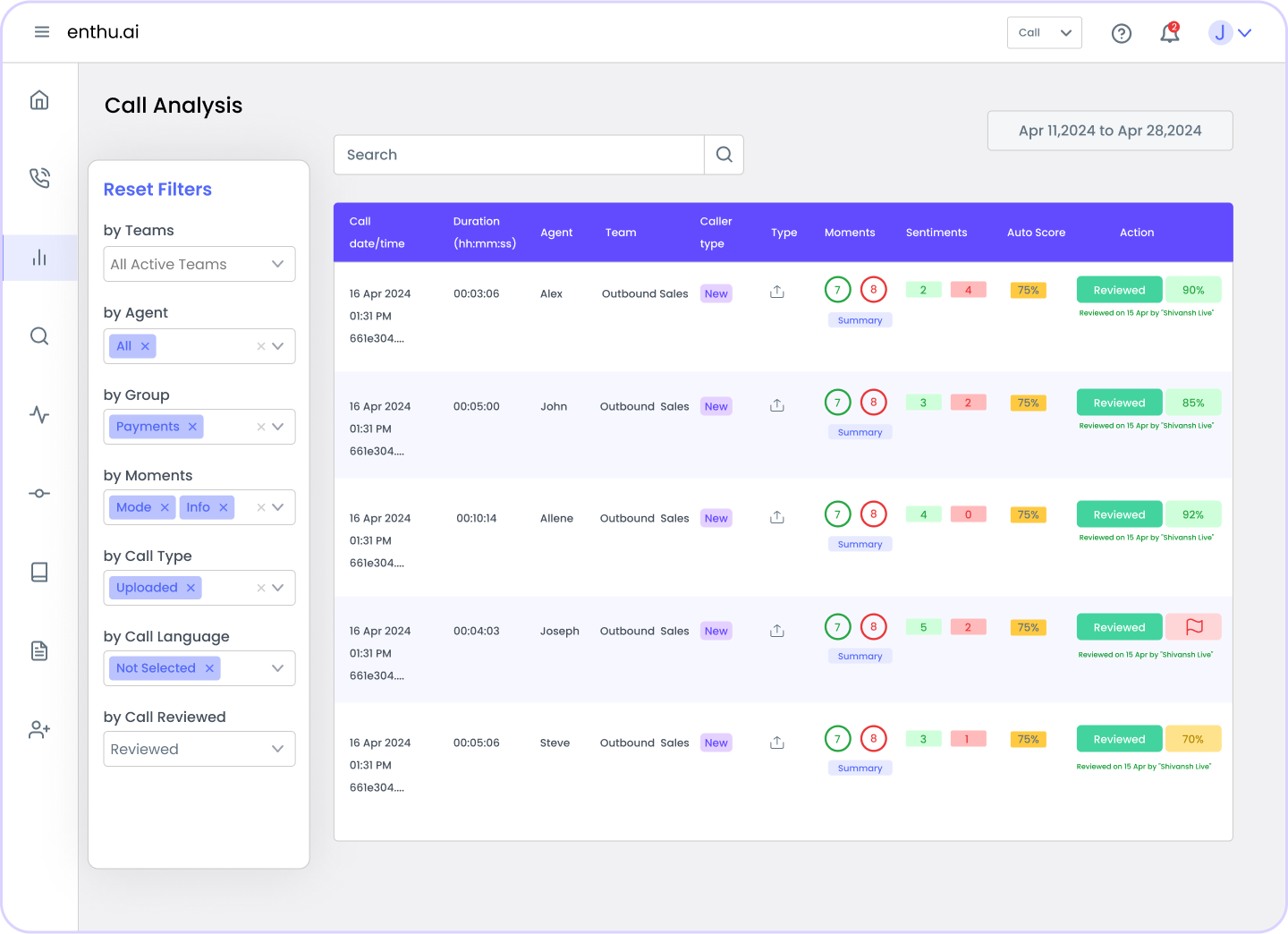

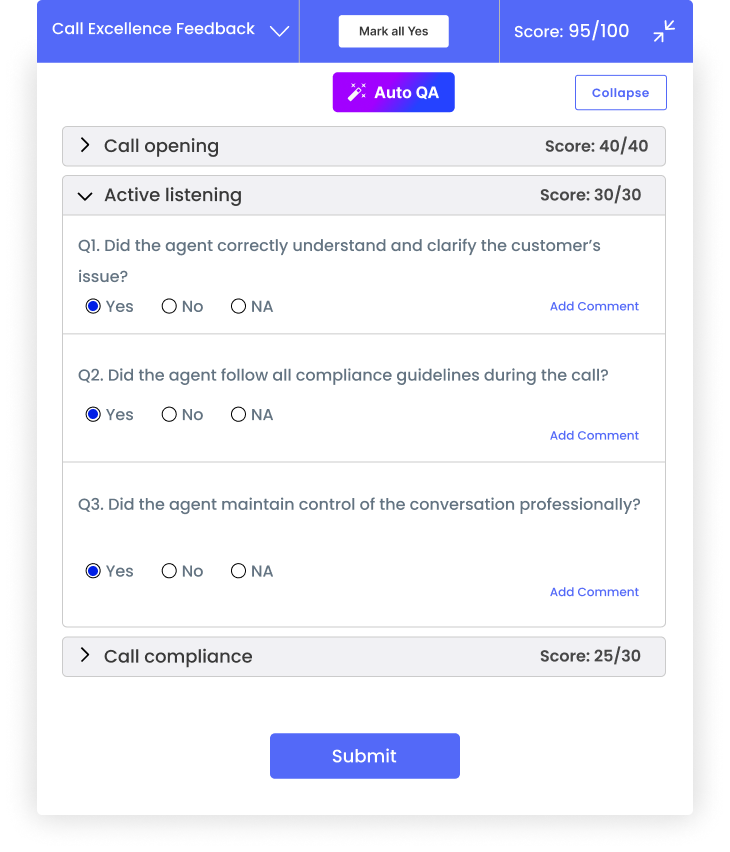

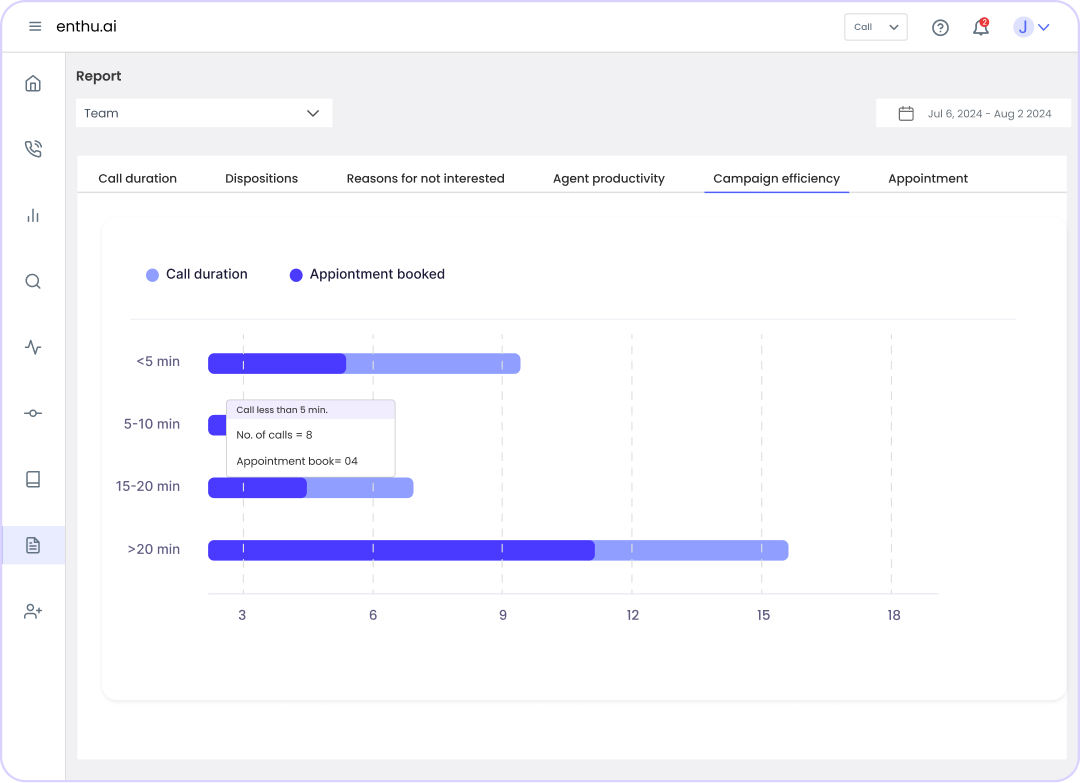

Enthu.AI automatically analyzes your sample calls, spots missed opportunities, and assigns scores.

B. Challenges with traditional call sampling

Imagine relying on a tiny fraction of calls to assess your entire team’s performance.

Traditional call sampling often focuses on a small percentage, leaving significant gaps in your quality assurance process.

This limited coverage can lead to missed call center compliance risks, inconsistencies in evaluations, and wasted time for QA teams.

With human bias and inefficiency creeping in, it’s clear that relying solely on traditional methods may not be the best way to ensure high-quality standards across your entire operation.

Let’s dive into the key challenges that come with this approach.

1. Limited coverage

Traditional call sampling typically focuses on reviewing a small fraction of all calls—commonly around 5-10%.

This means most calls are not reviewed, leaving gaps in quality assurance.

With limited coverage, there’s a risk of missing important calls that may contain serious compliance issues or performance gaps.

Why it’s a problem:

- Compliance risks: Regulations often require agents to deliver specific scripts, disclosures, and disclaimers. If a call that wasn’t sampled breaks these rules, it can result in fines or reputational damage.

- Performance gaps: The sample may miss recurring problems, such as agents struggling to handle specific objections, which could impact customer satisfaction and retention.

Let’s say an agent consistently mishandles calls involving a complex billing question. If only 5% of their calls are sampled, those calls may never make it into the review set, so the problem can go unnoticed for months and the agent never gets the right coaching to improve.
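To see why low coverage lets a pattern hide, here is a back-of-the-envelope model in Python. It assumes each call is sampled independently with probability p, so the chance that none of k problem calls is reviewed is (1 - p)^k, and the number of problem calls until the first one is caught follows a geometric distribution with mean 1/p. The figures used (10 problem calls, 2 such calls per week) are hypothetical.

```python
def miss_probability(sample_rate: float, problem_calls: int) -> float:
    """Chance that none of `problem_calls` lands in the sample.

    Assumes each call is sampled independently with probability `sample_rate`
    (a simplification of a fixed-size per-agent sample).
    """
    return (1 - sample_rate) ** problem_calls


def expected_weeks_to_first_catch(sample_rate: float, problem_calls_per_week: float) -> float:
    """Expected wait, in weeks, until the first problem call is sampled.

    The number of problem calls until the first sampled one is geometric
    with mean 1 / sample_rate; divide by how often such calls occur.
    """
    return (1 / sample_rate) / problem_calls_per_week


print(f"{miss_probability(0.05, 10):.2f}")               # 0.60
print(f"{expected_weeks_to_first_catch(0.05, 2):.1f}")   # 10.0
```

Under these assumptions, a mistake that comes up twice a week takes about ten weeks, on average, to show up in a 5% sample.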

2. Bias and inconsistency

With traditional call sampling, the selection process is often manual, or random but very small.

Manual selection in particular opens the door to bias.

A human reviewer may inadvertently favor certain agents or call types.

They might also overlook issues with less frequent call types, resulting in inconsistent quality assessments.

Why it’s a problem:

- Inconsistent QA: If certain agents or shifts are over-sampled or under-sampled, it could lead to inaccurate evaluations. Some agents may appear to be performing better than they are, while others may be unfairly penalized.

- Lack of comprehensive coverage: Some call categories (e.g., complex troubleshooting or escalations) may be overlooked, resulting in inconsistent performance monitoring across the team.

If a QA manager prefers longer calls, they may skip shorter ones. Quick, high-impact calls can be missed—especially those with compliance issues. This results in biased reviews and poor quality control.

3. High time cost for QA teams

Traditional sampling can be incredibly time-consuming, requiring QA teams to listen to, score, and analyze multiple calls.

On top of that, they must document feedback and provide actionable insights to agents.

Because calls are picked without regard to what happened in them, QA teams often end up spending a significant amount of time on calls that don’t reveal any major issues.

Why it’s a problem:

- Low productivity: With only a small sample being reviewed, QA teams spend substantial amounts of time evaluating calls that might have little to no impact on the agent’s performance or customer satisfaction.

- Inefficient resource allocation: As time is wasted on calls that don’t reveal much about performance or compliance, QA teams are left with less time to address more critical issues or work on meaningful coaching.

On a typical day, a QA team might have to review 50 calls manually, spending 10–30 minutes on each. That adds up to 500–1,500 minutes, or roughly 8 to 25 hours of reviewer time per day, and despite all that effort, only a small fraction of the calls that truly matter get evaluated.

4. Missed compliance risks and coaching opportunities

When traditional sampling methods only focus on a small subset of calls, there’s a high risk of missing serious compliance violations or coaching opportunities.

This is particularly dangerous in highly regulated industries like financial services or healthcare, where even a single overlooked mistake can lead to major fines or reputational harm.

Why it’s a problem:

- Regulatory violations: Calls that miss legal disclaimers, fail to meet regulatory standards, or involve prohibited topics may go undetected, putting the business at risk.

- Missed coaching: If an agent consistently fails to handle objections, but their calls aren’t included in the review sample, the agent misses valuable coaching that could improve their performance and the customer experience.

Imagine an agent in a financial call center. They forget to mention a required legal disclaimer during a loan conversation. That call isn’t in the 5% sample. The mistake goes unnoticed. The agent keeps repeating it. The company risks a legal issue later.

C. What are the smarter ways to sample calls?

Still relying on random picks?

That might have worked when you had 50 calls a day.

But today, you’re sitting on thousands of interactions.

Every missed call is a missed compliance risk, a coaching moment, or a CX red flag.

Smarter sampling isn’t just about reviewing more.

It’s about reviewing better—with purpose, filters, and automation.

In this section, we’ll break down how top QA teams are moving beyond guesswork and building a system that actually works.

1. Start with a goal, not a gut feeling

Randomly picking calls and hoping to “catch something” isn’t a strategy—it’s guesswork. And in high-stakes industries like finance, insurance, or healthcare, guesswork isn’t good enough.

Before you even hit “sample,” ask yourself:

What are we trying to learn?

Are you checking for:

- Compliance gaps (e.g., missed disclosures or disclaimers)?

- Agent behavior (tone, empathy, script adherence)?

- Call outcomes (was the query resolved, or did the caller hang up frustrated)?

Example

A QA manager at a lending firm wants to ensure agents aren’t skipping the “Do Not Call” disclosure.

Instead of pulling random calls, she filters for calls labeled as outbound sales.

Her goal is compliance, so her sampling strategy reflects that.

A study by SQM Group found that companies that tie QA sampling to clear business goals report 25% higher agent coaching effectiveness.

2. Don’t just go random—mix it up

Relying only on random sampling isn’t enough.

While it can uncover some hidden issues, it’s a shot in the dark.

To improve call quality monitoring, you need to mix it up. Combine random sampling with rule-based filters—like long calls, escalations, or repeat callers.

By blending randomness with rules, you create a more balanced approach. You’ll catch issues you may not have noticed and make your reviews more relevant to your goals.

Example

A QA team at a telecom company uses random sampling to get a broad view of agent performance. But they also filter for long calls or repeat callers.

Long calls may indicate problems that need deeper analysis, while repeat callers suggest unresolved issues.

I. Set up your system to randomly sample calls, but also add filters like:

- Long calls

- Escalations

- Repeat callers

II. Review both random and filtered calls to ensure you’re catching all potential issues.
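A minimal Python sketch of the blended approach, assuming call records are dicts with hypothetical `id`, `duration_min`, `escalated`, and `prior_calls_7d` fields. The rule filters mirror the ones above (long calls, escalations, repeat callers); the random slice is drawn only from calls the rules did not already pick, so the two review sets never overlap. The 10-minute threshold, 5% random rate, and generated data are illustrative.

```python
import random


def blended_sample(calls, rules, random_rate=0.05, seed=None):
    """Return (rule-matched calls, random slice of the remaining calls)."""
    rng = random.Random(seed)
    flagged = [c for c in calls if any(rule(c) for rule in rules.values())]
    flagged_ids = {c["id"] for c in flagged}
    # Draw the random portion only from calls the rules did not pick,
    # so it still covers the "unremarkable" calls the rules ignore.
    rest = [c for c in calls if c["id"] not in flagged_ids]
    k = round(len(rest) * random_rate)
    return flagged, rng.sample(rest, k)


rules = {
    "long_call": lambda c: c["duration_min"] > 10,
    "escalation": lambda c: c["escalated"],
    "repeat_caller": lambda c: c["prior_calls_7d"] > 0,
}

# Synthetic calls for illustration only.
data_rng = random.Random(7)
calls = [
    {
        "id": i,
        "duration_min": data_rng.uniform(1, 15),
        "escalated": data_rng.random() < 0.05,
        "prior_calls_7d": data_rng.choice([0, 0, 0, 1]),
    }
    for i in range(200)
]
flagged, random_pick = blended_sample(calls, rules, random_rate=0.05, seed=1)
print(len(flagged), "rule-matched,", len(random_pick), "random")
```

Keeping a random slice alongside the rules gives you a baseline for calls no rule would ever surface.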

3. Focus on high-risk, high-impact interactions

Not all calls are created equal. Some interactions carry more weight than others, especially when they involve complaints, refunds, or complex issues.

These are the calls that can make or break customer satisfaction and compliance.

By prioritizing high-risk, high-impact calls, you ensure that you’re addressing the most critical areas first.

It’s not just about quantity; it’s about quality—and the risk involved in missing these calls can have serious consequences.

Example

A QA manager at a retail company prioritizes calls related to refund requests or complaints about defective products.

These calls often indicate potential customer churn, so they require extra attention to ensure resolution and satisfaction.

I. Identify calls that could have the biggest impact, like:

- Complaints

- Refund requests

- Complex issues

II. Focus on these calls first to identify trends, compliance issues, or training needs.
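One way to put "focus on these calls first" into practice is a simple weighted priority queue. The sketch below assumes calls already carry topic tags (for example from a rule or AI tagging step) and uses hypothetical weights; calls are ranked by the sum of their tag weights, and calls with no high-risk tags are left out.

```python
# Hypothetical risk weights; tune these to your own business priorities.
RISK_WEIGHTS = {
    "complaint": 3,
    "refund_request": 3,
    "defective_product": 2,
    "complex_issue": 2,
}


def risk_score(tags):
    """Sum the weights of a call's tags; unknown tags count as zero."""
    return sum(RISK_WEIGHTS.get(tag, 0) for tag in tags)


def review_queue(calls, limit):
    """Return up to `limit` call ids, highest risk first; zero-risk calls are skipped."""
    scored = [(risk_score(c["tags"]), c["id"]) for c in calls]
    scored = [pair for pair in scored if pair[0] > 0]
    # Highest score first; ties broken by call id so the order is stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [call_id for _, call_id in scored[:limit]]


calls = [
    {"id": "c1", "tags": ["billing"]},
    {"id": "c2", "tags": ["refund_request", "defective_product"]},
    {"id": "c3", "tags": ["complaint"]},
    {"id": "c4", "tags": ["complex_issue"]},
]
print(review_queue(calls, limit=3))  # ['c2', 'c3', 'c4']
```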

4. Audit more when there’s change

Change brings opportunity, but it also brings risk.

Whether you’re onboarding new agents, launching a new campaign, or implementing a new process, transitions are critical times to audit more.

Sampling more calls during these periods helps you catch potential issues early before they become bigger problems.

In times of change, things can slip through the cracks—missed training, unclear messaging, or misunderstood procedures.

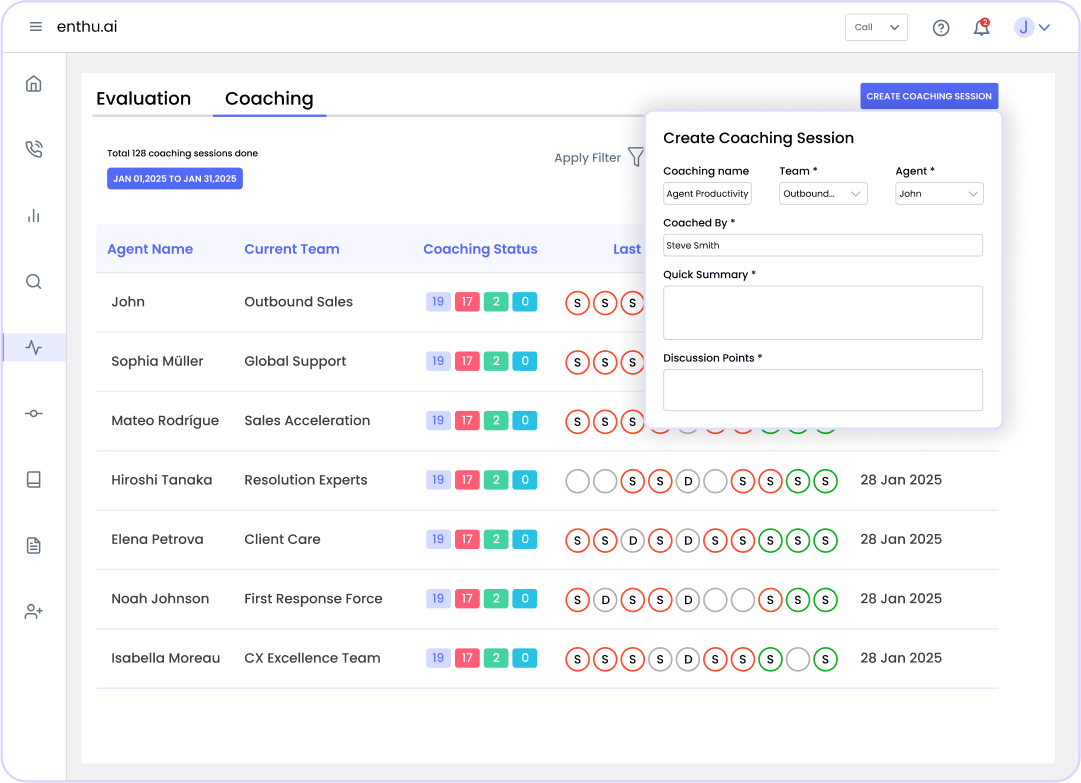

By identifying common mistakes or gaps in understanding early on, you can craft coaching sessions that focus on exactly what’s needed, helping agents improve faster and more effectively.

Example

When a financial firm launches a new loan product, they increase their call sampling to monitor agent knowledge.

Any knowledge gaps are turned into coaching sessions focused on the new product, so agents get up to speed early in the rollout.

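I. During major transitions, ramp up your call auditing efforts.

II. Focus on:

- Calls handled by newly onboarded agents

- Calls about a new campaign or product

- Calls that involve a newly introduced process

III. Create coaching sessions based on your findings to address gaps early.

IV. Ensure agents get the guidance they need to adapt to changes and maintain high-quality interactions.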
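A minimal sketch of "audit more during change" as a rate rule, assuming you know each agent's start date and whether a launch or process change is underway. The base rate, boosted rate, and 30-day new-agent window are hypothetical values to tune, not recommendations.

```python
from datetime import date

BASE_RATE = 0.05      # hypothetical steady-state review rate
CHANGE_RATE = 0.20    # hypothetical boosted rate during transitions
NEW_AGENT_DAYS = 30   # hypothetical window during which an agent counts as "new"


def sampling_rate(agent_start: date, today: date, in_launch_window: bool) -> float:
    """Boost the review rate for new agents or during a launch or process change."""
    is_new_agent = (today - agent_start).days < NEW_AGENT_DAYS
    if is_new_agent or in_launch_window:
        return CHANGE_RATE
    return BASE_RATE


today = date(2024, 6, 15)
print(sampling_rate(date(2024, 6, 1), today, in_launch_window=False))   # 0.2 (new agent)
print(sampling_rate(date(2023, 1, 10), today, in_launch_window=False))  # 0.05
print(sampling_rate(date(2023, 1, 10), today, in_launch_window=True))   # 0.2 (launch)
```

The returned rate can feed straight into whatever random or rule-based sampler you already use.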

5. Use AI to surface what matters

Manually sifting through thousands of calls? That’s not scalable. Let AI-powered QA tools do the heavy lifting.

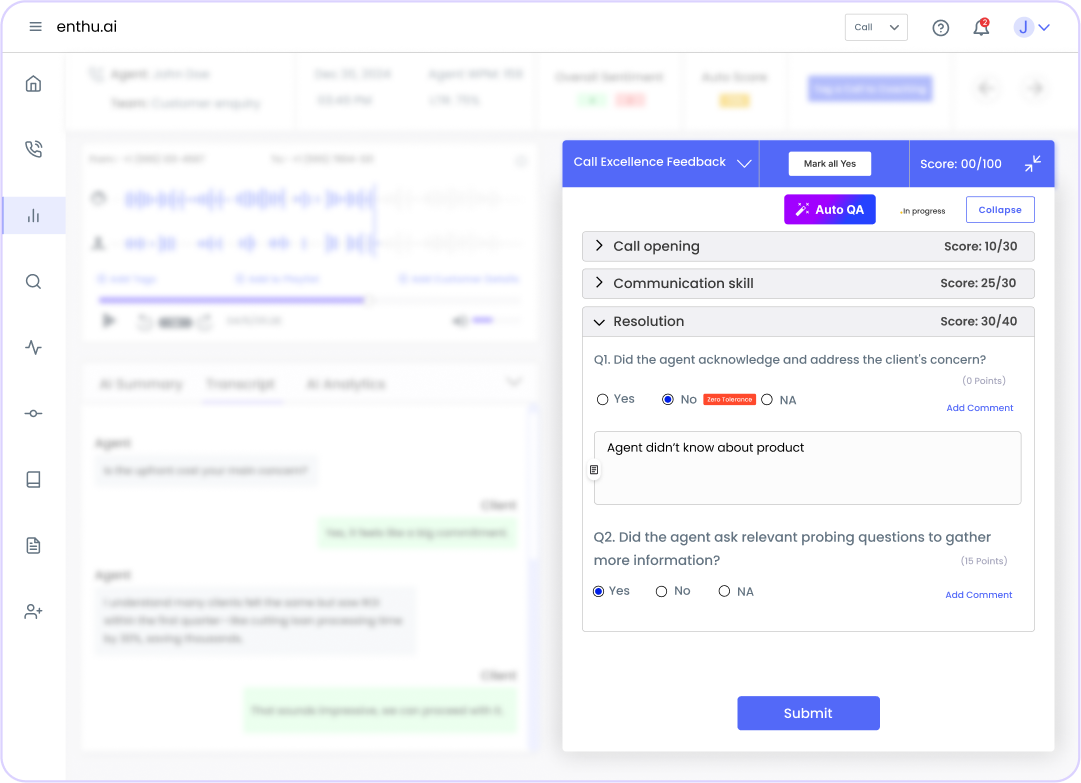

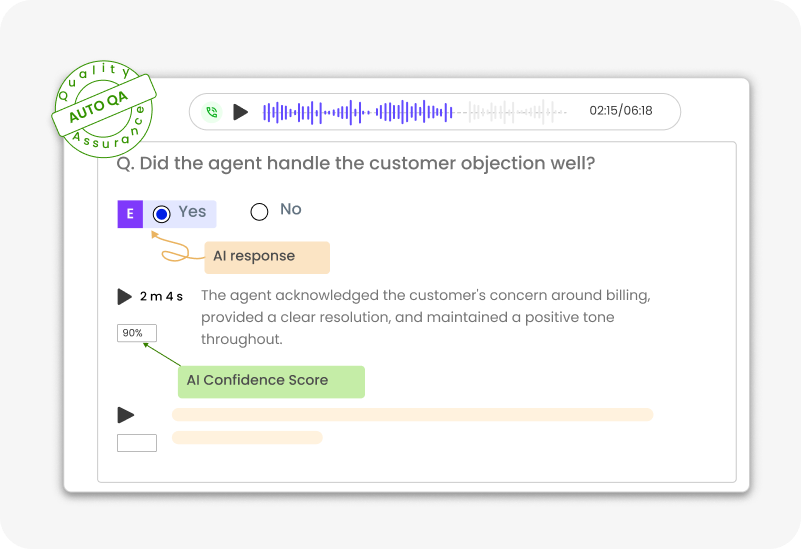

Modern QA tools—like Enthu.AI—can automatically flag risky interactions, detect negative sentiment, and catch missed compliance phrases, saving your team hours of grunt work.

AI doesn’t just speed things up—it adds precision. Instead of randomly hoping to catch issues, you’re alerted to the exact moments that need attention.

This means your QA team can focus on high-priority calls and spend more time coaching, not combing through transcripts.

Example

A consumer brand cut QA review time by 60% after using Enthu.AI to auto-flag high-risk calls.

Instead of random sampling, their team focused only on calls with compliance gaps, escalations, or negative sentiment—saving hours and speeding up coaching.

6. Keep an eye on the QA score spread

Averages can lie. Just because your team’s average QA score is 85% doesn’t mean everyone’s doing fine.

You need to look at the spread—who’s crushing it, and who’s slipping through the cracks.

Outliers tell a story. One agent consistently scoring low? Might need coaching.

One agent scoring too high? Maybe their calls aren’t being reviewed critically.

Trends over time can reveal shifts in training needs, process gaps, or even burnout.

Example

A QA lead notices that while the team average is solid, two new hires are trailing below 70%.

Instead of brushing it off, she digs in, reviews their flagged calls, and builds a custom coaching plan to help them improve fast.
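Here is a small Python sketch of looking past the average, using the standard library's `statistics` module. It assumes `scores` maps each agent to their recent QA scores; it reports the team mean and the population standard deviation of agent averages, then lists agents below a floor or above a ceiling. The 70 floor echoes the example above; the 98 ceiling (a prompt to double-check unusually high scores) and the sample data are illustrative assumptions.

```python
from statistics import mean, pstdev


def score_spread(scores, floor=70, ceiling=98):
    """Team mean, spread of agent averages, and agents outside [floor, ceiling]."""
    agent_avg = {agent: mean(s) for agent, s in scores.items()}
    avgs = list(agent_avg.values())
    team_mean = mean(avgs)
    spread = pstdev(avgs)  # how far agent averages sit from the team mean
    low = sorted(a for a, avg in agent_avg.items() if avg < floor)
    high = sorted(a for a, avg in agent_avg.items() if avg > ceiling)
    return team_mean, spread, low, high


scores = {
    "ana": [88, 92, 90],
    "ben": [91, 89, 93],
    "cho": [99, 100, 98],
    "new_hire_1": [65, 70, 66],
    "new_hire_2": [68, 69, 67],
}
team_mean, spread, low, high = score_spread(scores)
print(f"team mean {team_mean:.1f}, spread {spread:.1f}")  # team mean 83.0, spread 13.0
print("below floor:", low)     # below floor: ['new_hire_1', 'new_hire_2']
print("above ceiling:", high)  # above ceiling: ['cho']
```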

7. Track & tweak monthly

Sampling isn’t a “set it and forget it” game.

What worked last quarter may not work today. New call patterns, new products, or even a spike in customer complaints should trigger a review of your strategy.

Your QA sampling plan should evolve based on what’s happening on the floor.

Check in monthly: Is your current sampling method surfacing the right insights? Are you hitting the right balance between random and rule-based calls?

Example

A support team launches a new chatbot and notices a rise in calls that start with “I tried the bot, but…” They tweak their sampling filters to prioritize these calls and uncover key friction points.

D. How is AI revolutionizing call sampling?

Manual QA sampling has always been a game of trade-offs—limited time, limited staff, limited visibility.

You pick a few calls, hope they’re representative, and try to spot red flags manually. But with AI in the mix, the game changes completely.

Now, you’re not choosing which calls to listen to. You’re choosing what to act on, because AI handles the rest. Here’s how:

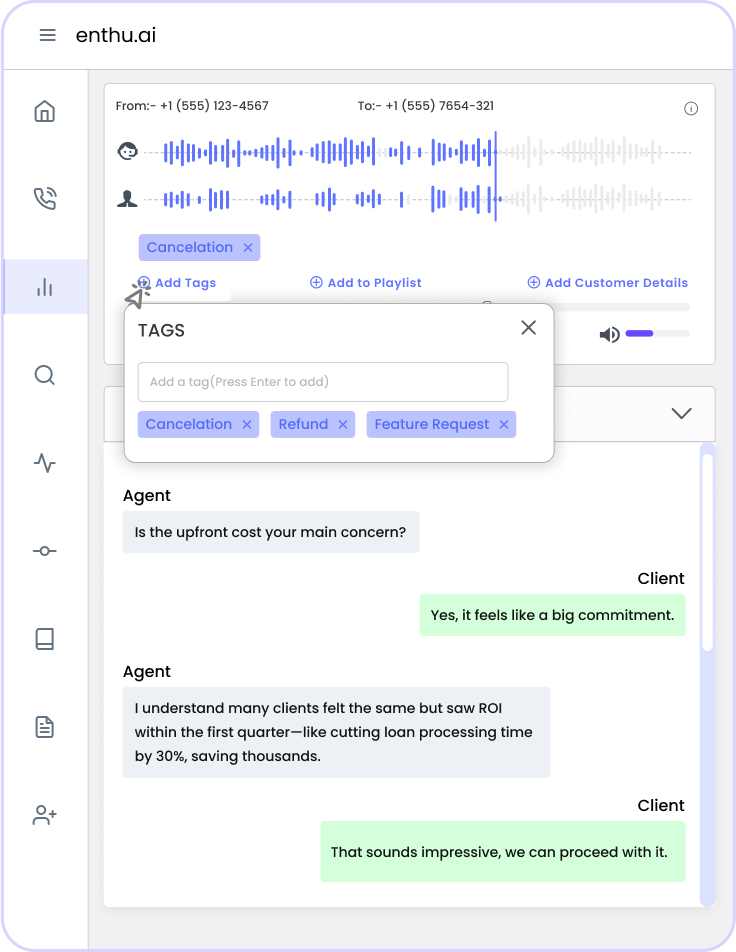

1. 100% call coverage with auto-tagging

Every single call is tagged automatically based on what’s happening in the conversation—tone, keywords, topics, sentiment, compliance language, and more. No more guessing which calls are “interesting.”

Example: Enthu.AI tags all calls that mention refunds, cancellations, or customer dissatisfaction. These calls surface instantly, no matter when they came in or who handled them.
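To make the tagging step concrete, here is a deliberately simple keyword-based stand-in written in Python. It is a swapped-in technique, not a description of how Enthu.AI or other AI tools tag calls (real systems typically use trained models rather than fixed keyword lists); it only shows the shape of the idea: every transcript gets tags, so refund, cancellation, and dissatisfaction calls can be filtered instantly. The tag names and regex patterns are hypothetical.

```python
import re

# Hypothetical tag -> keyword patterns; a simplified stand-in for model-based tagging.
TAG_PATTERNS = {
    "refund": r"\brefunds?\b|\bmoney back\b",
    "cancellation": r"\bcancel(?:l?ed|l?ing|lation)?\b",
    "dissatisfaction": r"\b(?:unhappy|frustrated|disappointed|terrible)\b",
}
COMPILED = {tag: re.compile(p, re.IGNORECASE) for tag, p in TAG_PATTERNS.items()}


def tag_call(transcript: str) -> set[str]:
    """Return every tag whose pattern appears somewhere in the transcript."""
    return {tag for tag, pattern in COMPILED.items() if pattern.search(transcript)}


def tag_all(transcripts: dict[str, str]) -> dict[str, set[str]]:
    """Tag every call (100% coverage), keyed by call id."""
    return {call_id: tag_call(text) for call_id, text in transcripts.items()}


transcripts = {
    "c1": "I want to cancel my plan and get a refund.",
    "c2": "Thanks, that fixed it!",
    "c3": "Honestly I'm really frustrated with the delivery.",
}
tags = tag_all(transcripts)
print(sorted(cid for cid, t in tags.items() if t))  # ['c1', 'c3']
```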

2. Identify risky or non-compliant calls faster

AI can detect missed disclosures, aggressive tone, overtalking, or regulatory gaps way faster than a human ear.

These calls get flagged and pushed to your QA dashboard for immediate review—so you’re catching issues before they turn into customer complaints or legal trouble.

Bonus: You can also filter by repeat issues—like agents who consistently miss certain phrases or teams where escalations are unusually high.

3. Reduce manual effort in filtering and scoring

No more hunting for the “right” calls to audit. AI does the first pass—scoring conversations, tagging key behaviors, flagging outliers.

Your QA team just focuses on what matters: giving feedback, coaching reps, and improving outcomes.

Example: A QA manager sets Enthu.AI to flag all outbound sales calls over 5 minutes where the compliance checklist wasn’t completed.

That filtered list becomes her review queue automatically.

4. From data to action—fast

The real value isn’t just in tagging calls—it’s in spotting patterns. Enthu.AI users get dashboards that show trends across call types, agents, teams, and issues.

So, instead of reacting to problems, you’re proactively adjusting training, refining scripts, or updating your QA focus areas.

AI doesn’t replace your QA team—it makes them sharper, faster, and more focused.

You go from random call reviews to laser-focused analysis and coaching.

That’s how modern QA teams scale, without sacrificing quality.

Conclusion

Stop guessing. Start sampling with strategy.

You wouldn’t ignore 98% of your revenue reports—so why do it with your calls?

The truth is, random picks and outdated sampling methods can’t keep up with today’s high-volume, high-risk contact centers.

If your QA is still running on instinct and spreadsheets, you’re not just missing calls—you’re missing patterns, problems, and people who need help.

It’s time to sample smarter:

- Anchor every review to a business goal.

- Combine random with rule-based and AI-powered methods.

- Prioritize high-risk calls before they turn into compliance nightmares.

Your QA team doesn’t need to work harder—they need to work sharper.

FAQs

1. What is call sampling?

Call sampling is the process of selecting specific customer calls for QA review. It helps teams evaluate agent performance, spot compliance issues, and identify coaching opportunities without listening to every single call.

2. What are the different types of call sampling methods?

There are three main types: random sampling, rule-based sampling, and AI-driven (auto) sampling. Each serves a different purpose, from ensuring fairness to focusing on high-impact interactions.

3. How can QA teams improve their call sampling strategy?

Smarter sampling combines human judgment with tech. Here’s how:

- Start with a QA goal: Know what you’re solving—compliance, CX, coaching?

- Mix sampling methods: Don’t rely solely on random; combine rule-based + AI for depth.

- Focus on edge cases: Prioritize calls with escalations, complaints, or legal exposure.

- Audit more during change: When launching a new campaign or product, sample more aggressively.

- Track trends: Use QA score spreads to find agents or processes slipping through the cracks.

- Use AI to scale: Let tools like Enthu.AI auto-tag and surface what your team would otherwise miss.

4. How does AI improve call sampling in contact centers?

AI enables 100% call coverage, auto-tags risky calls, reduces manual work, and helps QA teams move from reactive to proactive decision-making.
