Ever sat in a morning huddle and thought, “Why are we still guessing?”

We have call recordings, QA scorecards, even post-call surveys, yet we still miss what’s actually happening on the floor. An angry customer walks.

A rep skips a legal disclaimer.

A golden upsell moment slips away. And we don’t find out until it’s too late.

The truth? Manual QA can’t keep up.

We’re reviewing a handful of calls per agent, per month – if that.

And even then, it’s all hindsight. By the time we catch the issue, the damage is done.

That’s why more call centers are turning to speech analytics.

It doesn’t just listen. It understands.

In this guide, I’ll break down how speech analytics works, what problems it solves, and how you can use it to coach faster.

Let’s dive in.


A. What Is Speech Analytics in a Call Center?

At its core, speech analytics is the process of converting customer calls into searchable, analyzable data.

It listens to every interaction between your agents and customers, transcribes the conversation, and then uses AI to spot patterns – good, bad, and risky.

As a call center manager, think of it like a second set of eyes on the floor – but smarter, faster, and working 24/7.

Here’s what it helps you catch:

1. Keyword and Phrase Detection

Want to know how often customers mention “cancel,” “late fee,” or “not happy”? Speech analytics tracks it – across every call.

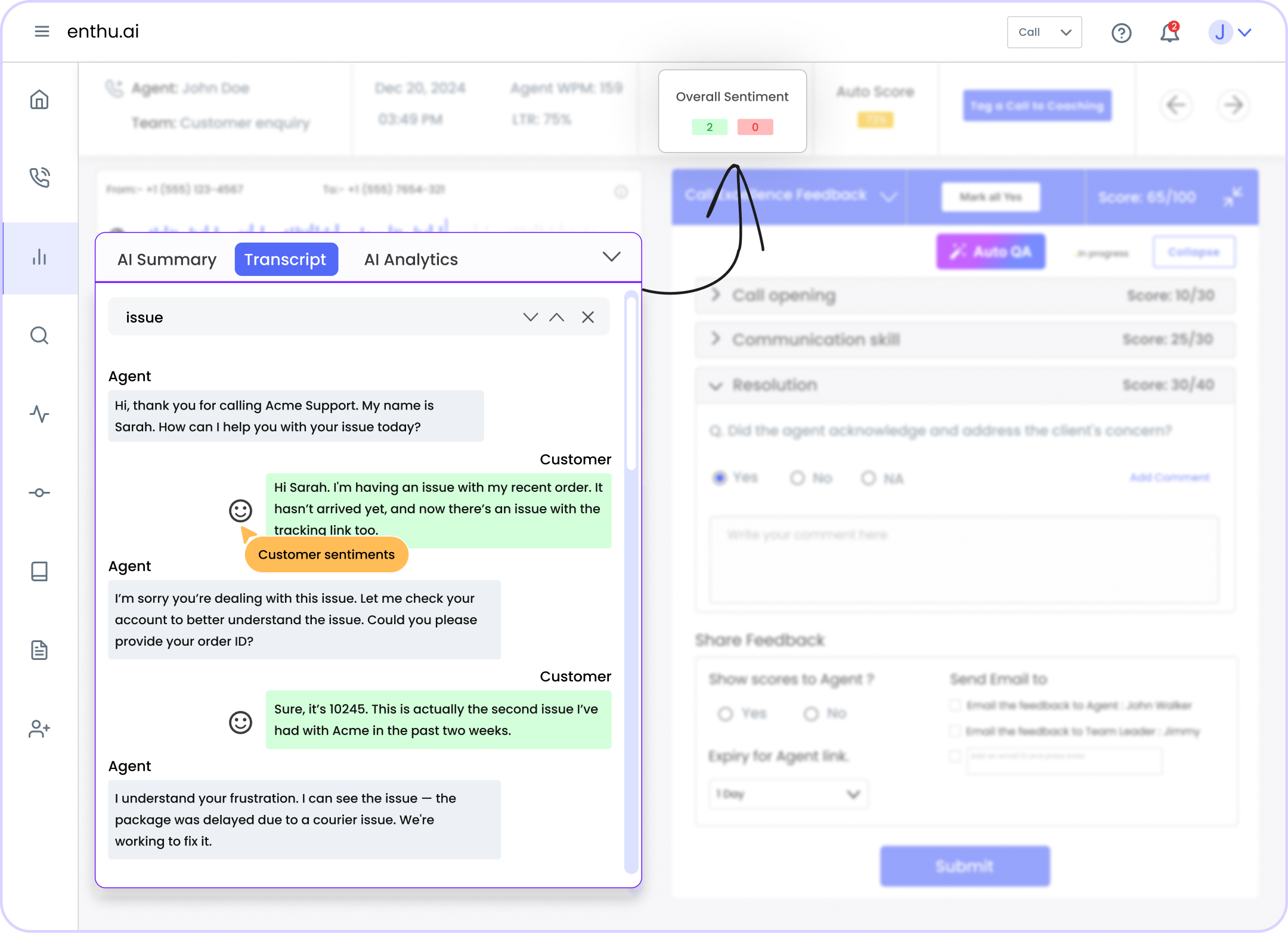
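To make phrase tracking concrete, here is a minimal sketch in Python. It assumes you already have call transcripts as plain strings. The watch list and the function name `calls_mentioning` are made up for illustration and are not taken from any particular speech analytics product.

```python
import re
from collections import Counter

# Hypothetical watch list, taken from the example phrases above.
WATCH_PHRASES = ["cancel", "late fee", "not happy"]


def calls_mentioning(transcripts, phrases=WATCH_PHRASES):
    """Count how many calls mention each phrase at least once."""
    # Whole-word, case-insensitive patterns: "cancel" will not match
    # "cancellation". Add variants to the watch list if you want them counted.
    patterns = {
        phrase: re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        for phrase in phrases
    }
    counts = Counter()
    for text in transcripts:
        for phrase, pattern in patterns.items():
            if pattern.search(text):
                counts[phrase] += 1
    return counts


# Made-up transcripts for illustration only.
transcripts = [
    "I want to cancel. I was charged a late fee twice.",
    "Honestly I'm not happy with the service.",
    "Why is there a late fee on my bill?",
]
counts = calls_mentioning(transcripts)
for phrase in WATCH_PHRASES:
    print(f"{phrase}: mentioned in {counts[phrase]} of {len(transcripts)} calls")
```

Counting calls rather than raw mentions keeps one very repetitive caller from skewing the trend. A real platform would also handle misspellings and transcription errors, which this sketch ignores.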

2. Emotion and Tone Monitoring

It doesn’t just hear what’s said – it picks up how it’s said. Was the customer frustrated? Did the agent sound dismissive? These tone shifts matter, especially in escalations.

3. Silence and Talk Ratios

Long pauses or one-sided conversations often point to confusion or disengagement. Speech analytics helps you catch those patterns early.
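Here is a rough sketch of how talk ratios and long pauses could be computed. It assumes the transcription step gives you speaker-labelled segments with start and end times in seconds. The `Segment` type, the `talk_stats` function, and the 5-second pause threshold are all assumptions for illustration, not how any specific tool does it.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str  # e.g. "agent" or "customer"
    start: float  # seconds from the start of the call
    end: float


def talk_stats(segments, silence_threshold=5.0):
    """Return each speaker's share of talk time and any long silent gaps."""
    ordered = sorted(segments, key=lambda s: s.start)

    # Total speaking time per speaker. Overlapping speech counts for both.
    talk_time = {}
    for seg in ordered:
        duration = seg.end - seg.start
        talk_time[seg.speaker] = talk_time.get(seg.speaker, 0.0) + duration
    total = sum(talk_time.values())
    shares = {spk: t / total for spk, t in talk_time.items()} if total else {}

    # A silence is a gap between the latest end seen so far and the next start.
    long_silences = []
    latest_end = ordered[0].end if ordered else 0.0
    for seg in ordered[1:]:
        if seg.start - latest_end >= silence_threshold:
            long_silences.append((latest_end, seg.start))
        latest_end = max(latest_end, seg.end)
    return shares, long_silences


# Made-up call for illustration only.
call = [
    Segment("agent", 0.0, 10.0),
    Segment("customer", 11.0, 15.0),
    Segment("agent", 23.0, 29.0),
]
shares, silences = talk_stats(call)
print(shares)
print(silences)
```

In this made-up call the agent does most of the talking and there is one 8-second gap, which is the kind of pattern worth a second look. The right threshold depends on your call type, so treat 5 seconds as a placeholder.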

For example: You manage a team handling personal loan calls. Every agent is supposed to state an APR disclaimer – but one rep keeps skipping it under pressure. With speech analytics, you don’t need to wait for a complaint or hope QA catches it. The system flags it instantly, so you can coach the agent before it turns into a compliance issue. They might sound similar, but speech analytics and text analytics solve very different problems inside your contact center. This focuses on voice-based conversations – phone calls between your agents and customers. It captures the tone, pace, emotion, and delivery, not just the words spoken. It helps you: In short, it gives you a full picture of the interaction – from sentiment to compliance risks. Text analytics focuses on written interactions – like live chat, SMS, emails, or support tickets. It scans for keywords, customer intent, and patterns in written feedback. It helps you: Which One Should You Use? Both are valuable – but they serve different teams. If voice calls are your primary channel, speech analytics is your frontline tool for managing agent performance and customer experience. If you’re handling large volumes of written tickets or chats, text analytics adds that missing layer of insight. The real power? When both are used together you get complete visibility across every customer interaction, regardless of channel. Enthu.AI brings together voice, chat, and email analytics, giving you complete visibility across every customer conversation. AI voice analytics may sound complex, but it follows a simple, logical flow that transforms conversations into insights you can act on. Here’s how it works, step by step: Every voice analytics journey begins with a simple phone call. Whether inbound or outbound, the system captures both sides of the conversation – agent and customer. This audio is pulled directly from your call recording system or dialer integration. 
Most platforms integrate with your call recording tools, so this step happens in the background. Think of it as your raw material, just like a recorded meeting or voicemail, but at scale. Once the audio is captured, it’s fed into an AI-based speech-to-text engine like Enthu.AI. This tech listens to the conversation and converts it into a written transcript, line by line, attached to every spoken sentence. Why? Because you can: For example, if a customer says, “This is the third time I’ve called,” with call transcript, your QA analyst can jump right there instead of scrubbing through 20 minutes of audio. Now that we have text, the real power kicks in. Using Natural Language Processing (NLP), the system scans the conversation for patterns, behaviors, and red flags. It does this by tagging: Keywords & Phrases: Specific terms like “cancel my account,” “late fee,” “not happy,” or any custom phrases you define. Sentiment & Emotion: Was the customer angry? Did their tone shift halfway through? Did the agent sound rushed, rude, or robotic? Emotion detection captures those signals. Compliance Flags: If an agent misses a required statement (like a legal disclosure or security check), it gets flagged. You no longer have to rely on random audits. Silence & Talk Patterns: It even analyzes how much each person spoke, whether the agent interrupted, or if there were long awkward silences (which usually signal confusion or disengagement). This is what makes AI voice analytics smarter than basic call recordings. It’s not just listening – it’s interpreting. After tagging and analysis, the data is visualized in dashboards and reports. This is where you, as a manager or QA lead, come in. You can: Think of it like a control panel for your floor – except instead of relying on 5–10 audited calls a week, you’re looking at 100% of customer conversations. Speech analytics isn’t just a nice-to-have tech add-on. It’s a daily operations tool that helps you solve real problems – fast. 
From managing compliance to coaching at scale, here are the top ways call centers are using it today. You don’t have time to manually listen to every call, but speech analytics does. It highlights: Now, you’re not coaching based on gut feel, you’re using hard data to back it up. Need agents to read a legal disclaimer or ask security questions on every call? Speech analytics flags every call where required language is missing or incorrect. Whether you’re in finance, healthcare, or insurance, this helps you: When a customer says things like “I’ve called three times already” or “I’m done with this” you want to know immediately. Speech analytics alerts supervisors to: This allows real-time intervention and saves customer relationships before they walk. Tired of hearing “handle time is high” or “CSAT is low” without context? Speech analytics helps you dig into why: Instead of guessing, you fix the real problem at the source. Want more empathy, better listening, or fewer robotic scripts? Speech analytics tracks soft skills: It gives you measurable coaching points instead of vague feedback like “be more empathetic.” Analyzing calls at scale isn’t just about installing a single tool. It involves a tech stack that works together to capture, process, analyze, and act on voice data. Here are the core components: This is your starting point. You need a reliable system to capture inbound and outbound calls in real-time or for later analysis. Examples: Must-Have: High-quality audio capture and ability to record both sides of the conversation (agent + customer). This is the central command center for managers and QA teams. It connects the transcript, scoring rules, coaching workflows, and dashboards. Examples: Must-Have: Automated QA scoring, agent scorecards, compliance alerts, coaching workflows. This layer analyzes the text for intent, sentiment, keywords, and compliance markers. It’s where raw transcripts become actionable data. 
Examples: IBM Watson NLP Google Natural Language API Proprietary NLP engines in tools like Enthu.AI, or CallMiner Must-Have: Emotion detection, keyword spotting, phrase patterns, talk ratios, silence analysis. These tools connect your analysis back to the customer journey—so you can tie behavior insights to actual cases or accounts. Examples: Must-Have: Ability to sync call summaries, flags, and sentiment data back to customer records. You’ll want visual tools to track performance trends, sentiment shifts, keyword spikes, and team-level QA scores. Examples: Must-Have: Customizable filters, trend analysis, agent/team breakdowns, export/share options. Speech analytics is great, but it’s what you do with it that counts. Start simple: use the data to have better coaching conversations. Show agents where they’re doing well and where they’re falling short based on actual calls, not opinion. Keep the feedback flowing. Don’t wait for QA to catch up. If something’s off, fix it now. If someone’s improving, let them know. Also, pay attention to patterns. If the same issue shows up across the team, it’s not just an agent problem – it’s a process issue. Tweak the script. Update the training. Make the job easier to do right. The goal isn’t just cleaner dashboards. It’s a stronger, smarter floor. 1. What is speech analytics? Speech analytics in call centers helps enhance customer service by monitoring agent performance, identifying compliance issues, and uncovering customer insights, enabling data-driven decisions to improve operations and customer satisfaction. 2. What are the metrics of speech analytics? Sentiment analysis, silence/overtalk rate, keyword frequency, conversation duration, First conversation Resolution (FCR), customer satisfaction (CSAT), and compliance adherence are some of the speech analytics measures. These metrics help evaluate call quality, customer experience, and agent performance. 3. What is an example of speech analytics? 
Sentiment analysis of customer care call recordings is one use of speech analytics that detects emotions. Companies can measure customer satisfaction and optimize agent responses to improve service quality by identifying phrases like “frustrated” or changes in tone.B. Speech Analytics vs. Text Analytics: What’s the Difference?

1. Speech Analytics

2. Text Analytics

Feature / Focus Area Speech Analytics Text Analytics Channel Voice calls (inbound/outbound) Chat, SMS, email, support tickets Data Source Live or recorded phone conversations Written communication logs Tone & Emotion Detection ✅ Yes – detects voice tone, emotion, pace ❌ Limited – relies on text cues (e.g. ALL CAPS) Silence & Talk Ratio Analysis ✅ Yes ❌ No Keyword/Phrase Detection ✅ Yes ✅ Yes Use Cases Compliance, coaching, escalations, sentiment Ticket routing, FAQ mining, customer feedback Best For Phone-heavy call centers (sales, support) Digital-first teams (chat/email support) Real-Time Analysis ✅ Available in many tools ✅ Available in chat and bot tools Complexity of Setup Moderate (requires call recordings, voice engine) Lower (text is easier to ingest/analyze) C. How Does AI Voice Analytics Work?

Step 1: The Call Is Captured

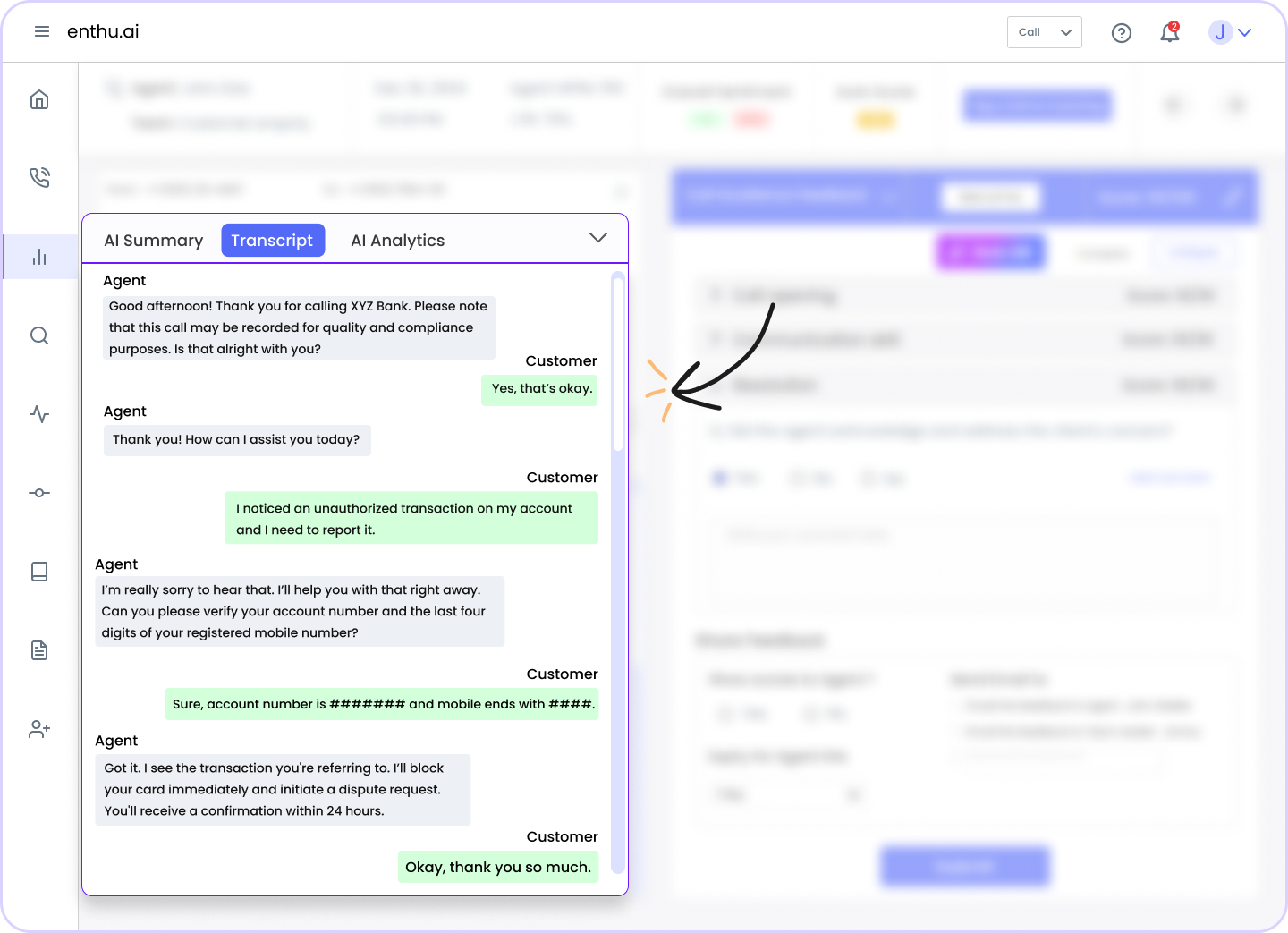

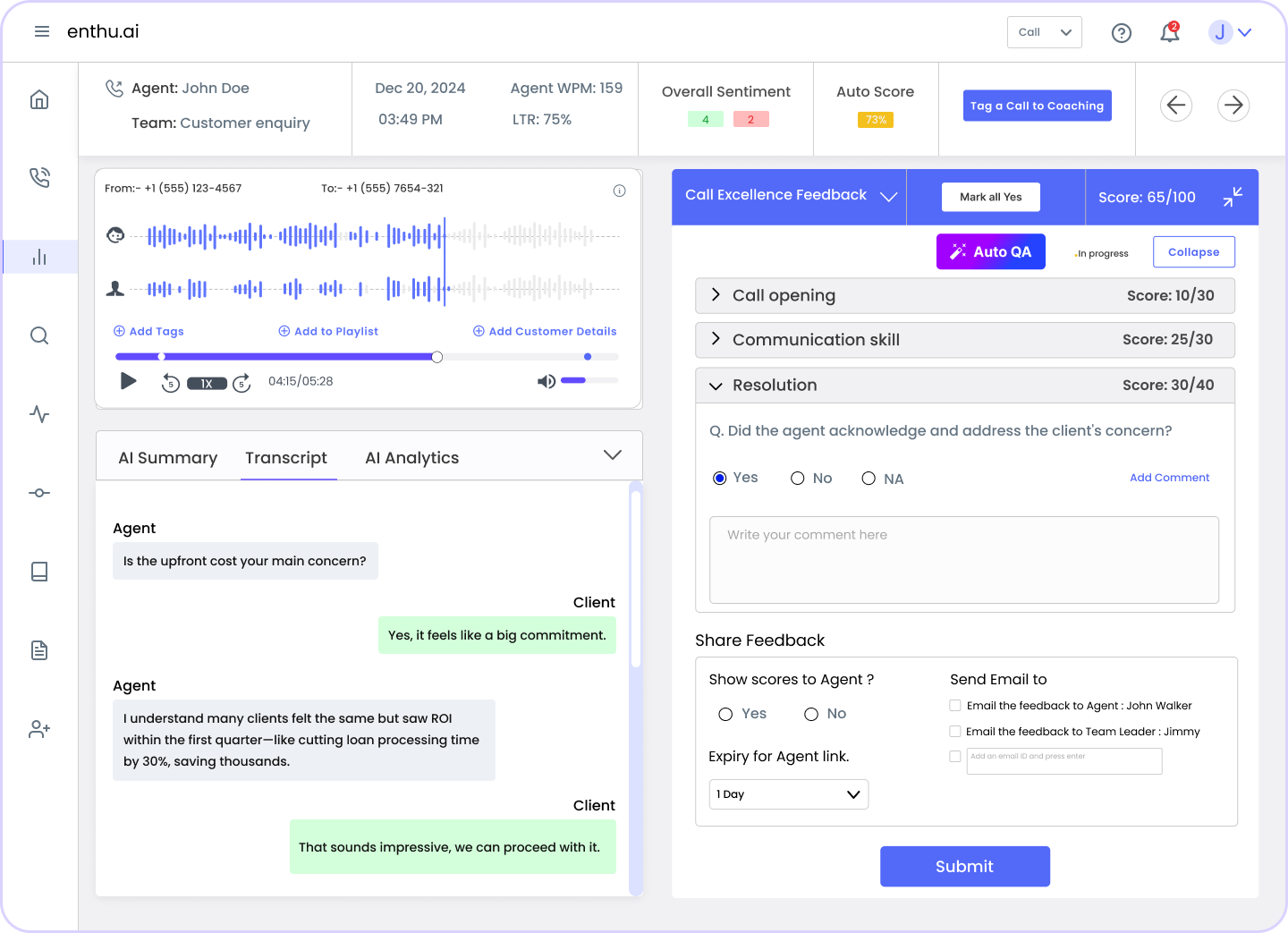

Step 2: Speech Is Transcribed into Text

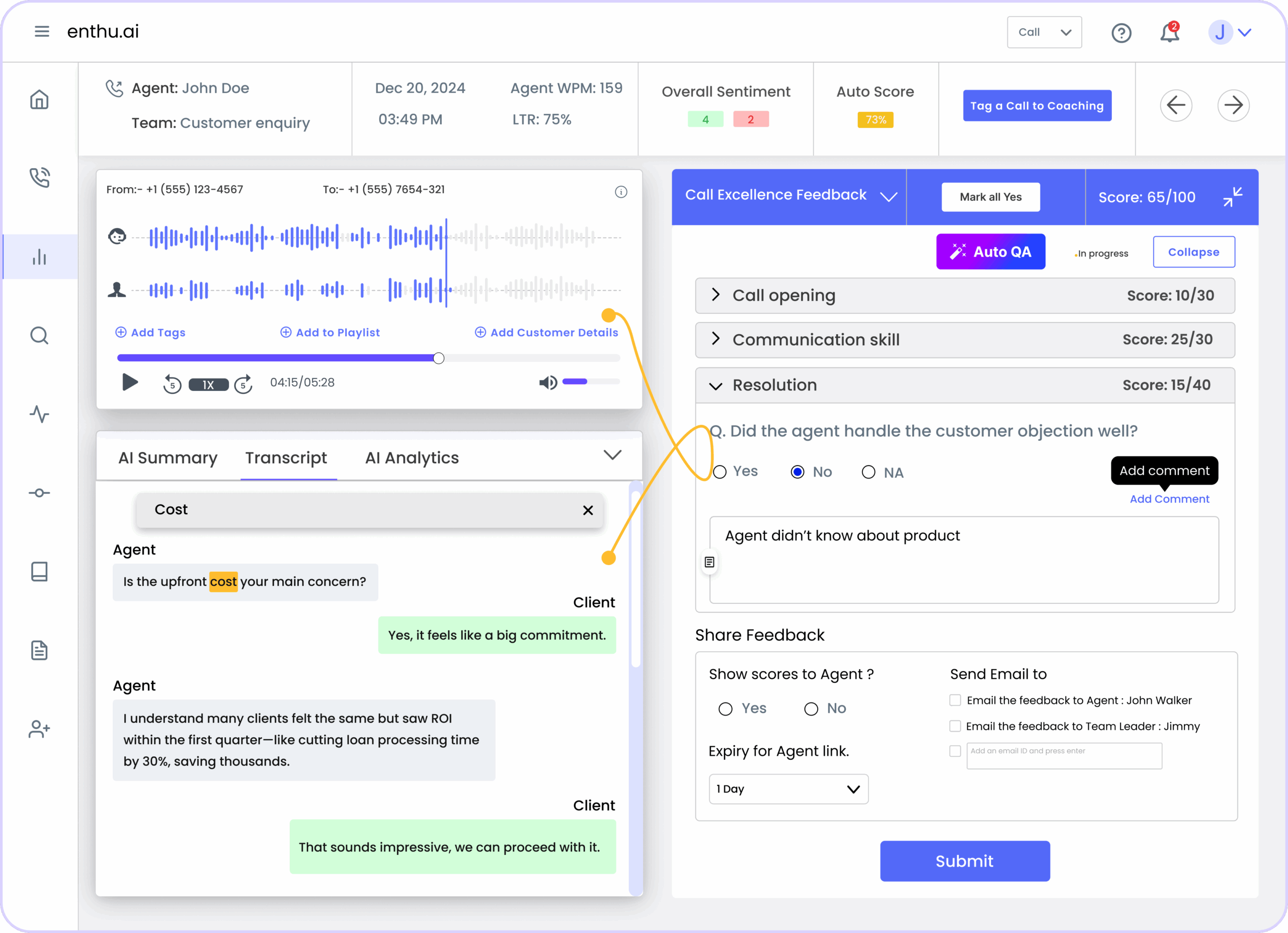

Step 3: AI Analyzes the Conversation

Step 4: Dashboards Turn Insights into Action

D. Top Use Cases for Speech Analytics in Call Centers

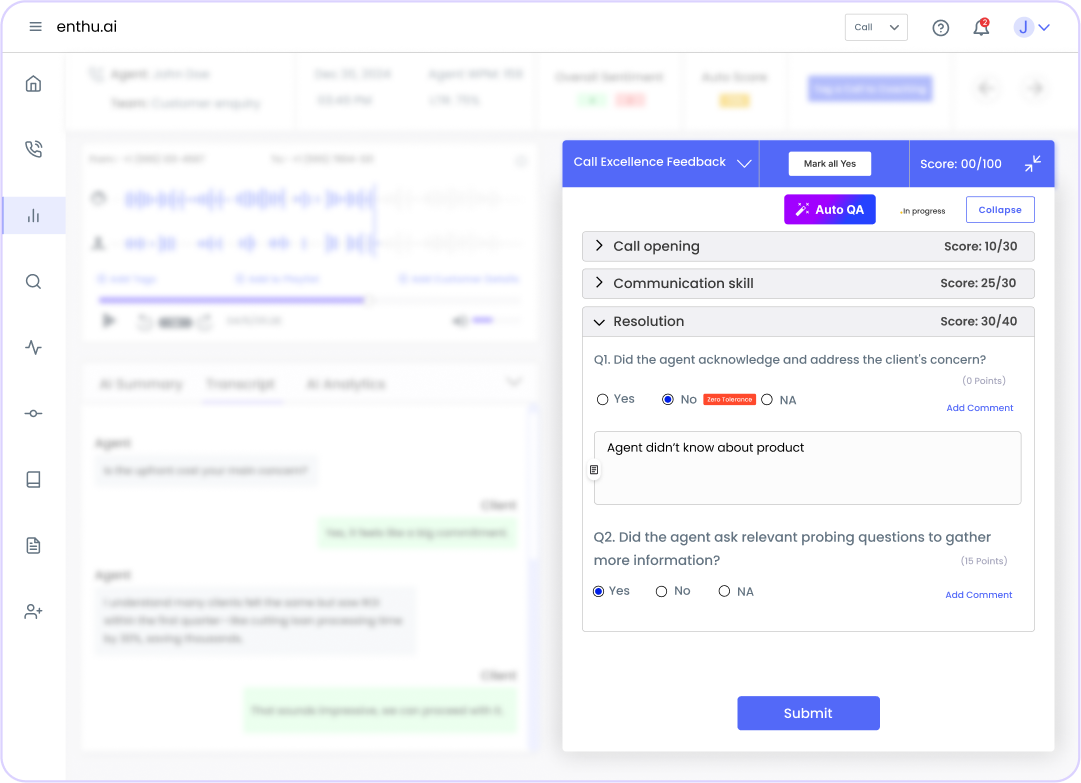

1. Smarter Coaching & Agent Performance Management

2. Compliance & Script Adherence

3. Escalation & Churn Risk Detection

4. Root Cause Analysis

5. Monitoring Soft Skills at Scale

E. Tools You Need for Call Center Speech Analytics

1. Call Recording Engine

2. Speech Analytics / QA Platform

3. Natural Language Processing (NLP) Engine

4. CRM & Helpdesk Integrations

5. Reporting & BI Dashboards

Conclusion

FAQs
