Call quality scores are supposed to be fair. But what happens when three managers give three different scores for the same call? That’s a red flag.

Inconsistent QA is more common than you’d think. In fact, 42% of contact center leaders say inconsistent scoring is their biggest quality assurance challenge.

It creates confusion. Agents don’t know what to improve. Managers can’t coach the right way. And your QA process loses credibility.

This is where call calibration becomes a must. It’s a structured way to make sure everyone’s on the same page. It helps align expectations, reduce bias, and bring more trust into the feedback loop.

In this article, we’ll break down what call calibration is, why it matters, who should be part of it, and how to make it work inside your contact center.

A. What is call calibration?

Call calibration is a process where QA teams, managers, and team leads listen to the same call and score it separately. Then they come together to compare results and agree on what the “right” score should be.
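If it helps to picture the “score separately, then compare” step, here is a minimal Python sketch. Everything in it is hypothetical: the evaluator roles, the criteria, the 1 to 5 scale, and the rule that a gap of more than one point means the group should talk it through. It simply lines up independent scores for one call and lists the criteria where evaluators disagree most.

```python
# Hypothetical independent scores for ONE call, per criterion, on an assumed 1-5 scale.
scores = {
    "QA analyst": {"greeting": 5, "empathy": 4, "verification": 2, "resolution": 4},
    "Team lead": {"greeting": 5, "empathy": 2, "verification": 2, "resolution": 5},
    "Manager": {"greeting": 4, "empathy": 5, "verification": 3, "resolution": 4},
}


def criteria_to_discuss(scores, max_spread=1):
    """Return {criterion: spread} where highest minus lowest score exceeds max_spread.

    max_spread is an assumed tolerance, not an industry standard.
    """
    criteria = next(iter(scores.values())).keys()
    flagged = {}
    for criterion in criteria:
        values = [evaluator_scores[criterion] for evaluator_scores in scores.values()]
        spread = max(values) - min(values)
        if spread > max_spread:
            flagged[criterion] = spread
    return flagged


print(criteria_to_discuss(scores))  # {'empathy': 3}
```

In a real session, the flagged criteria (here, empathy) would become the agenda for the discussion, and the score the group agrees on becomes the benchmark for future evaluations.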

It’s not just about checking the boxes. It’s about making sure everyone judges calls the same way.

Without calibration, one manager might pass a call while another fails it. That’s not just unfair; it confuses agents and makes coaching harder.

Calibration reduces bias and brings consistency to your QA process. Everyone follows the same standards, which means better scoring, better coaching, and a better customer experience.

B. Why call calibration matters

Call calibration isn’t just another meeting. It’s the backbone of a strong QA process. Here’s why it matters:

1. It keeps your QA scoring consistent

Scoring soft skills like tone and empathy is tricky. One person might think the agent was friendly. Another might say they sounded flat. Calibration helps fix that. Everyone uses the same lens to judge calls.

This makes your QA process fair and reliable.
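How do you know scoring is actually consistent? One simple check (an assumption on our part, not a standard metric) is to track how far each evaluator’s independent score lands from the score the group finally agreed on, across several calibration calls. The names, the 0 to 100 scores, and the five-point tolerance below are made up for illustration.

```python
# Hypothetical calibration history: the agreed score for each call and
# each evaluator's independent score, on an assumed 0-100 scorecard.
sessions = [
    {"agreed": 82, "scores": {"Ana": 80, "Ben": 90, "Chloe": 83}},
    {"agreed": 70, "scores": {"Ana": 72, "Ben": 81, "Chloe": 69}},
    {"agreed": 91, "scores": {"Ana": 88, "Ben": 95, "Chloe": 90}},
]


def mean_deviation(sessions):
    """Average absolute gap between each evaluator's score and the agreed score."""
    gaps_by_evaluator = {}
    for session in sessions:
        for evaluator, score in session["scores"].items():
            gap = abs(score - session["agreed"])
            gaps_by_evaluator.setdefault(evaluator, []).append(gap)
    return {name: sum(gaps) / len(gaps) for name, gaps in gaps_by_evaluator.items()}


def needs_recalibration(sessions, tolerance=5.0):
    """Evaluators whose average gap exceeds the (assumed) tolerance."""
    return [name for name, gap in mean_deviation(sessions).items() if gap > tolerance]


print(needs_recalibration(sessions))  # ['Ben']
```

A consistently large gap doesn’t mean someone is wrong. It points to where that evaluator reads the rubric differently, which is exactly what a calibration session should unpack.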

2. It gives customers a steady experience

Consistency in scoring leads to consistency on calls. No matter which agent a customer talks to, the service feels the same.

That builds trust. And in call centers, trust is everything.

3. It connects agents to your business goals

Calibration isn’t just about the scorecard. It’s a chance to remind everyone of the bigger picture.

Managers can explain why certain behaviors matter. Agents get clear on what the company values.

4. It helps you hit your CSAT goals

During calibration, teams can review metrics like average handle time (AHT), dead air, or customer sentiment.

You spot what’s hurting the experience. Then you fix it fast. That leads to better CSAT and happier customers.
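The article doesn’t define these metrics, so here is a hedged sketch of how you might compute two of them from exported call data. It uses the common definition of average handle time (talk time plus hold time plus after-call work, divided by the number of calls handled) and an assumed definition of dead air: silent gaps of five seconds or longer, as a share of talk time. The `Call` record and its fields are hypothetical; real platforms export this data in their own formats and may define dead air differently.

```python
from dataclasses import dataclass, field


@dataclass
class Call:
    talk_sec: float  # time agent and customer were connected, excluding hold
    hold_sec: float  # time the customer spent on hold
    wrap_sec: float  # after-call work
    silences_sec: list[float] = field(default_factory=list)  # silent gaps during talk time


def average_handle_time(calls):
    """AHT = (talk + hold + after-call work) / number of calls handled, in seconds."""
    total = sum(c.talk_sec + c.hold_sec + c.wrap_sec for c in calls)
    return total / len(calls)


def dead_air_share(call, min_gap_sec=5.0):
    """Share of talk time spent in silent gaps of at least min_gap_sec (assumed threshold)."""
    dead = sum(gap for gap in call.silences_sec if gap >= min_gap_sec)
    return dead / call.talk_sec if call.talk_sec else 0.0


calls = [
    Call(talk_sec=300, hold_sec=60, wrap_sec=45, silences_sec=[2, 8, 12]),
    Call(talk_sec=240, hold_sec=0, wrap_sec=30, silences_sec=[3, 4]),
]

print(average_handle_time(calls))  # 337.5
print(f"{dead_air_share(calls[0]):.1%}")  # 6.7%
```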

5. It helps spot weak spots in workflows

By listening to calls, you might notice the same issue coming up. Maybe agents struggle with a billing question. Or maybe a process is too slow.

Calibration gives you that 360-degree view. You can tweak workflows or build training around those gaps.
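Noticing “the same issue coming up” is easier if evaluators tag what they hear during reviews. As a minimal sketch, assuming each reviewed call carries a list of short issue tags (the call IDs and tags here are invented), you can count how many calls each issue appears on and surface the ones that recur, like the billing question above.

```python
from collections import Counter

# Hypothetical issue tags evaluators attached to each reviewed call.
reviewed_calls = {
    "call-101": ["billing question unresolved", "long hold"],
    "call-102": ["billing question unresolved"],
    "call-103": ["skipped identity verification", "long hold"],
    "call-104": ["billing question unresolved", "skipped identity verification"],
}


def recurring_issues(tagged_calls, min_calls=2):
    """Return (tag, number_of_calls) pairs for tags seen on at least min_calls calls.

    Sorted by frequency (highest first), then alphabetically to break ties.
    """
    # dict.fromkeys drops duplicate tags within a single call while keeping order.
    counts = Counter(tag for tags in tagged_calls.values() for tag in dict.fromkeys(tags))
    frequent = [(tag, n) for tag, n in counts.items() if n >= min_calls]
    return sorted(frequent, key=lambda item: (-item[1], item[0]))


for tag, n in recurring_issues(reviewed_calls):
    print(f"{tag}: {n} calls")
```

What counts as “recurring” is up to you. A tag that shows up across many calls is a candidate for a workflow fix or a targeted training session.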

6. It improves your coaching game

When call scores are off, coaching gets messy. Calibration fixes that. It helps managers give clearer, more focused feedback. It also shows QA teams where coaching might be falling short.

7. It builds agent trust and reduces churn

Agents want fairness. They don’t want to feel judged by opinion. Calibration brings transparency. And when agents trust the process, they stay longer.

That matters, since turnover is one of the biggest problems in contact centers right now.

C. Who should participate in call calibration?

For calibration to work, you need people who see the call from different angles.

Each role brings a unique perspective. Here’s who should join and how they help.

1. QA Analysts

They know the scorecard inside out, catch the small stuff, and help keep the process structured. QAs make sure scoring sticks to the rules.

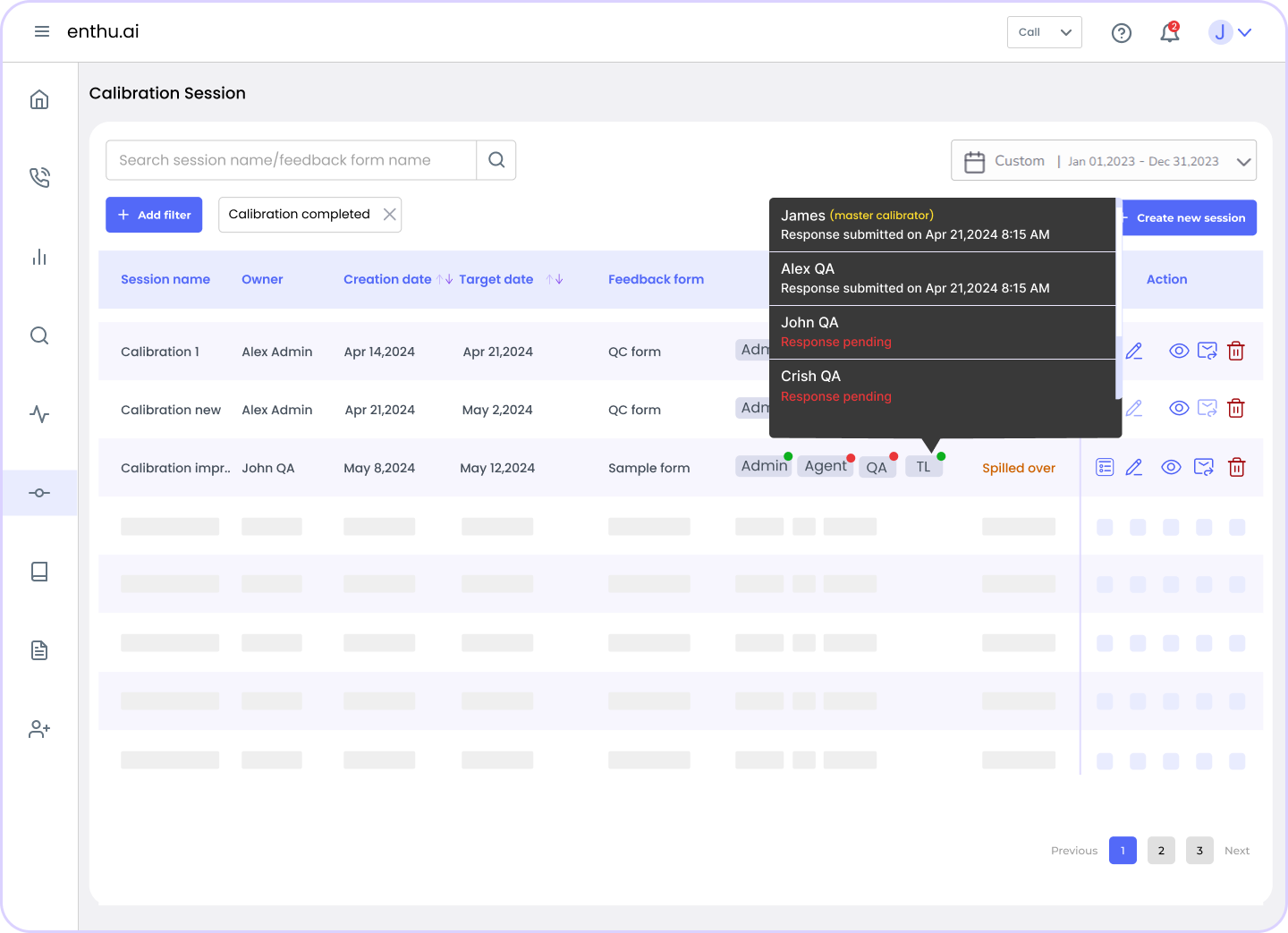

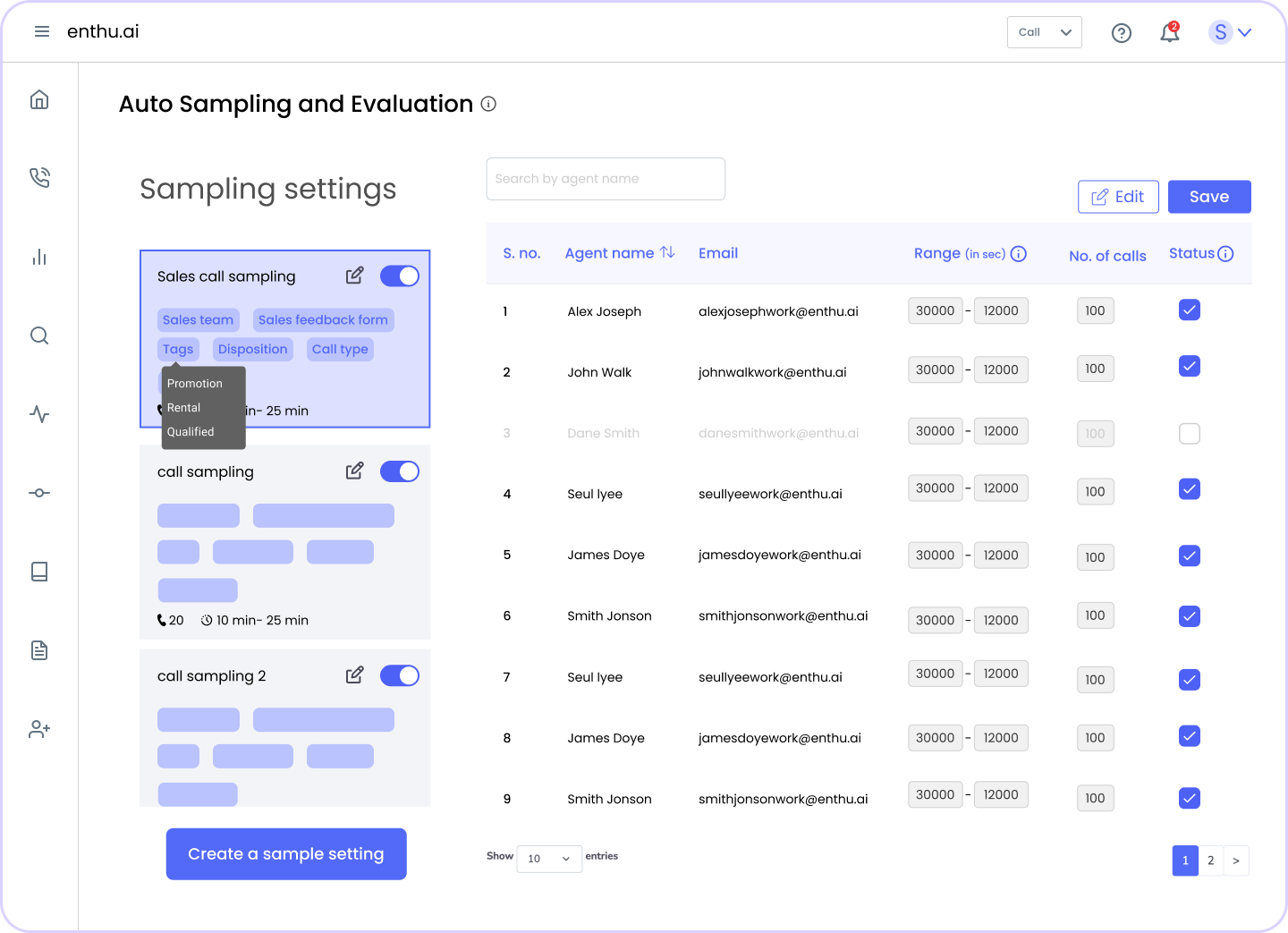

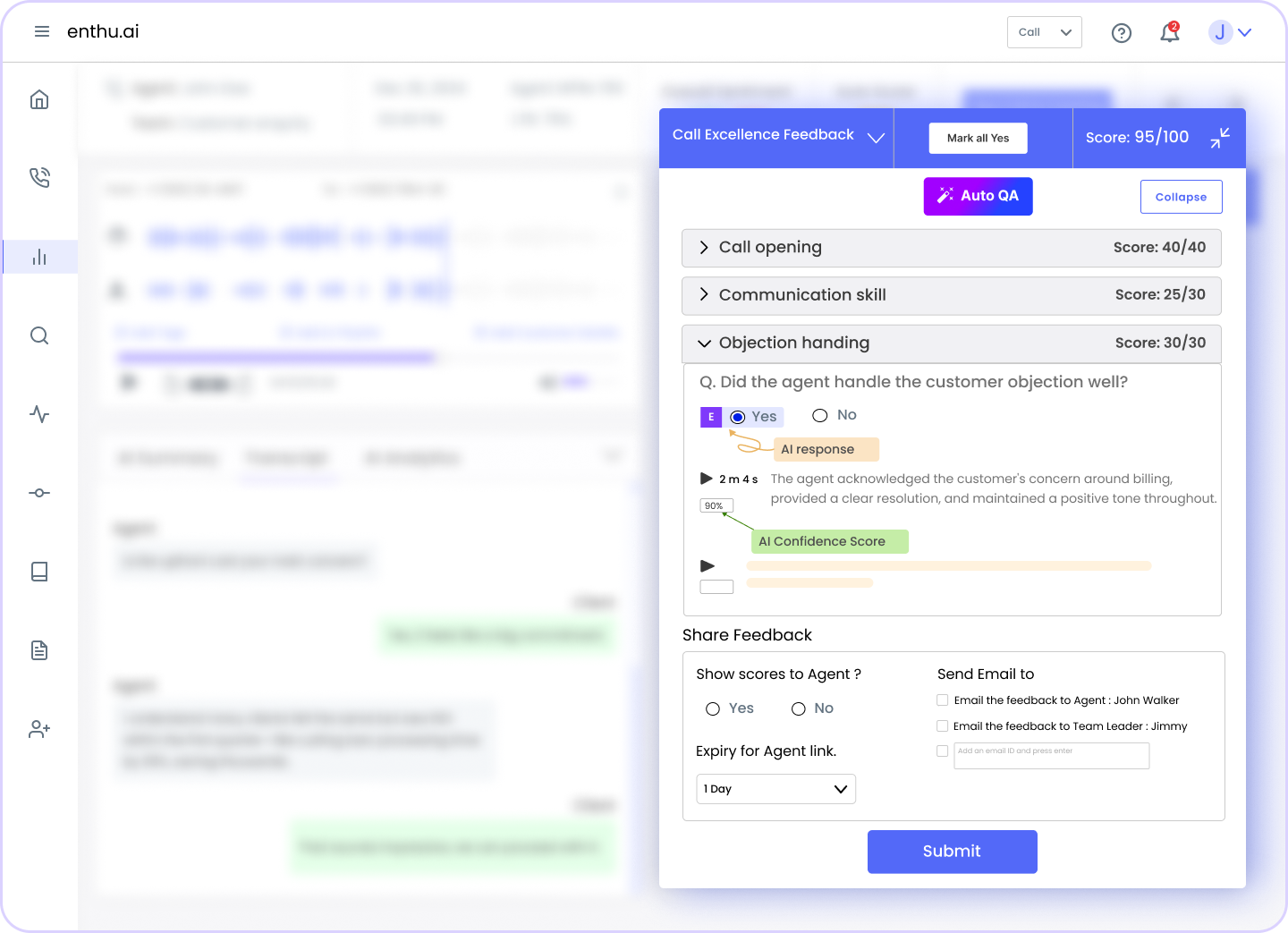

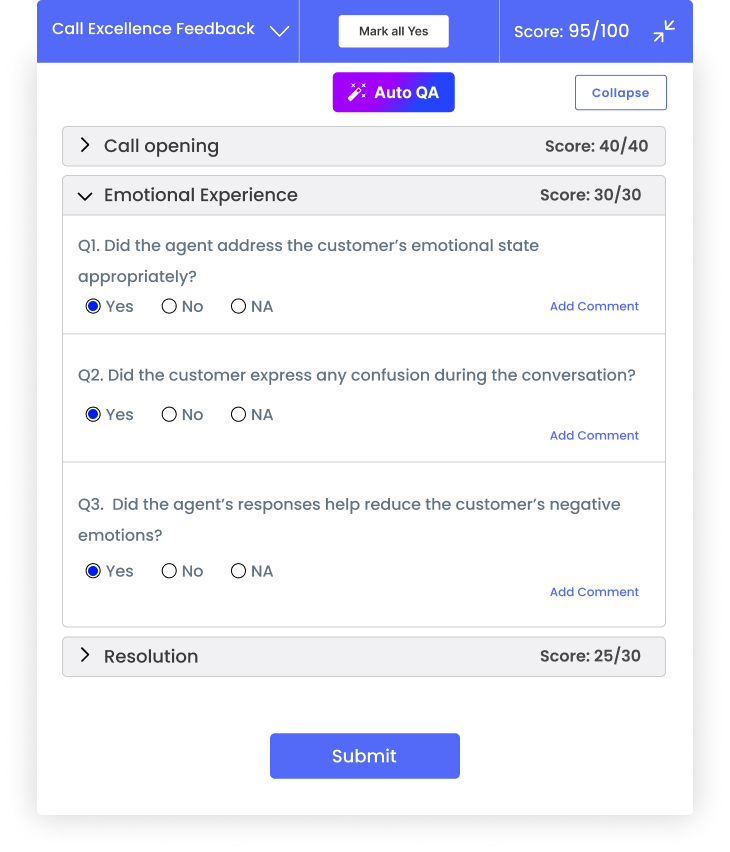

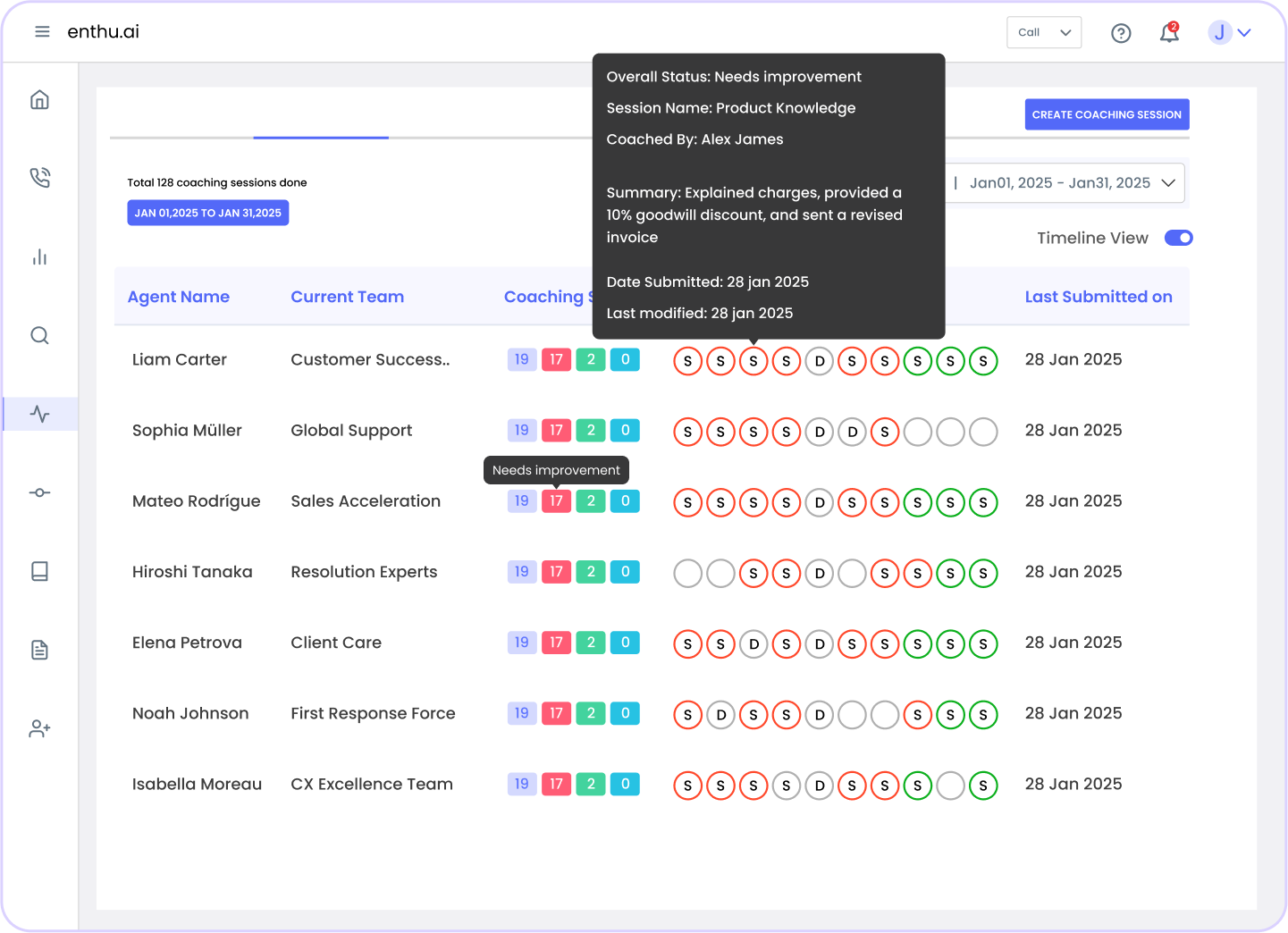

Example A QA analyst notices that Agent Priya skips the identity verification step in several calls. They bring it up so the team can agree on how serious that miss is. TLs work closely with agents. They know their team’s strengths and struggles. A team lead points out that a billing process changed last week, which explains why agents sound confused. They help explain agent behavior and flag where coaching is needed. They connect calibration with business goals and also help drive accountability. Supervisors can guide tough conversations and make final calls when there’s a tie. A manager may ask the group to tighten scoring around upselling, since it’s a top priority this quarter. Yes, agents too. Not always, but sometimes. Letting them join a session can boost trust and transparency. It shows them how scores are decided. Just make sure it’s a safe space where no one feels attacked. Example An agent joins to understand how their calls are evaluated. They realize that tone and empathy matter just as much as resolution. This builds transparency and increases trust in the QA process. Call calibration might sound like a big process, but once you break it down, it’s pretty simple. Here’s how it usually works, step by step. Start by picking a call that’s worth reviewing. It could be a strong call, a weak one, or something in the middle. The idea is to choose a call that brings learning opportunities to the table. Tip: Avoid cherry-picking. Random samples give you a clearer picture of what’s really happening on the floor. If you’re using a platform like Enthu.AI, you can quickly auto-sample the calls based on specific criteria like call duration, long hold time, or missed script points. This saves you time and helps you surface the right call for the session. Before the meeting, each participant should listen to the call on their own. They should score it using the same QA rubric or scorecard. This part matters a lot. No one should discuss the call before scoring. 
Independent scoring shows how each person interprets the call without outside influence. If your team uses any call auditing tool, scoring becomes easier. Everyone sees the same scorecard and call transcript, so they can focus on the actual performance instead of hunting for the details. Now it’s time to meet and compare scores. You’ll likely see differences, especially in areas like empathy, tone, or call control. That’s normal. Go through the call step by step. Ask each person why they gave the score they did. Was the tone good enough? Did the agent handle objections properly? These are the kinds of discussions that make calibration valuable. The goal isn’t to prove someone wrong. It’s to understand why people scored the way they did and where interpretations are slipping apart. This is also a great time to revisit your QA guidelines. If a question keeps coming up, your rubric might need some tightening. After all the discussion, the group should agree on what the final score should be. This becomes your benchmark. It’s what everyone should aim to match in future evaluations. Take notes during this step. You can use them to update internal documentation or even create coaching guides based on the conversation. Teams using Enthu.AI often save these benchmark calls inside the system and set a master calibrator. That way, future calibration sessions stay aligned and new team members can be trained faster. Calibration isn’t just about getting a number right. It’s about making sure everyone sees the call the same way. When your team aligns on how to judge performance, agents get clearer feedback, coaching becomes stronger, and the customer experience improves. Before you start calibrating, get clarity on what “quality” means for your team. Define clear, measurable QA standards like call flow, tone, empathy, accuracy, and compliance. These should directly reflect your company’s goals. 
Without this baseline, every evaluator scores based on their own assumptions, which defeats the whole point of calibration. Tip: Use a scoring rubric that breaks each section into small behaviors. Enthu.AI helps build and standardize custom scorecards across teams. Calibration isn’t just for QA folks. Your agents need to understand how they’re being evaluated. Once your scorecard is ready, use it to train agents, explain what each metric means, share real examples, and let them score calls too. When agents are part of the loop, they’re more likely to accept coaching and improve faster. Manual QA often covers only 1–2% of total calls, which means decisions are based on a tiny sample. Automated call monitoring changes that. Tools like Enthu.AI let you analyze end to end conversations, identify coaching moments, and pull specific calls for calibration. This improves both accuracy and efficiency. Generative AI can help you fast-track call summaries, flag common QA gaps, and even recommend coaching. But human context is still key. AI should assist, not replace your judgment. When your team and tech work together, you can calibrate faster and more effectively. After each session, look for patterns. Are multiple agents struggling with compliance? Do certain calls spark wide scoring discrepancies? Use that intel to coach better. And make feedback clear, specific, and tied to the rubric, not vague opinions. This builds confidence in the QA process. Consistency builds trust. Set a fixed calibration schedule weekly, bi-weekly, or monthly. Define how sessions will run: how calls are selected, how scores are shared, and how to resolve disputes. This keeps things fair and focused. Create a shared document where all decisions and changes are noted. Don’t make calibration a top-down exercise. Invite agents to join a session occasionally or give feedback on the scorecard. This shows them the process isn’t about “catching mistakes”, it’s about helping everyone grow. 
It also keeps your evaluation framework grounded in real-life call scenarios. Tip: Enthu.AI lets you share QA evaluations directly with agents and track feedback loops. You’d be surprised how many teams calibrate, agree and then forget what they agreed on. Always record final scores, discussion notes, and any changes to the rubric. Share these with the QA and team lead groups so everyone stays aligned going forward. It also helps onboard new evaluators faster. Disagreements are natural because call evaluations involve judgment. Make sure everyone listens carefully and explains their scores with clear examples from the call. Encourage questions like “What made you give that score?” This helps keep the conversation focused on facts instead of opinions. Be aware of common biases, such as being too lenient or too strict. Avoid assumptions about the agent’s intent or attitude. Remind the team to stick to the scoring rubric and evidence from the call. This keeps scoring fair and objectively. If disagreements get stuck, refer back to your QA guidelines or rubric for clarity. Sometimes it helps to table the discussion and revisit the call later with fresh eyes. The goal is to reach a consistent, agreed-upon score that supports better coaching and agent growth. When agents see inconsistent QA scores, it can feel unfair and demotivating. Calibration helps fix that. With everyone scoring the same way, agents get feedback they can trust. They understand what’s expected and don’t feel penalized for things outside their control. This leads to better morale and fewer complaints about “unfair scoring.” When evaluators align on what “good” looks like, agents start trusting the system. Calibration removes the guesswork and favoritism often felt in performance reviews. Over time, this trust creates a stronger, more transparent culture where QA isn’t seen as a threat but as support. When QA scores are calibrated, coaching becomes more targeted. 
Managers and team leads can rely on accurate data to give meaningful feedback. Instead of vague suggestions, agents receive clear insights on what to improve and how. This results in faster skill development and more confident reps. Use this quick reference before, during, and after your calibration sessions to ensure every meeting is productive, fair, and focused. Before the session During the session After the session Optional Call calibration is more than a QA step. It creates fairness, clarity, and growth in your contact center. Aligning scores and expectations helps agents improve and strengthens coaching. This builds trust and consistency, which lead to better customer experiences. In today’s competitive world, delivering consistent quality on every call sets you apart. Make calibration a priority and watch your team perform at its best. When evaluations are fair and feedback is clear, excellent service becomes the standard. 1. How to run a call calibration session? The frequency of call calibration sessions depends on the size and operational requirements of the call center. However, regular sessions, whether weekly or monthly, are recommended to ensure consistency and continuous improvement. 2. What role does technology play in call calibration? Technology, such as conversation intelligence and speech analytics tools, enhances the call calibration process by providing data-driven insights into customer interactions. These tools contribute to more objective evaluations and facilitate a more efficient calibration workflow. 3. Can call calibration be integrated into agent training programs? Yes, call calibration should be an integral part of agent training programs. Insights gained from calibration sessions can be used to identify training needs and tailor ongoing development programs, creating a seamless connection between calibration and continuous training.2. Team Leads

3. Managers or Supervisors

4. Sometimes, Agents

D. How the Call calibration process works

Step 1: Select a sample call

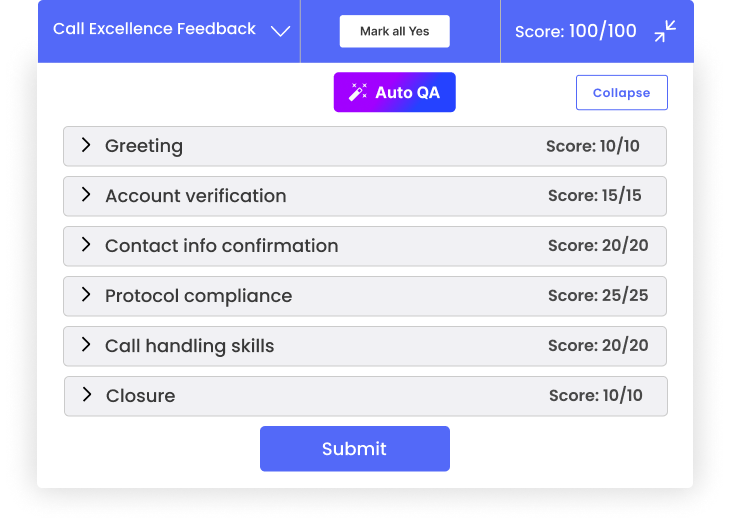

Step 2: Everyone scores the call independently

Step 3: Discuss the score differences

Step 4: Align on the final score

E. 8 Best practices for effective call calibration in a contact center

1. Define quality standards

2. Train agents

3. Automate quality monitoring

4. Leverage generative AI

5. Analyze results and provide actionable feedback

6. Follow up and establish ground rules

7. Involve frontline feedback

8. Document calibration outcomes (NEW)

F. How to handle disagreements during calibration

1. Encourage open and respectful discussion

2. Watch out for biases

3. Use your guidelines to settle differences

G. How call calibration improves QA and coaching

1. Reduces agent frustration

2. Builds trust in the QA process

3. Makes coaching more consistent and actionable

H. Call calibration checklist for managers

Conclusion

FAQs
