Call quality can make or break a customer’s support experience.

In today’s omnichannel world, phone calls still reign supreme for urgent support.

59% of customers rank a phone call as their top support preference.

That means your call center’s audio quality isn’t just a technical concern; it directly shapes customer satisfaction.

Enter Mean Opinion Score (MOS), a handy metric that captures voice call quality on a simple scale.

In this article, we’ll explore what MOS is, how to use it to evaluate call quality, and what tools and practices can boost it.

Let’s get started!

A. What is a mean opinion score (MOS)?

Mean Opinion Score (MOS) is a numerical measure of voice call quality, rated on a scale from 1 to 5, where 1 is poor and 5 is excellent.

It’s widely used in telecommunications, especially in call centers relying on Voice over Internet Protocol (VoIP) systems.

Originally, MOS was calculated through subjective testing.

A group of listeners would rate call samples based on clarity, lack of noise, and overall experience.

The average of these ratings became the MOS.

Today, objective methods dominate, using algorithms to estimate MOS by analyzing technical factors like:

- Codec performance: How audio compression affects quality.

- Network conditions: Issues like latency, jitter, and packet loss.

- Hardware quality: The effectiveness of headsets and microphones.

For QA teams, MOS is a vital tool for post-call analysis, helping flag calls with poor quality for review.

B. What is a good MOS score and how is it calculated?

MOS is rated on a scale from 1 to 5, where 1 means the call quality was awful and 5 means it was crystal clear.

It’s an Absolute Category Rating (ACR) scale commonly used in telecom.

| MOS Score | Rating | What It Means | Call Center Impact |
| --- | --- | --- | --- |
| 5 | Excellent | Feels like a face-to-face conversation | Happy customers, smooth conversations |
| 4 | Good | Clear with minor imperfections | Solid experience, no major complaints |
| 3 | Fair | Robotic tones, occasional drops | Customers notice; satisfaction dips |
| 2 | Poor | Frequent gaps, hard to understand | Frustration, repeats, escalations |
| 1 | Bad | Unintelligible; callers hang up | Lost trust, lost business |

Here’s the breakdown of MOS ratings:

- 5 – Excellent: Perfect call quality. The audio is clear, loud, and free of distortion. (In practice, hitting a true 5 is rare, as even great VoIP calls have minor imperfections.)

- 4 – Good: High-quality audio with only minor, barely noticeable issues. Most well-run call centers aim for MOS in the 4+ range for their calls.

- 3 – Fair: Acceptable but not great. The call was understandable but had some problems (mild static, a little muffled, etc.). This is a sign that quality could be improved.

- 2 – Poor: Significant audio issues. The conversation likely had choppy audio, delays, or noise that made it hard to communicate. A MOS in the 2s is a serious concern.

- 1 – Bad: Virtually unusable call quality. Audio was garbled or indecipherable, to the point that communication failed.

In call centers, a MOS of 4.0 or higher is ideal, while scores below 3.6 often need attention to avoid customer dissatisfaction. For example, a MOS of 3.5 might mean 50% of customers notice issues like static or delays.
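These thresholds translate into a simple triage rule. The sketch below is a minimal illustration that assumes the 4.0 and 3.6 cut-offs quoted above; the function name and bucket labels are made up for this example and are not part of any standard.

```python
def triage_mos(mos: float) -> str:
    """Map a MOS value to a review bucket using the thresholds quoted above."""
    if not 1.0 <= mos <= 5.0:
        raise ValueError(f"MOS must be between 1 and 5, got {mos}")
    if mos >= 4.0:
        return "ideal"            # the range well-run call centers aim for
    if mos >= 3.6:
        return "acceptable"       # usable, but worth keeping an eye on
    return "needs attention"      # below 3.6: flag the call for review


for score in (4.3, 3.8, 3.5):
    print(score, "->", triage_mos(score))
```

You may want different cut-offs for your own environment; the point is that a single number per call makes this kind of rule easy to apply.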

How is MOS calculated?

In simple terms, you either average human ratings or use an algorithm that predicts those ratings.

If using people, you’d have multiple listeners score the call quality 1–5, then you take the mean of those opinions – hence the name Mean Opinion Score.

For example, if three people rated a call 4, 4, and 3, the MOS would be 3.67.
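The same arithmetic can be written as a short sketch. The function name is illustrative, and rounding to two decimals is an assumption made to match the example above.

```python
from statistics import mean


def mean_opinion_score(ratings: list[int]) -> float:
    """Average listener ratings (each an integer from 1 to 5) into a MOS."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("each rating must be an integer from 1 to 5")
    return round(mean(ratings), 2)


print(mean_opinion_score([4, 4, 3]))  # 3.67
```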

In practice, call centers rely on the algorithmic approach: software tools analyze the call’s audio and network characteristics (using ITU-T standards such as the G.107 E-model or P.863 POLQA) and output a MOS value automatically.

These tools simulate what an average person would perceive, which is why MOS is so handy.

C. MOS vs other voice quality metrics

MOS is a high-level summary of call quality, whereas metrics like jitter, latency, and packet loss are the granular technical measurements that influence that quality.

If you’ve ever heard IT folks talk about these terms, it’s because they directly affect audio performance on VoIP calls.

| Metric | Description | Impact on Call Quality |
| --- | --- | --- |
| Jitter | Variation in packet arrival times | Causes choppy or distorted audio |
| Latency | Delay in audio transmission | Leads to conversational delays |
| Packet Loss | Lost data packets during transmission | Results in audio gaps |
| MOS | Composite score of overall call quality | Reflects user experience |

Here’s how they relate:

- Latency is the delay between when one person speaks and the other person hears it. High latency (lag) can make conversations awkward, causing people to talk over each other or wait uncomfortably. It’s measured in milliseconds.

- Jitter is the variation in packet arrival timing. In VoIP, voice data is sent in packets; if those packets arrive at uneven intervals (due to network fluctuation), the audio can sound choppy or “robotic”. Essentially, jitter is the shakiness of the connection.

- Packet loss is when some of those voice data packets never reach the destination. Missing packets mean missing pieces of audio, resulting in words dropping out or gaps of silence in the call.
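To make two of these definitions concrete, here is a minimal sketch that computes jitter and packet loss from per-packet data. The jitter function follows the smoothed interarrival estimate described in RFC 3550; the function names, the millisecond timestamp lists, and the sample values are hypothetical. Latency is left out because measuring one-way delay needs synchronized clocks at both ends.

```python
def packet_loss_pct(received_seq: list[int], expected_count: int) -> float:
    """Percentage of expected packets that never arrived."""
    if expected_count <= 0:
        raise ValueError("expected_count must be positive")
    arrived = len(set(received_seq))  # ignore duplicate deliveries
    return 100.0 * (expected_count - arrived) / expected_count


def interarrival_jitter_ms(send_ms: list[float], recv_ms: list[float]) -> float:
    """RFC 3550-style running jitter estimate, in milliseconds."""
    jitter = 0.0
    for i in range(1, len(send_ms)):
        # How much the spacing between two packets changed in transit.
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16  # smoothing factor from RFC 3550
    return jitter


# Hypothetical sample: packets sent every 20 ms, packet 3 of 5 lost.
print(packet_loss_pct([1, 2, 4, 5], expected_count=5))               # 20.0
print(interarrival_jitter_ms([0, 20, 40, 60], [50, 70, 90, 110]))    # 0.0
```

Evenly spaced arrivals give zero jitter no matter how large the fixed delay is, which is why jitter and latency are tracked as separate metrics.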

While jitter, latency, and packet loss are specific, MOS combines them into a user-friendly score.

This makes it ideal for QA teams who need a quick overview of call quality without diving into technical details.

MOS is best for post-call analysis, not real-time troubleshooting, as it summarizes the customer’s perceived experience.
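To illustrate how the three network metrics can roll up into one score, the sketch below uses a simplified approximation of the ITU-T G.107 E-model that circulates in network-monitoring practice. The latency, jitter, and loss coefficients are assumptions of that heuristic, not the full standard, and not the method any tool named in this article is known to use. Only the final step, the mapping from the rating factor R to MOS, follows the G.107 formula. The function name is illustrative.

```python
def estimate_mos(latency_ms: float, jitter_ms: float, loss_pct: float) -> float:
    """Rough MOS estimate from network metrics (heuristic, not full G.107)."""
    # Heuristic assumption: jitter hurts about twice as much as latency,
    # plus a fixed 10 ms allowance for codec processing delay.
    effective_latency = latency_ms + 2 * jitter_ms + 10

    # Start from R = 93.2 (the E-model default) and subtract delay impairment.
    if effective_latency < 160:
        r = 93.2 - effective_latency / 40
    else:
        r = 93.2 - (effective_latency - 120) / 10

    # Heuristic assumption: each 1% of packet loss costs 2.5 R points.
    r -= 2.5 * loss_pct
    r = max(0.0, min(100.0, r))

    # G.107 mapping from R to MOS, floored at 1.0 for very small R.
    mos = 1 + 0.035 * r + 7e-6 * r * (r - 60) * (100 - r)
    return max(1.0, mos)


print(round(estimate_mos(latency_ms=20, jitter_ms=2, loss_pct=0), 2))
print(round(estimate_mos(latency_ms=200, jitter_ms=30, loss_pct=3), 2))
```

With no impairments at all, R stays at 93.2 and the mapping gives roughly 4.4, which is why narrowband calls top out well below a perfect 5.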

D. Why MOS matters for call centers

You might be thinking, “Alright, MOS measures voice quality. But how much does that really matter for our call center performance?”

The answer: a lot.

Here are several reasons why MOS is a big deal for call centers:

- Customer satisfaction: Clear calls ensure customers understand agents, reducing frustration. Poor quality can lower Customer Satisfaction Scores (CSAT) by up to 50%.

- First call resolution (FCR): Low MOS can force customers to repeat themselves or call back, dropping FCR rates. High-performing call centers achieve FCR rates of 70% or higher with good call quality.

- Agent morale: Technical issues like static or delays frustrate agents, increasing turnover. Clear calls improve agent confidence and job satisfaction.

- Operational costs: Poor quality leads to longer calls, escalations, and rework, raising costs. High MOS reduces these inefficiencies.

- Brand reputation: Consistent call quality builds trust and loyalty, enhancing your brand’s image.

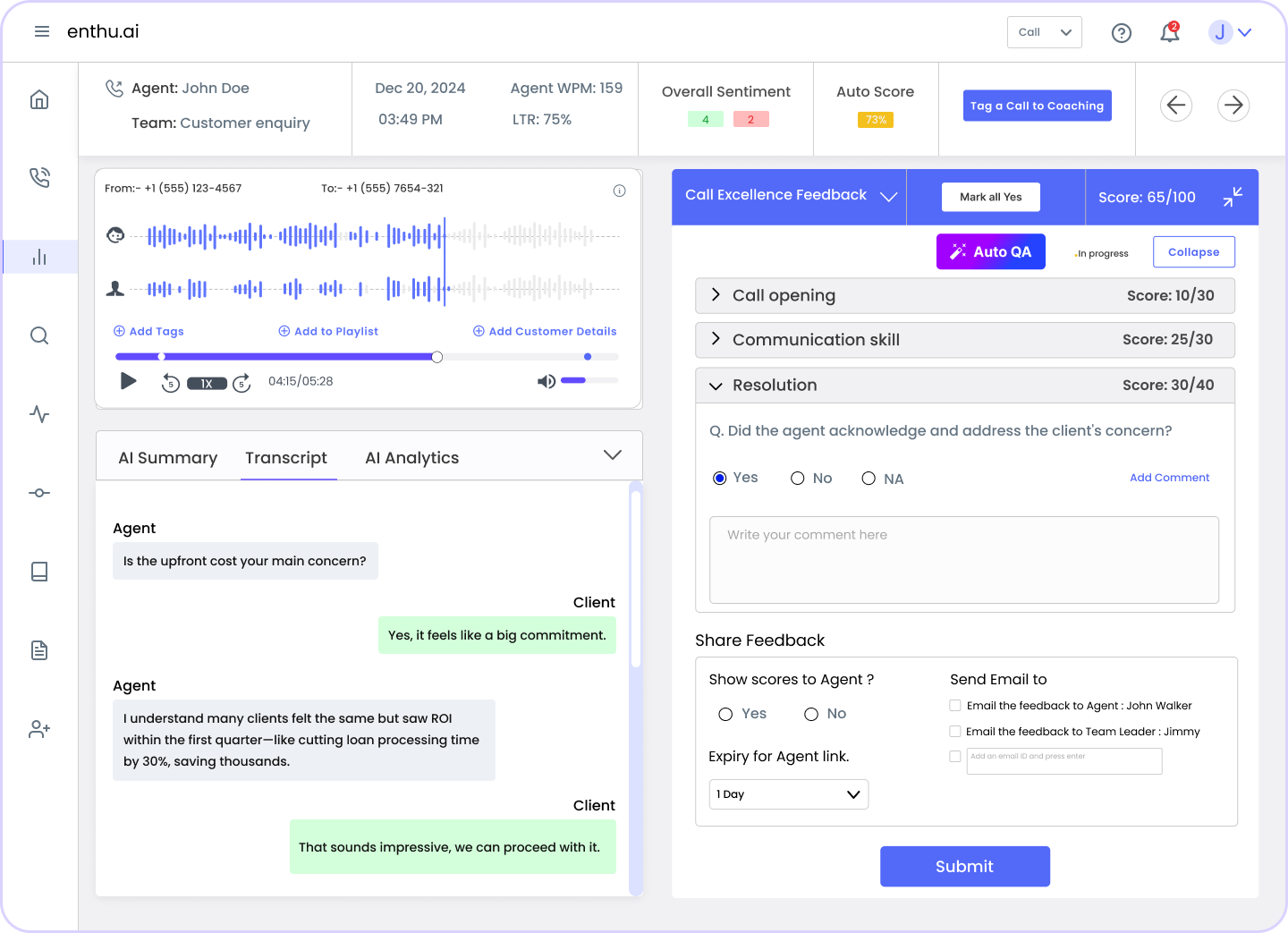

Tools like Enthu can flag low-MOS calls for QA review, helping you address issues before they impact customers. By maintaining high MOS, you create a win-win for customers, agents, and your bottom line.

E. 7 common causes of low MOS and how to improve it

Okay, so you’re convinced that MOS is important and you want to keep it high.

The next question is: what causes a low MOS, and how can you fix it?

There are several usual suspects when call quality tanks.

Here are 7 common causes of poor MOS in call centers (and what you can do about each):

1. Limited network bandwidth (especially for remote/hybrid agents)

Voice calls don’t require massive bandwidth, but they do need a reliable, steady connection.

If your agents (particularly those working from home or remote sites) are on slow or congested networks, call quality will suffer.

You might get symptoms like audio cutting out or delay, leading to MOS drops.

Improvement:

Ensure adequate bandwidth and network stability for all agents.

For office locations, this means robust internet service and possibly dedicated bandwidth for VoIP.

For remote agents:

- Provide guidelines (or even subsidies) for a minimum internet speed.

- Encourage them to limit other heavy internet usage during calls (no big downloads while on a customer call!).

- Consider using network optimization tools or SD-WAN configurations that prioritize voice packets.
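To put the bandwidth requirement in numbers, the sketch below estimates per-call bandwidth from a codec's bitrate and packetization interval. It assumes RTP over UDP over IPv4 (40 bytes of headers per packet) and ignores link-layer overhead, so treat the result as a lower bound. The function name and defaults are illustrative.

```python
def call_bandwidth_kbps(codec_kbps: float, packet_ms: float = 20.0,
                        header_bytes: int = 40) -> float:
    """Approximate one-way IP bandwidth for a single VoIP call, in kbps."""
    packets_per_second = 1000.0 / packet_ms
    # kbps * ms = bits per packet; divide by 8 for bytes.
    payload_bytes = codec_kbps * packet_ms / 8
    return (payload_bytes + header_bytes) * 8 * packets_per_second / 1000


# G.711 at 64 kbps with 20 ms packets: 160 B payload + 40 B headers per packet.
per_call = call_bandwidth_kbps(64)
print(per_call)        # 80.0
print(per_call * 10)   # 800.0 kbps for ten concurrent calls, each direction
```

The raw figure is small, which is why stability (low jitter and loss) usually matters more for remote agents than headline download speed.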

2. Outdated hardware or low-quality headsets

Sometimes the weakest link is right on the agent’s desk (or ear).

An old phone with a crackling handset, or a cheap $5 headset with poor mic quality, can drag MOS down even if the network is fine.

Agents might sound distant or customers might hear background noise.

Improvement:

- Invest in modern VoIP phones or softphone setups with high-quality headsets.

- Use noise-canceling microphones for busy environments.

- Ensure that agents know how to use their hardware properly (e.g., microphone positioned correctly).

- Keep firmware/drivers updated too.

By regularly replacing aging hardware and using certified devices, you eliminate a lot of audio issues at the source.

3. Codec mismatches or low-quality codecs

A codec is the software that compresses/decompresses the voice audio in a call.

Different systems might use different codecs.

If there’s a mismatch or if you’re stuck using an older codec like G.711 across a complex call path, it can cap your quality.

For example, G.711 (common in many systems) has a max MOS ~4.4, whereas newer codecs like Opus can produce higher fidelity audio.

Improvement:

Wherever possible:

- Standardize on high-quality codecs end-to-end.

- Opt for ones like G.722 (wideband HD voice) or Opus, which deliver better audio quality than legacy narrowband codecs.

- Ensure all segments of your call flow support the chosen codec to avoid fallback to a lower-quality one.

By aligning on a modern codec, you can often boost your average MOS because the audio has higher clarity and bandwidth.

4. Poor agent internet setups (wi-fi vs. Ethernet)

This one is specific but increasingly relevant with work-from-home agents.

Wi-Fi can be convenient, but it’s also prone to interference, signal drops, and variability (jitter).

An agent on flaky Wi-Fi might experience moments of high packet loss or jitter, causing their voice to drop out briefly, which is bad news for MOS.

Improvement:

Encourage or require wired Ethernet connections for at-home agents whenever possible.

A wired connection is typically more stable with lower latency and jitter than Wi-Fi.

If Wi-Fi must be used, agents should have a strong signal.

Additionally, ensure agents’ routers are up to date, and consider providing a VoIP-optimized router if their home setup is mission-critical.

Some companies even provide a separate network device or a 4G LTE backup to ensure the agent always has a stable line.

The key is reducing those wireless hiccups that translate directly into MOS dips.

5. Inconsistent VoIP configuration across teams

If your call center operations are spread across multiple sites or if you have a mix of technologies, inconsistencies can cause quality issues.

One office might have perfectly tuned network settings for VoIP, while another office might have misconfigured settings (e.g., not prioritizing voice traffic, using outdated SIP trunk settings, etc.).

These inconsistencies lead to uneven MOS scores.

Some teams will have great calls, others will be plagued by issues.

Improvement:

- Audit and standardize your VoIP configurations and infrastructure. This includes ensuring all locations use proper QoS on their networks, up-to-date SIP trunk configurations with sufficient capacity, and consistent firewall/traffic rules for VoIP.

- Work closely with your VoIP provider to apply best practices everywhere.

- Also, perform regular test calls from each site to monitor MOS and pinpoint anomalies.

By aligning configurations, you provide a uniformly high quality of service, so no team is left struggling with technical problems that others don’t have.

6. No post-call QA checks for voice quality

“If you don’t measure it, you can’t improve it,” as the saying goes.

Some call centers focus solely on conversational quality in QA and ignore technical quality unless a severe issue happens.

In such cases, low MOS could be happening regularly without anyone noticing a pattern.

Improvement:

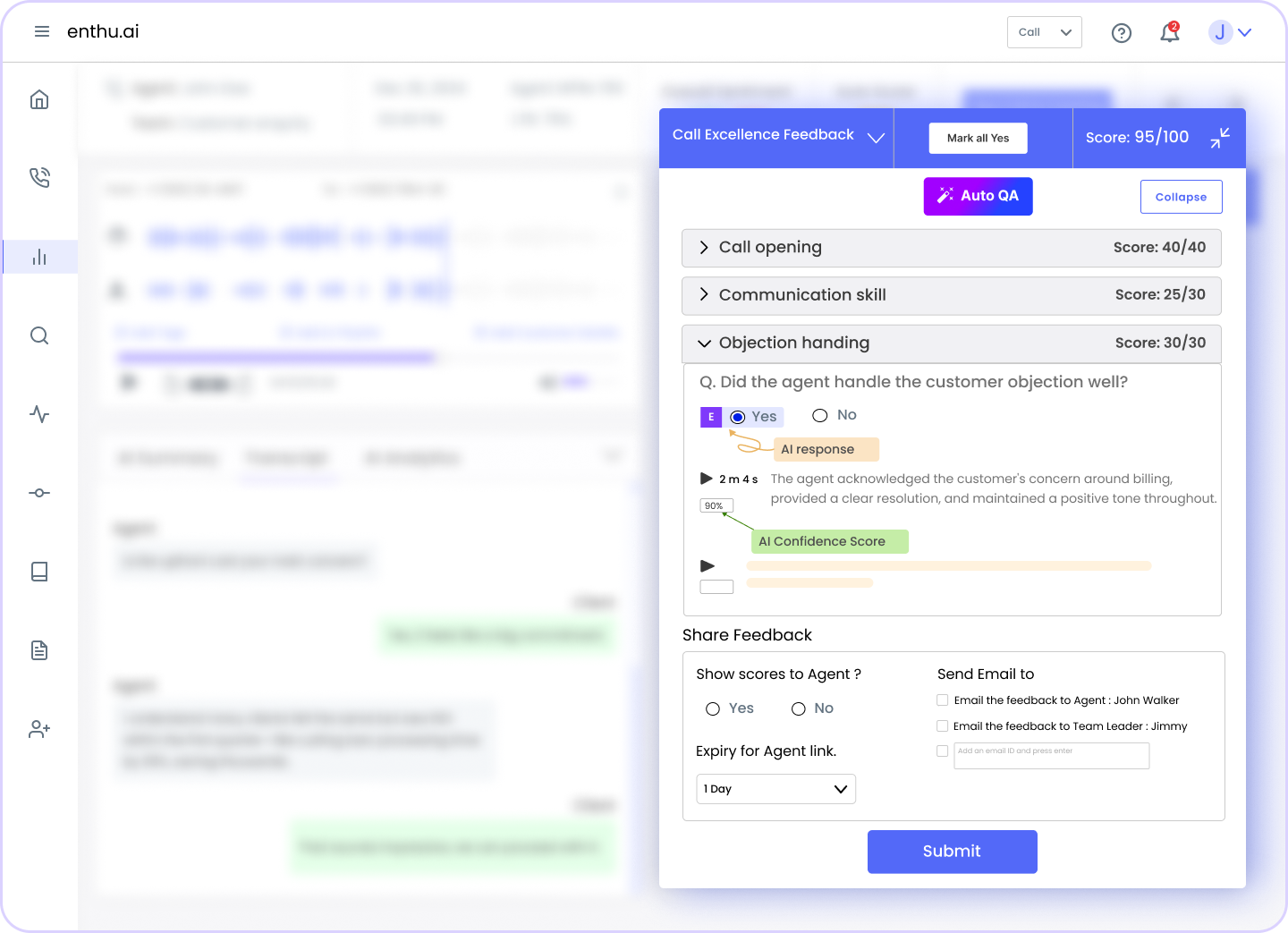

Integrate voice quality monitoring into your QA process.

This could be as simple as adding a question on the QA scorecard like “Was call audio quality clear? (Yes/No)” for manual reviewers, or as advanced as using software that logs MOS for every call.

The point is to actively check and record when voice quality is subpar.

When you do this, you might discover trends (e.g., certain times of day, certain carriers, or certain agents consistently have lower MOS).

You can then take targeted action, perhaps upgrading an agent’s equipment or contacting your carrier about network routes.
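Once MOS is logged per call, spotting these trends is a grouping exercise. The sketch below is a minimal illustration: the record fields (`agent`, `carrier`, `mos`), the sample data, and the 3.6 threshold are all assumptions for the example, not the schema of any particular QA tool.

```python
from collections import defaultdict
from statistics import mean


def low_mos_groups(calls: list[dict], key: str,
                   threshold: float = 3.6, min_calls: int = 2) -> dict:
    """Return groups (by agent, carrier, etc.) whose average MOS is below threshold."""
    buckets = defaultdict(list)
    for call in calls:
        buckets[call[key]].append(call["mos"])
    return {
        group: round(mean(scores), 2)
        for group, scores in buckets.items()
        # Require a few calls so one bad call does not flag a whole group.
        if len(scores) >= min_calls and mean(scores) < threshold
    }


# Hypothetical call log.
calls = [
    {"agent": "A", "carrier": "X", "mos": 4.2},
    {"agent": "A", "carrier": "Y", "mos": 4.1},
    {"agent": "B", "carrier": "X", "mos": 3.2},
    {"agent": "B", "carrier": "Y", "mos": 3.4},
]
print(low_mos_groups(calls, "agent"))    # {'B': 3.3}
print(low_mos_groups(calls, "carrier"))  # {}
```

In this made-up sample, the problem follows agent B rather than either carrier, which points to that agent's equipment or connection as the first thing to check.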

7. Not using automated QA tools (missing fast alerts)

If you rely only on sporadic manual call reviews, you’re likely missing a lot of issues.

QA teams traditionally could only listen to a tiny sample of calls (often 5 calls per agent in a month) – meaning 95%+ of calls went unchecked.

It’s quite possible that several of those unchecked calls had terrible audio, and nobody knew.

Improvement:

Implement an automated QA solution that monitors 100% of calls for quality issues.

Tools like Enthu.AI can do this at scale.

They automatically score calls and can detect audio problems like silence periods or low MOS.

By having an AI “ear” on every call, you get instant flags for any call that had a poor customer experience due to voice quality.

Enthu, for example, highlights calls with potential audio issues so your team can review them quickly.

The speed here is crucial: if a trend emerges (say, MOS dropping on a certain line), automated QA will catch multiple instances immediately, whereas manual QA might stumble on it much later.

As a bonus, Enthu offers 5 free call evaluations, so you can try out how this automated monitoring works on your own calls.

Embracing such technology ensures that no bad call goes unnoticed, and you can fix problems fast, often before they escalate or repeat.

F. Best tools to monitor and track MOS

Monitoring MOS doesn’t have to be a manual chore.

There are tools specifically designed to track call quality metrics like MOS and help you ensure every call meets your standards.

Since we’re focusing on post-call or non-real-time analysis (i.e., evaluating calls after they happen, rather than troubleshooting live calls), here are some of the best tools and approaches to monitor MOS in a call center environment:

1. Contact center QA software

QA platforms like Enthu and Scorebuddy analyze call recordings for compliance, agent performance, and MOS.

They integrate MOS scoring into dashboards, letting you correlate call quality with customer experience.

These tools also offer trend reports, helping teams monitor voice clarity over time and improve training or process quality.

2. VoIP monitoring and analytics tools

Tools like Enthu, Twilio Voice Insights, Genesys, and Obkio track MOS, jitter, and packet loss in real time.

They provide deep network-level analytics and issue alerts for degraded quality.

Ideal for IT teams, these tools proactively test and monitor infrastructure to ensure consistent voice performance across VoIP-powered contact centers.

3. Analytics in phone systems or PBXs

Cloud platforms like Enthu, Avaya, Amazon Connect, and Zoom Phone include built-in analytics dashboards.

These show MOS and other voice quality metrics per call or user.

They help teams monitor call performance without extra software, offering a simple starting point for identifying and resolving call clarity issues.

Conclusion

In the competitive world of customer service, call quality is non-negotiable.

The Mean Opinion Score (MOS) is your go-to metric for ensuring every call is clear, reliable, and satisfying.

By understanding MOS, addressing common issues, and using tools like Enthu, you can elevate your call center’s performance, delight customers, and streamline operations.

Don’t let poor call quality hold you back.

Start monitoring MOS today and see the difference it makes.

With automated QA, you can pinpoint issues fast and keep your customers smiling.
